The classic problem

The most common application of binary lifting is the following:

"Let $$$G$$$ be a rooted tree with $$$N$$$ nodes. For $$$Q$$$ queries of the form $$$(K, V)$$$ we want to find the $$$K$$$-th ancestor of $$$V$$$ in the tree."

One simple, yet quite inefficient way of solving this problem is to build for every node an edge towards its direct ancestor. Then, in order to solve a query we simply have to traverse $$$K$$$ edges. This approach has complexity $$$O(NQ)$$$. We can try improving it by adding some "jump-edges".

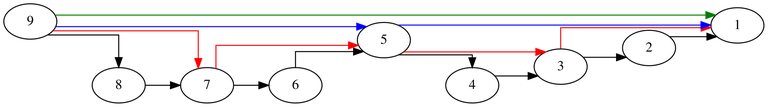

A jump-edge is simply an edge that starts from a node and is not directed towards its parent, but towards an arbitrary ancestor. These jump-edges will allow us to traverse large regions of edges in a single operation. Let's start by building jump-edges from each node towards its $$$2$$$-nd ancestor, $$$4$$$-th ancestor, $$$8$$$-th ancestor and so on. Note that in the above figure only a subset of these jump-edges is visible since otherwise it would clutter the image too much.

Let's define the length of a jump-edge as the number of normal edges that are skipped by traversing this jump-edge. For example, all the blue edges in the above figure have length 4. We can compute the destination of these jump-edges in a recursive manner by using already computed shorter edges.

More formally, we denote $$$far(h, i)$$$ = the $$$2^h$$$-th ancestor of $$$i$$$. Since $$$far(0, i)$$$ is simply the direct ancestor of $$$i$$$, the rest can be calculated easily using the recurrence $$$far(h, i) = far(h - 1, far(h - 1, i))$$$. Now to find the $$$K$$$-th ancestor we can greedily traverse the longest jump-edge that doesn't pass the $$$K$$$-th ancestor. We can do this by splitting $$$K$$$ into powers of $$$2$$$ and then traversing the edges of corresponding lengths in order. For example, let's search for the $$$7$$$-th ancestor of $$$9$$$. Since $$$7 = 4 + 2 + 1$$$, we can traverse the blue edge from $$$9 \to 5$$$, the red edge from $$$5 \to 3$$$ and the black edge from $$$3 \to 2$$$.

This approach has time complexity $$$O((N + Q)\log_2 N)$$$ and memory complexity $$$O(N\log_2 N)$$$.
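To make the recurrence concrete, here is a minimal sketch of the jump table and the query. It is not the linked submission: the name `BinaryLifting`, the 0-indexed nodes, the convention `parent[root] == -1` and the `-1` return value for a missing ancestor are all assumptions of this example.

```cpp
#include <cassert>
#include <vector>

// Sketch of the far(h, i) table. Assumed conventions (not from the original
// submission): 0-indexed nodes, parent[root] == -1, -1 means "no such ancestor".
struct BinaryLifting {
    int n, levels;
    std::vector<std::vector<int>> far;  // far[h][i] = 2^h-th ancestor of i, or -1

    explicit BinaryLifting(const std::vector<int>& parent)
        : n(static_cast<int>(parent.size())), levels(1) {
        while ((1 << levels) < n) ++levels;  // now 2^levels >= n > maximum depth
        far.assign(levels, std::vector<int>(n, -1));
        far[0] = parent;
        for (int h = 1; h < levels; ++h)
            for (int i = 0; i < n; ++i) {
                int mid = far[h - 1][i];  // halfway point of the longer jump
                far[h][i] = (mid == -1) ? -1 : far[h - 1][mid];
            }
    }

    // Splits k into powers of 2 and follows the matching jump-edges,
    // longest first.
    int kth_ancestor(int v, int k) const {
        assert(0 <= v && v < n && k >= 0);
        if (k >= (1 << levels)) return -1;  // deeper than any node can be
        for (int h = levels - 1; h >= 0 && v != -1; --h)
            if ((k >> h) & 1) v = far[h][v];
        return v;
    }
};
```

Building the table costs $$$O(N\log_2 N)$$$ time and memory, and every call to `kth_ancestor` follows at most one jump-edge per level.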

Link to the above problem and to a submission implementing this solution.

Can we do better?...

In this particular case, we can exploit the fact that the queries are given in an offline manner (we can read all the queries at the beginning and solve them together). We will explore the tree using a DFS and we will maintain in an array all of the ancestors of the current node (including itself). To maintain this array of ancestors we can simply do the following: when going from a node to its son we push the son to the end of the ancestor array, and when returning from a node to its father we remove it from the end of the ancestor array. As such, when we reach a node we can easily answer the queries related to it by accessing the array of ancestors: the $$$K$$$-th ancestor of the current node sits $$$K$$$ positions before the end of the array. Code implementing this idea can be found here.
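A possible shape of this offline solution is sketched below. It is my own illustration rather than the linked code: the function name `offline_kth_ancestors`, the `children` adjacency lists, the `(K, V)` pairs and the `-1` answer for a missing ancestor are assumptions. The DFS is written iteratively so that the ancestor array doubles as the DFS stack.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// children[v] lists the sons of v; queries[i] = {K, V} asks for the K-th
// ancestor of V. Returns one answer per query, -1 if the ancestor is missing.
std::vector<int> offline_kth_ancestors(
    const std::vector<std::vector<int>>& children, int root,
    const std::vector<std::pair<int, int>>& queries) {
    int n = static_cast<int>(children.size());
    assert(0 <= root && root < n);

    std::vector<std::vector<int>> at(n);  // indices of the queries asked at each node
    for (int i = 0; i < static_cast<int>(queries.size()); ++i)
        at[queries[i].second].push_back(i);

    std::vector<int> answer(queries.size(), -1);
    std::vector<int> path;                      // root ... current node
    std::vector<std::size_t> next_child(n, 0);  // next son to visit per node

    // Push v onto the ancestor array and answer every query attached to it.
    auto enter = [&](int v) {
        path.push_back(v);
        int depth = static_cast<int>(path.size()) - 1;
        for (int qi : at[v]) {
            int k = queries[qi].first;
            if (0 <= k && k <= depth) answer[qi] = path[depth - k];
        }
    };

    enter(root);
    while (!path.empty()) {
        int v = path.back();
        if (next_child[v] < children[v].size())
            enter(children[v][next_child[v]++]);  // go down to the next son
        else
            path.pop_back();                      // return to the father
    }
    return answer;
}
```

Every node is pushed and popped exactly once and every query is answered with a single array access, which is where the $$$O(N + Q)$$$ total comes from.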

However, what could we do if we first had to answer a query before getting the next one? Unfortunately, in this case we are not able to improve the time complexity beyond $$$O((N + Q)\log_2 N)$$$. However, we can reduce the required memory.

One way of reducing the required memory is to sacrifice time efficiency and change the base of the length of the jumps. Instead of precomputing jump-edges with power of $$$2$$$ lengths, we can precompute jump-edges of lengths that are powers of another integer $$$D$$$. Using this method we have time complexity $$$O((N + Q)D\log_D N)$$$ and memory complexity $$$O(N\log_D N)$$$. This may not seem like that much of an improvement, though I have used it time and again to fit unintended solutions in problems with extremely tight memory limits. A solution implementing this idea can be found here.
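As a rough illustration of the base-$$$D$$$ variant (not the linked solution), the sketch below stores the $$$D^h$$$-th ancestors and reads $$$K$$$ digit by digit in base $$$D$$$. The struct name `BaseDLifting`, the convention `parent[root] == -1` and the `-1` result for a missing ancestor are assumptions of this example.

```cpp
#include <cassert>
#include <vector>

// far[h][i] = D^h-th ancestor of i, or -1. Assumes 0-indexed nodes and
// parent[root] == -1 (conventions chosen for this sketch only).
struct BaseDLifting {
    int n, D, levels;
    std::vector<std::vector<int>> far;

    BaseDLifting(const std::vector<int>& parent, int base)
        : n(static_cast<int>(parent.size())), D(base), levels(1) {
        assert(D >= 2);
        long long reach = D;  // reach = D^levels
        while (reach < n) { reach *= D; ++levels; }
        far.assign(levels, std::vector<int>(n, -1));
        far[0] = parent;
        for (int h = 1; h < levels; ++h)
            for (int i = 0; i < n; ++i) {
                int v = i;  // take D jumps of length D^(h-1)
                for (int s = 0; s < D && v != -1; ++s) v = far[h - 1][v];
                far[h][i] = v;
            }
    }

    int kth_ancestor(int v, long long k) const {
        assert(0 <= v && v < n && k >= 0);
        for (int h = 0; h < levels && v != -1; ++h) {
            int digit = static_cast<int>(k % D);  // h-th digit of K in base D
            k /= D;
            for (int s = 0; s < digit && v != -1; ++s) v = far[h][v];
        }
        return k > 0 ? -1 : v;  // leftover digits mean K >= D^levels >= n
    }
};
```

Each level costs up to $$$D - 1$$$ jumps per query, which matches the $$$D\log_D N$$$ factor above, while the table only has $$$\log_D N$$$ rows.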

...without sacrificing execution time?

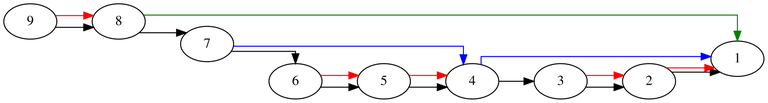

We can reduce memory to $$$O(N)$$$ without massively impacting the execution time. We can compute for each node its direct ancestor and a single jump-edge towards an arbitrary ancestor. When searching for the $$$K$$$-th ancestor we will try to traverse the jump-edge if it does not pass over the $$$K$$$-th ancestor, just like before. One way to choose the destination of these jump-edges can be observed in the picture above.

Let's denote the direct ancestor of $$$x$$$ as $$$parent_x$$$. We will add jump-edge $$$x \to y$$$ only if the jump-edges from $$$parent_x \to z$$$ and $$$z \to y$$$ exist and have the same length for an arbitrary $$$z$$$. It is easy to see that we can compute all such jump-edges in $$$O(N)$$$ by processing the tree from top to bottom.

What is not so easy to see is why we can reach the $$$K$$$-th ancestor in logarithmic time by using these edges. The proof for this is quite long and uses skew-binary number systems, so I will leave it as an exercise to the reader :) (a bound of $$$3 \lfloor \lg(N + 1) \rfloor - 2$$$ is shown in the original paper, An applicative random access stack).
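The construction rule and the query can be sketched in a few lines. This is only an illustration under my own conventions, not the linked source: the struct name `JumpPointers`, node `0` being the root, the root pointing to itself, and `-1` for a missing ancestor are assumptions. When the two equal-length jump-edges above the parent do not exist, the jump-edge of the new node simply points to its parent.

```cpp
#include <cassert>
#include <vector>

// One parent pointer and one jump pointer per node: O(N) memory in total.
// Nodes must be added top-down (a parent before its children).
struct JumpPointers {
    std::vector<int> parent, jump, depth;

    // Starts with a single root (node 0) whose pointers lead to itself.
    JumpPointers() : parent{0}, jump{0}, depth{0} {}

    // Adds a new leaf below p and returns its index.
    int add_leaf(int p) {
        assert(0 <= p && p < static_cast<int>(parent.size()));
        int v = static_cast<int>(parent.size());
        int z = jump[p];   // p -> z
        int y = jump[z];   // z -> y
        // Merge the two jumps only if they have the same length.
        int target = (depth[p] - depth[z] == depth[z] - depth[y]) ? y : p;
        parent.push_back(p);
        depth.push_back(depth[p] + 1);
        jump.push_back(target);
        return v;
    }

    int kth_ancestor(int v, int k) const {
        assert(k >= 0);
        if (k > depth[v]) return -1;
        int target_depth = depth[v] - k;
        while (depth[v] > target_depth)
            // Take the jump-edge unless it passes over the K-th ancestor.
            v = (depth[jump[v]] >= target_depth) ? jump[v] : parent[v];
        return v;
    }
};
```

Since `add_leaf` only looks at pointers of nodes that already exist, the same code also works when leaves are appended online.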

Source code implementing this approach can be found here.

Another way to do this is to choose $$$\frac{N}{\log N}$$$ random nodes and create from them a virtual tree where there is an edge between $$$x$$$ and $$$y$$$ if $$$x$$$ is an ancestor of $$$y$$$ and there are no other chosen nodes on the path between them. We can compute jump-edges in this virtual tree using $$$O(\frac{N}{\log N} \cdot \log N) = O(N)$$$ memory. To answer a query we find our closest ancestor that is part of this virtual tree and walk up to it (this ancestor is expected to be at a distance of around $$$\log N$$$ edges since we chose the virtual tree nodes randomly). From here, we can use the jump-edges from the virtual tree to get as close to our $$$K$$$-th ancestor as possible. After there are no more jump-edges that we can use, we can return to using the edges from the original tree, since our target is expected to be at a distance of only around $$$\log N$$$ edges.

Thanks to FairyWinx for suggesting this idea. I am extremely happy to see a nondeterministic method of achieving $$$O(N)$$$ memory. Here is a submission implementing this approach.

Computing the lowest common ancestor using binary lifting

Another common application of binary lifting is computing the lowest common ancestor (from now on abbreviated as LCA) of $$$2$$$ nodes. In addition to precomputing our jump-edges we should also compute the depth of each node. For simplicity, let's assume that we are using the original method to create jump-edges (the one using powers of $$$2$$$). Let $$$x$$$ and $$$y$$$ be $$$2$$$ nodes. To find their LCA we can apply the following strategy:

1. Let's assume that $$$depth_y \le depth_x$$$, otherwise we can just swap them.

2. Let $$$K = depth_x - depth_y$$$ and $$$z$$$ be the $$$K$$$-th ancestor of $$$x$$$. The LCA of $$$x$$$ and $$$y$$$ is the same as the LCA of $$$y$$$ and $$$z$$$, since the depth of the LCA is at most the depth of $$$y$$$, which is equal to the depth of $$$z$$$. We can substitute $$$x$$$ by $$$z$$$ and from now on assume that $$$depth_x = depth_y$$$.

3. If $$$x = y$$$ we can stop since their LCA is clearly $$$x$$$. If the direct ancestor of $$$x$$$ is equal to the direct ancestor of $$$y$$$, we can also stop since that direct ancestor is their LCA. Otherwise, let $$$K$$$ be the highest power of $$$2$$$ lower than or equal to $$$depth_x$$$. If the $$$K$$$-th ancestor of $$$x$$$ and the $$$K$$$-th ancestor of $$$y$$$ differ, then we can reduce the problem to finding the LCA of the $$$K$$$-th ancestor of $$$x$$$ and the $$$K$$$-th ancestor of $$$y$$$. Otherwise, if the $$$K$$$-th ancestors are the same, then from now on we should only consider smaller jumps.
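The strategy above can be sketched as follows. The struct name `LcaLifting` and the input conventions are assumptions made for this example only: node `0` is the root, `parent[0] == 0`, and `parent[i] < i` for every other node, so that depths can be filled in a single pass.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Assumes parent[0] == 0 (the root is its own parent) and parent[i] < i.
struct LcaLifting {
    int n, levels;
    std::vector<int> depth;
    std::vector<std::vector<int>> far;  // far[h][i] = 2^h-th ancestor, capped at the root

    explicit LcaLifting(const std::vector<int>& parent)
        : n(static_cast<int>(parent.size())), levels(1), depth(parent.size(), 0) {
        while ((1 << levels) < n) ++levels;
        far.assign(levels, parent);  // row 0 is the parent array itself
        for (int i = 1; i < n; ++i) {
            assert(0 <= parent[i] && parent[i] < i);
            depth[i] = depth[parent[i]] + 1;
        }
        for (int h = 1; h < levels; ++h)
            for (int i = 0; i < n; ++i)
                far[h][i] = far[h - 1][far[h - 1][i]];
    }

    int lca(int x, int y) const {
        if (depth[x] < depth[y]) std::swap(x, y);      // step 1
        int k = depth[x] - depth[y];                   // step 2: lift x to depth_y
        for (int h = 0; h < levels; ++h)
            if ((k >> h) & 1) x = far[h][x];
        if (x == y) return x;                          // step 3
        for (int h = levels - 1; h >= 0; --h)
            if (far[h][x] != far[h][y]) {              // jump only while they differ
                x = far[h][x];
                y = far[h][y];
            }
        return far[0][x];  // x and y are now distinct sons of the LCA
    }
};
```

Letting the root be its own parent means that jumps which would leave the tree simply stay at the root, so step 3 needs no special cases.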

The $$$O(N)$$$ memory approach is also compatible with LCA queries. Steps $$$1$$$ and $$$2$$$ are identical. As for step $$$3$$$, since $$$depth_x = depth_y$$$, the single jump-edges of $$$x$$$ and $$$y$$$ have the exact same length $$$K$$$, so we can check if they lead to the same node or not. If they do not, then we can reduce the problem to finding the LCA of their $$$K$$$-th ancestors. Otherwise, we can reduce the problem to finding the LCA of their parents.
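Here is how the $$$O(N)$$$ memory version might look. Again this is a sketch with assumed conventions rather than the linked code: the name `JumpLca`, node `0` as the root with `parent[0] == 0`, and `parent[i] < i` for all other nodes.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// LCA with one parent pointer and one jump pointer per node.
// Assumes parent[0] == 0 and parent[i] < i (conventions of this sketch).
struct JumpLca {
    std::vector<int> parent, jump, depth;

    explicit JumpLca(const std::vector<int>& par)
        : parent(par), jump(par.size(), 0), depth(par.size(), 0) {
        for (int i = 1; i < static_cast<int>(par.size()); ++i) {
            int p = par[i];
            assert(0 <= p && p < i);
            depth[i] = depth[p] + 1;
            int z = jump[p], y = jump[z];
            // Same rule as before: merge two jumps of equal length.
            jump[i] = (depth[p] - depth[z] == depth[z] - depth[y]) ? y : p;
        }
    }

    // Moves v up until it reaches the given depth.
    int lift(int v, int target_depth) const {
        while (depth[v] > target_depth)
            v = (depth[jump[v]] >= target_depth) ? jump[v] : parent[v];
        return v;
    }

    int lca(int x, int y) const {
        if (depth[x] < depth[y]) std::swap(x, y);  // step 1
        x = lift(x, depth[y]);                     // step 2
        while (x != y) {                           // step 3
            if (jump[x] != jump[y]) {              // the LCA is above both jumps
                x = jump[x];
                y = jump[y];
            } else {                               // the jumps may overshoot the LCA
                x = parent[x];
                y = parent[y];
            }
        }
        return x;
    }
};
```

The length of a jump-edge depends only on the depth of the node it starts from, which is why `x` and `y` stay at equal depths throughout the loop.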

Link to a problem that asks for the lowest common ancestor and source code implementing this approach can be found here with $$$O(N\log_2N)$$$ memory and here with $$$O(N)$$$ memory.

Path aggregates using binary lifting

Another useful application of binary lifting is extracting information about paths on trees. For example, the following problem can be solved using binary lifting:

"Let $$$T$$$ be a tree with weights on the edges. For $$$Q$$$ queries $$$(x, y)$$$ we want to find the maximum weight of the edges on the path from $$$x$$$ to $$$y$$$."

To solve this problem we can maintain additional information on the jump-edges such as the maximum over all of the normal edges that are passed by this jump-edge. To extract the maximum weight on a chain $$$(x, y)$$$, we will first define $$$z$$$ as the LCA of $$$x$$$ and $$$y$$$ and split the path $$$x \to y$$$ into $$$x \to z$$$ and $$$z \to y$$$. To find the maximum on the path $$$x \to z$$$ we can simply split it into jump-edges, just like before, and compute the maximum over all of these jump-edges.
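A compact sketch of this idea is given below. The LCA search and the maximum are computed in the same pass, which is one possible way to organise it and not necessarily what the linked code does. The name `PathMax` and the input format are assumptions of the example: `parent[0] == 0`, `parent[i] < i`, and `weight[i]` is the weight of the edge between `i` and `parent[i]` (`weight[0]` is ignored).

```cpp
#include <algorithm>
#include <cassert>
#include <limits>
#include <utility>
#include <vector>

struct PathMax {
    static constexpr long long NEG = std::numeric_limits<long long>::min();
    int n, levels;
    std::vector<int> depth;
    std::vector<std::vector<int>> far;         // far[h][i]  = 2^h-th ancestor of i
    std::vector<std::vector<long long>> best;  // best[h][i] = max edge on that jump

    PathMax(const std::vector<int>& parent, const std::vector<long long>& weight)
        : n(static_cast<int>(parent.size())), levels(1), depth(parent.size(), 0) {
        assert(parent.size() == weight.size());
        while ((1 << levels) < n) ++levels;
        far.assign(levels, parent);
        best.assign(levels, weight);
        if (n > 0) best[0][0] = NEG;  // the root has no edge above it
        for (int i = 1; i < n; ++i) depth[i] = depth[parent[i]] + 1;
        for (int h = 1; h < levels; ++h)
            for (int i = 0; i < n; ++i) {
                int mid = far[h - 1][i];
                far[h][i] = far[h - 1][mid];
                best[h][i] = std::max(best[h - 1][i], best[h - 1][mid]);
            }
    }

    // Maximum edge weight on the path x -> y; NEG if x == y (empty path).
    long long query(int x, int y) const {
        long long ans = NEG;
        if (depth[x] < depth[y]) std::swap(x, y);
        int k = depth[x] - depth[y];
        for (int h = 0; h < levels; ++h)
            if ((k >> h) & 1) { ans = std::max(ans, best[h][x]); x = far[h][x]; }
        if (x == y) return ans;
        for (int h = levels - 1; h >= 0; --h)
            if (far[h][x] != far[h][y]) {
                ans = std::max({ans, best[h][x], best[h][y]});
                x = far[h][x];
                y = far[h][y];
            }
        return std::max({ans, best[0][x], best[0][y]});  // last edges into the LCA
    }
};
```

The same layout works for any aggregate that can be combined over overlapping or adjacent pieces of a path, such as minimum or sum (for sum, only non-overlapping jumps are used here, so it stays correct).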

Unfortunately, binary lifting can't be extended to cases where the weights of the edges are updated or the structure of the tree is affected. For the former it is necessary to use heavy-light decomposition, and for the latter it is necessary to use a link-cut tree. These algorithms are quite long and tedious to implement, so it is preferable to use binary lifting when possible.

Since I could not find a problem that asked for this directly, here is one that can be reduced to this and here is code implementing this idea.

Additional problems

- B. Lynyrd Skynyrd suggested by TheScrasse

- D. Cut suggested by TheScrasse

- Rmi Sum Zero suggested by SlavicG

- Apio Jumps suggested by FairyWinx

- E. Madoka and the Sixth-graders suggested by FairyWinx

- Towers 3.0 suggested by FairyWinx

Conclusion

I hope you all liked this article and I encourage you to share problems that can be solved using this technique and maybe additional tricks related to it that I have missed. Thanks for all of your suggestions:)

Amazing tutorial! This type of content should appear more on codeforces!

Thank you, stranger I have never met in my life.

Why you have ruin all fun stranger :(

Who

Asked

Why did you ping me , stranger ?

I was gonna say "Cares", but anyways, nice to meet you stranger

On catalog when?

Let me ping the catalog manager, -is-this-fft-

But it is not adamant blog!

Useful!

stfu furry

Great tutorial!

Additional problem: RMI20 Sum Zero

Geniosity sir

Thank you for this editorial

Can't wait for the next one <3

Additional problems:

If the queries are offline, you can answer most of their versions in O(1) each using a DFS and querying the stack of path to the root as appropriate when we enter the queried vertex.

Nice tutorial anyway :)

Amazing Tutorial. Thanks Alex.!!

Thank you for sharing this amazing tutorial!

yusful

Nice educational content like this are always welcome. Thanks a lot.

More not obvious problems for this topic:

You can also do binary lifting for linear memory in a slightly different way: Choose $$$\frac{n}{\log_2{n}}$$$ random vertices, build binary lifting only on these vertices, and then climb only this tree, and then jump on direct ancestors

https://mirror.codeforces.com/blog/entry/74847

problem

How to get the intuition for binary lifting in this problem?

Amazing tutorial, sir! I am 21 years old and I've just learned this technique! Survey: How old were you when you learned binary lifting?

It's common knowledge for 5th graders in China

(20)I am in my college years

These amazing tutorial helps us a lot

and also you guys who give additional problems with some more interesting technique,

I Thank you all!

Very neat trick! Thank you for sharing!

I'm not sure if this makes any sense, but this idea seems very similar to a Fenwick tree, except we allow ourselves to not take the full "prefix block" that we might be storing, by instead taking the parent pointer up by one. Perhaps this idea can be used for a simpler proof?

i am sorry to inform you that literally no one cares

can someone explain how the time complexity of dfs approach this one is O((N+Q))?

Another Problem

Another interesting problem.

One of the nicest binary lifting problem I have yet encountered

Also, binary lifting can be generalized to be used on any graph with out-degree 1 for each node. Here's a problem that uses this.

jeroenodb brought to my attention that the applicative random access stack was also described in this article by Urbanowicz.

By the way, the idea with picking $$$O(\frac{n}{\log n})$$$ vertices and making a virtual tree on them with their own jump tables can be made deterministic (assuming the tree is static) by picking vertices whose height has a certain remainder modulo $$$\log n$$$. Which remainder? Well, just look at your tree and pick the one with the smallest amount of vertices, it will have at most $$$\frac{n}{\log n}$$$.

Another randomized way that supports dynamically adding leafs is to follow skip-list approach, that is to make $$$\log n$$$ layers of virtual trees and for each vertex determine its number of layers by coin flips. Then, the average total size of virtual trees is

Though the implementation would be somewhat unpleasant, and the applicative stack is much nicer.

By the way, the bound of $$$3 \log_2 d$$$ for the applicative stack can be shown in a meaningful way.

Look at the picture from Urbanowicz blog:

You can see that it is a kind of self-repeating pattern, which we can describe as

Let $$$d_k$$$ be the largest possible amount of steps that you need, starting from some vertex in the block $$$A_k$$$ to reach one of its ancestors. Without loss of generality, one can say that

where $$$a_k$$$ is the largest possible amount of steps needed to reach some ancestor of the left-most vertex in the block $$$A_k$$$, and $$$b_k$$$ is the largest possible amount of steps needed to reach the right-most vertex in the block $$$A_k$$$ after starting in any of the block's vertices. In other words, if we do not start in the left-most vertex, and do not end in the right-most vertex, we will always need to pass through the splitting point between $$$A_{k-1} A_{k-1}$$$, hence $$$a_{k-1} + b_{k-1}$$$.

On the other hand, $$$a_k$$$ and $$$b_k$$$ may be expressed as

For $$$a_k$$$, this expression means that we step via $$$x$$$ to the leftmost vertex of the first $$$A_{k-1}$$$ block, then to the splitting point, then we utilize the pattern for $$$a_{k-1}$$$ in the second $$$A_{k-1}$$$ block. For $$$b_k$$$, it is optimal to utilize the pattern $$$b_{k-1}$$$ in the first $$$A_{k-1}$$$ block, reach the splitting point and do one more step to reach the right-most vertex of $$$A_k$$$.

From this we get that

Then we notice that after $$$k$$$ steps we have $$$d = 2^{k+1}$$$ vertices, so $$$d$$$ and $$$k$$$ relate as $$$k \sim \log_2 d$$$.

Nice! I also came across Urbanowicz's tutorial a while ago, and it felt weird that it got so little attention at that time. When I thought about it, it seemed to relate very closely to a segment tree/fenwick tree, so I naturally thought about making it support updates as well.

It's not hard to see that that is possible and achieves $$$O(\log n)$$$ complexity per update (given that the input "tree" is a chain), as one value changes at most $$$O(\log n)$$$ data in our structure.

The above can also be extended for general trees, if you label the vertices in BFS order (one value changes at most $$$O(\log n)$$$ ranges in the BFS order, as all vertices for a given link and a given "order" are contiguous and lie on the same depth in the tree). However, this ultimately turns out to be more limited and with similar complexity as other methods (i.e. HLD), so it's not as useful. Maybe you can somehow exploit the fact that there aren't many different levels with many vertices, but I haven't figured out how.

For the LCA I found a different approach a bit more easy to understand. I don't know if the time complexity is better or no.

What we can do is store the depths of all the nodes in an array.

Suppose we want to find the LCA of 2 nodes $$$x$$$ and $$$y$$$.

- Pick an ancestor of $$$x$$$. Let it be the $$$K$$$-th ancestor and let the ancestor be called $$$z$$$.

- Check whether $$$y$$$ has the same ancestor. We can do this by checking 2 things:

- Whether $$$z$$$ is no deeper than $$$y$$$. If it is not, we look for another value of $$$K$$$.

- Since we have $$$z$$$ and $$$y$$$ and we know both their depths, we can check if it is really an ancestor or not just by finding the $$$(depth[y] - depth[z])$$$-th ancestor of $$$y$$$. If this ancestor is $$$z$$$ then we might have an LCA.

Now to make sure that we have the lowest common ancestor in a reasonable time complexity we can use binary search. We binary search for the value of $$$K$$$ and then we can check all this stuff, I believe, in $$$O(\log n)$$$ time complexity.

Well, I don't know what the time complexity of this solution is, but I think it will be $$$O(Q \cdot \log(depth[x]) \cdot \log N)$$$. I am not sure about the time complexity values though. Do correct me if I am wrong.

Yeah ,i thought the same thing,but it's slow