Disclaimer

I found only one article about counter Fenwick Tree (Russian only), which is supposed to expand the capabilities of an ordinary Fenwick tree, and couldn't figure it out. However, recently I came up with an idea how to use this trick with binary liftings to modify the Fenwick tree and use it, for example, to answer range minimum queries. In addition, I will tell you about another tree that is quite similar to the Fenwick tree.

Our problem and that trick

Standart Fenwick Tree works only with reversible operations (sum, xor), because we can get answers fast($$$\mathcal{O}(\log_2{n})$$$) only on prefixes. It turns out that for the same complexity we can get answers on any intervals.

To better understand what will happen next, I recommend reading this article. In short, it says that for each vertex you need to store the maximum jump. These jumps are set as follows: if the length of the jump from the previous vertex is equal to the length of the jump from the vertex to which we jump from the previous one, then our current maximum jump will cover these two, otherwise the current jump simply leads to the previous vertex. If you look closely at the jump lengths, you can see that these are numbers that are equal to $$$2^n - 1$$$. Now, to jump from one vertex to another, you need to go by the maximum jump whenever possible, otherwise just one vertex up. Intuitively, it is clear that the number of jumps is $$$\mathcal{O}(\log_2{n})$$$, however, a strict proof it is quite complex and large.

How to use it in Fenwick Tree

Note that in the Fenwick tree we do almost the same thing! Indeed, we are literally jumping over segments of the size of $$$2^n$$$.

Let's take a closer look at the algorithm for answering to the request. Let's say the right boundary of the query is $$$r$$$. If the segment for which the element with the index $$$r$$$ is responsible is included in our query, we process it and make a jump along the segment. Otherwise, we process one element and reduce $$$r$$$ by one. Unfortunately, we won`t split the query into $$$\mathcal{O}(\log_2{n})$$$ segments. For example, if we choose $$$r = 2^n - 1$$$ and $$$l = 2^{n - 1} + 1$$$, we will process $$$\mathcal{O}(\log_2^2{n})$$$ segments. However, Implementation is very fast (maybe tests are not good enough).

Also this approach has other disadvantages. Firstly, it is quite difficult to generalize it to larger dimensions. For correct corner case transitions, it is necessary to maintain Fenwick trees of smaller dimensions. For example, for such a 2D structure, it is necessary to maintain two separate lists of 1D Fenwick trees (by columns and rows). For 3D, you need 3 lists of 2D trees and 3 matrices of 1D trees. The implementation turns out to be difficult, with a large constant, it is better to use a down Segment Tree. Secondly, there are difficulties with updates. Since a vertex can have $$$\mathcal{O}(\log_2{n})$$$ children in the Fenwick tree, updating each ancestor can take $$$\mathcal{O}(\log_2{n})$$$. As a result, an extra logarithm is added to each dimension. For a 2D tree, the complexity is really bad $$$\mathcal{O}(q\log_2^6{n})$$$.

Next tree, please

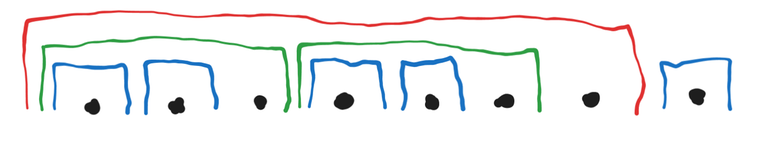

Let's try to make our own tree based on the idea of jumping. It will turn out something like this:

The rightmost element of the segment is responsible for the value on it. Note that now each vertex has only two children, and in general this tree is a forest of ordinary Segment Trees, but in a special way ordered. The construction of the tree is the same as the construction of maximum jumps, we answer to queries in the same way. However, now we can easily handle updates at the point.

Unfortunately, with this approach, we also cannot avoid difficult implementation at larger dimensions. Also, there are now additional problems if we want to make the tree implicit. The fact is that we must be able to get the length of a certain segment very quickly. The only algorithm that came to my mind works for $$$\mathcal{O}(\log_2{C})$$$, where $$$C$$$ is the maximum coordinate. At each step, we look for the maximum such $$$n$$$ that $$$2^n - 1$$$ is less than the index of the current element, and run the same algorithm from $$$idx - (2^n - 1)$$$. If $$$idx = 2^n - 1$$$, then this is the desired length. Thus, a logarithm is added to the complexity.

In any case, I think that such idea of range queries deserves attention. I hope you enjoyed the article.