Hi all, we participated in challenge24 as aimfund team (zeliboba, ArtDitel,Zhukov_Dmitry), took 6th place and here is my writeup. We really love challenge24 and 24-hour format, thank you guys for making it, and we are waiting for the next year. But I should say that writeup will be mostly negative because of overall impression.

UPD Psyho's 1st place writeup with solutions: http://mirror.codeforces.com/blog/entry/19023

.

.

The first negative moment was not the fact of a postpone, but the unknowing of exact schedule and orgs silence. We think it happened because of sponsor issue.

Next strange stage was the onsite network test and team placement. There were no seating chart so we picked the random room with 3/5 russian teams. There were no test targets too, we had neither server ip nor checklist, the only thing we did was setting switch and checking power adapters.

At the contest day we came in the same room and waited for the start. It was really unexpected to find only 6 tasks in problemset, we thought more would come, but it didn't. By the way, submit interface didn't work for the first 2-3 hours.

A, D and E were pretty straightforward tasks and almost every team solved them. Tests for 'E' were broken, and it took 2-3 hours to fix them.

F task meant to be online game with all teams competing each other in 20 rounds. But what we found was: incomplete statement, it looked like someone forgot to include 2 or 3 pages, and offline server, which went online only after 6-10 hours of competition. Next 6-8 hours jury was trying to fix it and finally they changed literally the whole tasks 4 hours before the end! I think it was one of the worst moments of the contest. Last thing to mention is the scores, which appeared 3-4 hours after the round end.

C task was the best one, but it wasn't enough to save the contest. In this task you have assembly language with: in, out, mov, rmov, jmp, jz, jnz, jneg, add, sub, div, xor, mul. You were given assembly compiler, binary runner and 3 binaries (without asm code, so you should decompile or reverse them somehow) and the task was to optimize the size and run-time of these binaries. Sounds tasty. We started optimising first binary, because it had no input, only output. Overall idea was to optimize the algorithm and then make constant micro optimisations. For example in second binary you should implement the sorting algo.

But there was a problem too: the scoring system. You could spend hours writing good solution (nlogn head-sort or merge-sort), optimize initial binary by ten times and get... ONE point of 400. It happened because of strange formula and unknowing what input data is used by the jury. It is a big array or small? How many tests do they have? We implemented 4 different algorithms, combined them and got 50 points only.

# simple insertion sort

mov 0, array_ptr

read_loop:

in 4

jz 4, end

rmov 4, *0

mov 8, 0

mov 12, 8

sub 12, four

swap_loop:

mov 4, *12

jz 4, swap_end

sub 4, *8

jneg 4, swap_go

jmp swap_end

swap_go:

mov 4, *12

mov 16, *8

rmov 16, *12

rmov 4, *8

sub 12, four

sub 8, four

jmp swap_loop

swap_end:

add 0, four

jmp read_loop

end:

mov 0, array_ptr

print_loop:

mov 4,*0

jz 4, 0

out 4

add 0, four

jmp print_loop

four:

dw 4

array_ptr:

dw array

zero:

dw 0

array:

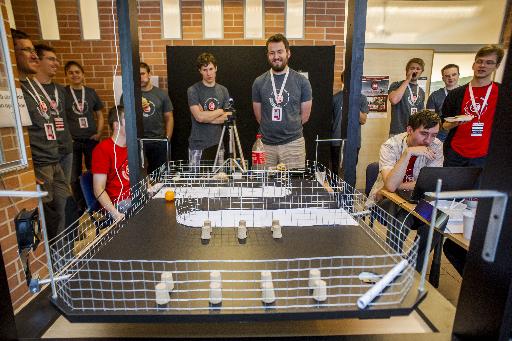

B task was the worst one. It's a task with a physical construction with a video stream of it. You controll the fan that moves around the field with ping-pong ball on it, and your target is to lead the ball into the hole. Your team have 16 attempts: 4 unrated, 4 without obstacles, 8 with them. One ball will cost you around 100 points, it's a difference between 4th, 5th and 6th places.

I think jury expected that someone will use video recognition but no one did. All teams just watched the video and played with a keyboard. And every team did almost the same thing every round, so the score was mostly the matter of luck and agility.

I understand how difficult it is to make such an event and I want to participate in the next ch24, so I hope that jury will look at this writeup and will try to avoid/overcome all mentioned issues in the future.

P.S. And thanks for massage, it's really great feature for such event ;) And here you can find photos

Where is aimfund round?

I should add that problem A had an incorrect sample output and the second sample tests was twice the same test case.

In C, I implemented quicksort (recursion = pain) and the initial, unoptimised implementation got around 20+ points (extrapolated from final scores; it seems the "real score" that depended on the best submission was proportional to the "submission score" that didn't depend on that), 50 points after optimising. We didn't have a problem with that. The real problem is that the optimal solution was to guess (or extract from the jury) that there's just one test for each subtask, spend a gorillion submits trying to guess that test, and then write a program that prints the answer. For C3, it was a gold mine.

How prove solution for task D?

So what's the reason to downvote this post?

Do you think there is some connection between the fact that the finals had to be moved two months forward, and the quality of the problems this year? :)

Probably. One of the e-mails mentioned a "new task writer team" along with the delay.

AFAIK, there are two separate teams: the organization and the task-writing team. The organization changes every year, while the task-writing teams rotates every few years. This was their first year, so I'd expect (and hope) that each of the following years is going to be better. And don't ask me, why they change the whole team. I have no clue and this doesn't make too much sense.

My wild guess is that the reason for moving the date was that the venue was suddenly unavailable (or maybe also lack of sponsors too?). Considering that probably most of the problems were prepared the night before, I don't think that changing the date would have any effect on the quality of the tasks ;)

You are right, there were these two separate, and independent changes this year. There are a few minor details to be refined.

The organization (the operative side) does not fully change every year, but it's true that most of those guys are there only for 1 or 2 years, with some rare exceptions.

The task writer team is a different story. I was part of the previous team; we did our first season as taskwriters in 2010 and did our last in 2014. There were some guys leaving in 2010, and we got some new guys joining in the last 2 years.

Replacing the whole team is obviously not a good idea, and this was not our intention. It was the result of an unlucky combination of circumstances that this has happened.

Also please note that preparing a challenge24 round changed a lot over time: the average finalists of nowdays is more skilled than we were in the mid 2000s. Just compare a 2013 or 2014 EC or finals to the ones before 2006 (they are available in the archives on ch24.org). It literally takes months to prepare the problem set of an EC or finals properly, so it's probalby not realistic to expect task writers to keep doing that for decades — thus the rotation is what we should consider normal.

Just to make things, I'm fully aware that it takes a lot of time to prepare good set of tasks. Especially hardware-related problems (like Ping-Pong or Ball from previous year) are huge time sink. At the same time, some tasks don't. The organizers itself made it clear that some tasks were prepared a night before. And well, one of them wasn't finished on time (Carnival Shootout).

FYI, few years ago I made a set of ~10 optimization tasks for 24h onsite contest (Imagine Cup had Algorithms category long time ago), so I have been on the other side of the barricade too.

My biggest grief was that suddenly whole team was changed. You could literally take the problemsets from 2014 (or 2013) and 2015, shuffle the tasks between those years and you would end with two superb contests. It's bad for the contest's PR if it has very uneven level.

Together with johnasselta and mareksom we took the 4-th place. I wouldn't call task D straightforward but sure, those ADE wasn't enough. Because later contestants had to deal only with 1) hand controlled ping-pong ball, 2) C task and 3) task which started after 12 hours or so. Ping-pong had nothing in common with algorithms or programming at all. C task was ok but scoring and that thing with bin-searching one test (input) was terrible. I don't agree with you ArtDitel about incomplete F statement. There were some minor mistakes but nothing serious. Another thing is that it seems the organizers didn't think how the game will look like with their scoring. Every team was doing the same thing and there weren't many ways to improve it. They said that they would change the rules 3 hours before the end so it is ok. I guess they saw the game became boring (all good strategies were getting 85-100 points I think) and decided to change something. Again the scoring wasn't good in my opinion and game was... strange. The optimal way to adapt to points for deleting was to destroy other guys for 3/4 of game and to build only in the end. Then we could ignore points for destroying. Was it intended?

Dear organizers: I didn't like IRC as a way to communicate with contestants but it's only a preference, nothing important. More serious issues: We didn't know where to sit. Network testing didn't have any task. A had wrong sample test, E had invalid tests and F wasn't working for more than 10 hours. Please, test something before the contest. Don't say "we will start scored rounds at X o'clock" when you don't know it. Don't give contestants more time for not-scored rounds because e.g. you want them to do better. We were said new version of F will start at 6:00 and first round with this version will be in interval 6:00-7:00. Then don't change it to 6:30-7:00. It was like "to the guys who managed to code something working quickly — we are sorry, we'll give extra time to all teams" (it's not a quote, I made it up).

I always enjoy 24h contests and I hope you will improve something in the next year. And consider making more tasks. Then nobody will complain about stupid task with ping-pong because it will be only a part of a contest, not something deciding about places so much. Btw. B (yes, ping-pong) and C were great ideas for tasks. I was doing D and F during the contest. I enjoyed the first one (though I made many mistakes there) and the second one gave me some fun too. Thanks for the contest!

Talking of B, I wouldn't say no team used video recognition. I actually was augmenting some stuff on top of the picture (though the only thing I had to recognize was the ball to provide aiming guidance), and I wrote UI to control everything very precisely with mouse. The problem was that sending 5 commands per second made the fan go completely crazy.

I want to know the author's or any good solutions to C3. We noticed that there is some fractal at [-200..200]x[-200..200] square, but also we noticed that not all of 'X'-s are in this square. For example, a[2^24][2^24] = 'X'. We tried to optimize the author's solution by constant, but gained 0 real score.

If all submitted solutions depend on very, very weak tests, then it is really very bad task.

I posted our solutions for C in my write-up. You can blame the relative scoring for having small scores.

Using such high values for C3 doesn't make any sense. Integers will overflow during the computations, so this is a very weak argument. This is original C3 binary converted to C++ (made by meret)

In Challenge24 tests are always given. Solving them by hand is a perfectly fine method, so tailoring your solution towards the used tests (even if they are not explicitly given) is fine in my eyes. What wasn't fine is that organizers gave wrong impression that there multiple tests for each subproblem.

Using such high values for C3 doesn't make any sense? It is not obviously. I thought that if I had to optimize given solution, then after optimization at any correct input it should work exactly how it worked before. Nothing was said about "high input values" in problem statement.

Yes, I agree, that solving task by submitting solution dozens of times is good method, but this time it was hard to guess that it will work.

What is correct input is not strictly defined. Essentially, you got the program that solves the problem, but you don't know what the correct input format is. You have to make an educated guess for the limits. Seeing the provided program it's clear that it doesn't work properly for very big inputs. Using this information we can deduce that there won't be big inputs.

Of course, I'm making an assumption that problem setters designed their problem in line with everything else.

If you were familiar with HELLINET night competition, you wouldn't post this! the comptition started at 23:00 (in the morning we had another contest!). Firstly, we should play with WoW to get the idea what it is. Our task was to write an console application that have a winning strategy for that game (trust me, it was more like Field Runners than WoW)! The competition started and the judge wasn't ready! we were coding for nothing for more than 2 hours, then the judge was ready to use, but there was a problem: it can be used only on WINDOWS! therefore, programmers like me who have a laptop with OS other than Windows, couldn't code on their own laptop. after that, the server was changing the towers and other stuff in the game hourly so that we should change our strategy every hour. Apart from that, the server was gone down several times and we couldn't submit any codes. Actually, I think they were developing the server during the contest! The competition what continued till 5-6 AM in the morning and the coders didn't sleep be drinking coffee! Finally we had a short nap and the programs were run on the server for the contest. Although one of my friends(ITDOI) were coded with one of the best algorithms he failed because of the changing rules before the final judgement! Oh, another thing is we ran out of coffee in the beginning. there wasn't anything to eat or drink other water. The contest was awful but we had a lot of fun there with dkjsfkhg, BARF and Batman :D

UPD: My mistake, it was Warcraft, not WoW! but it was that bad I've written

Hellinet night tournament wasn't official contest my fake freind :-)

BTW it had it's own mistakes, it was like field runner but more like what we played first , and that wasn't WoW (World of Warcraft) but was one of Warcraft maps that was exactly what we should had done back then! (I wonder how can't you make difference between WoW and Warcraft) :-)

All of your problem is difference between Warcraft and WoW?

it wasn't official but still it was a competition!