Hello Codeforces,

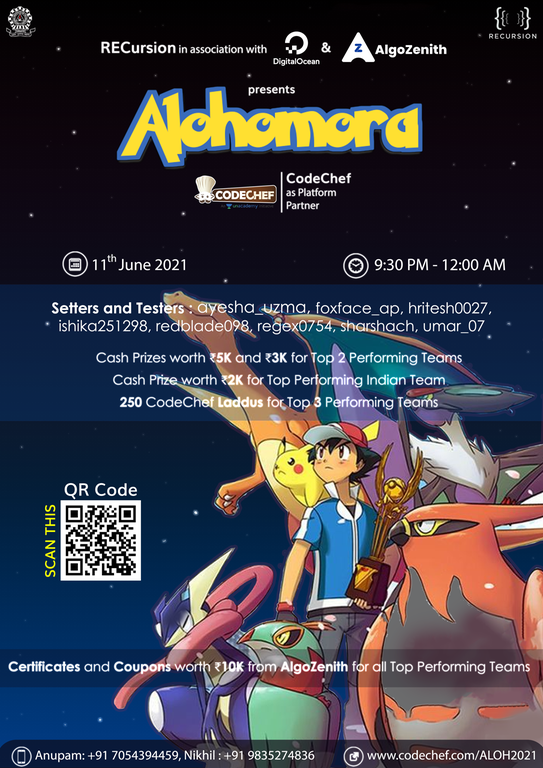

RECursion, the coding community of NIT Durgapur, is organizing ALOHOMORA, an ACM-ICPC style contest. The contest will be held on 11th June at 21:30 IST.

The contest will be held on the CodeChef platform. There will be 8 problems which are to be solved in 2 hours and 30 minutes.

Participants need to register themselves in teams of 3 (maximum).

Link : https://www.codechef.com/ALOH2021

Prizes:

Cash Prizes worth ₹5K and ₹3K for Top 2 Performing Teams

Cash Prize worth ₹2K for Top Performing Indian Team

250 CodeChef Laddus for Top 3 Performing Teams

Exciting Goodies from Digital Ocean for Top 3 Performing Teams from NIT Durgapur

100 CodeChef Laddus for Top Performing Team from NIT Durgapur

Certificates and Coupons worth ₹10K from AlgoZenith for all Top Performing Teams

For further information, contact:

Nikhil Vashistha: +91 9835274836

Anupam Singh: +91 7054394459


Looking forward to it!

Excited for this!

Looking forward to unlocking some good problems!

Alohomora...

Does registration close after the contest begins?

Looking forward to it !!!

You will all enjoy the round.

As evident from the previous Alohomoras, the contest should be amazing !

Expect some great problems from this contest. Good luck to all.

"Certificates and Coupons worth ₹10K from AlgoZenith for all Top Performing Teams"... thats mean top performing 3 teams?

Yes, top $$$3$$$ teams will be given certificates and coupons from AlgoZenith

Reminder: Contest starts in 1 hour 30 minutes. On the contests’ page, toggle the “Show all contests” button to see the link, or access it directly from here. GLHF!

Reminder : Contest starts in $$$10$$$ minutes.

Thanks for the contest!

How do you solve BLAZE and TATAKAI? For BLAZE we think the number of possible strings is about N, but we haven't gotten beyond that.

How do you solve Team Rocket and Jiggly Puff? Help us... god will help u :D

We didn't do Team Rocket, but Jiggly Puff was just perfect bipartite matching: add an edge between each element (identified by its index) and every divisor of its value that is <= N. The problem then reduces to finding a perfect matching on this graph (at least one exists as per the problem statement).

what is the time complexity?

Team Rocket: You can maintain two range sum trees, one for each dimension. Then, notice that all the information you need is the sum for a suffix of points considering the X coordinate and the prefix of points considering the Y coordinate.

Jiggly Puff: Create a bipartite graph with $$$2N$$$ nodes: one side represents the indices and the other represents the values. Add an edge from value $$$V$$$ to index $$$i$$$ if $$$i$$$ divides $$$V$$$. Run bipartite matching; the matching is guaranteed to be complete. Print the matches.
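The matching described above can be sketched with Kuhn's augmenting-path algorithm. This is only an illustration under assumptions: the function name `match_values_to_indices` and the input/output shapes are made up here, indices are taken as 1-based, and the edges are built by brute force. Kuhn's algorithm is $$$O(V \cdot E)$$$ in the worst case, so for large $$$N$$$ one would likely enumerate divisors more cleverly and switch to Hopcroft-Karp.

```python
import sys


def match_values_to_indices(values):
    """Place every value at a distinct 1-based index that divides it.

    Returns the rearranged list, or None if no perfect matching exists.
    Hypothetical helper: names and I/O format are assumptions.
    """
    n = len(values)
    sys.setrecursionlimit(max(10000, 2 * n + 100))
    # adj[v] = all indices i in 1..n such that i divides values[v].
    adj = [[i for i in range(1, n + 1) if values[v] % i == 0] for v in range(n)]
    owner = [-1] * (n + 1)  # owner[i] = position in `values` currently matched to index i

    def try_assign(v, seen):
        # Try to give value v an index, re-routing earlier values if needed.
        for i in adj[v]:
            if seen[i]:
                continue
            seen[i] = True
            if owner[i] == -1 or try_assign(owner[i], seen):
                owner[i] = v
                return True
        return False

    for v in range(n):
        if not try_assign(v, [False] * (n + 1)):
            return None
    return [values[owner[i]] for i in range(1, n + 1)]
```

For example, with values `[6, 1, 4]` the only valid arrangement puts 1 at index 1, 4 at index 2 and 6 at index 3.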

Can you please explain your Team Rocket solution in more detail!!

Consider the center as (X,Y) and the whole grid as -5e5 to 5e5 for both x and y axis.

You can get the underlined values in log N using BIT or similar DS.

Or simply ABCD - (AC) - (CD). ABCD is the sum of all values, so there is no need to query AB and BD.

Rocket is simpler though: we need to maintain two Fenwick trees, one for $$$X$$$ and one for $$$Y$$$, and note that the required difference is the difference between the sum of values at locations with $$$x \ge X$$$ and the sum of values at locations with $$$y < Y$$$.
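A minimal sketch of the two-Fenwick-tree idea, assuming point updates of the form "add a value at (x, y)" and queries asking for (sum over $$$x \ge X$$$) minus (sum over $$$y < Y$$$). The class names, the `limit` parameter and the update/query format are assumptions for illustration, not the actual problem's I/O; coordinates are shifted by `limit` so that negative values fit.

```python
class Fenwick:
    """Point update, prefix-sum query over positions 0..size-1."""

    def __init__(self, size):
        self.size = size
        self.tree = [0] * (size + 1)

    def add(self, pos, delta):
        i = pos + 1
        while i <= self.size:
            self.tree[i] += delta
            i += i & -i

    def prefix_sum(self, count):
        # Sum of the first `count` positions, i.e. positions 0..count-1.
        total = 0
        i = count
        while i > 0:
            total += self.tree[i]
            i -= i & -i
        return total


class TwoAxisSums:
    """One tree indexed by x, one by y (coordinates in [-limit, limit])."""

    def __init__(self, limit):
        self.limit = limit
        self.by_x = Fenwick(2 * limit + 1)
        self.by_y = Fenwick(2 * limit + 1)
        self.total = 0

    def add_point(self, x, y, value):
        self.by_x.add(x + self.limit, value)
        self.by_y.add(y + self.limit, value)
        self.total += value

    def difference(self, X, Y):
        # Values with x >= X: everything minus the values with x < X.
        right = self.total - self.by_x.prefix_sum(X + self.limit)
        # Values with y < Y.
        below = self.by_y.prefix_sum(Y + self.limit)
        return right - below
```

Each update and query costs $$$O(\log C)$$$ where $$$C$$$ is the coordinate range, which is why no 2D structure is needed for this particular difference.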

How did a 2D segment tree pass? Were the test cases weak?

Not a 2D segtree but rather two 1D segtrees: one based on x coordinates, the other based on y coordinates.

For rocket, go to https://judge.yosupo.jp/problem/point_add_rectangle_sum and find a submission that makes sense to you

We thought of BLAZE via suffix automaton and small-to-large merging. Think of it like this: a node in the automaton corresponds to a set of consecutive suffixes sharing one endpos set. Also, for a unique string from that set, the power is achieved from the closest 2 indices in its endpos set. So we can maintain the closest pair of indices using small-to-large merging on the suffix link tree of the automaton. And from there, it's basic math.

I think BLAZE can be done this way (I might be wrong); I ran out of time implementing it.

First we build the suffix automaton for the given string and get the tree of links. For each node (state) we can maintain the index where that suffix ends and the occurrences of the string at that state.

Also for each node of the tree, we have to find the two closest indices considering all nodes in its subtree (which can be done using DSU on trees).

Finally, for each node we know the distance between the 2 closest occurrences, the lengths of the strings that appear at that node and the number of times they appear in the string.
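The "two closest indices in each subtree" step can be sketched independently of the automaton. The sketch below assumes the suffix link tree is already available as `children` lists and that `own_positions[u]` holds the end positions attached directly to state `u` (a single position for a non-clone state, none for a clone). Both names are hypothetical. It uses a sorted Python list with `bisect`, so insertion is linear; a balanced set (for example `std::set` in C++) would be needed to reach the $$$O(N \log^2 N)$$$ bound.

```python
from bisect import bisect_left, insort


def closest_pair_gaps(children, own_positions, root=0):
    """For every node, the smallest gap between two positions in its subtree.

    Small-to-large merging: the smaller sorted bag is always inserted
    into the larger one. Nodes with fewer than two positions get inf.
    """
    n = len(children)
    inf = float("inf")
    gap = [inf] * n
    bag = [None] * n

    # Preorder via an explicit stack; reversed, it visits children before parents.
    order, stack = [], [root]
    while stack:
        u = stack.pop()
        order.append(u)
        stack.extend(children[u])

    for u in reversed(order):
        cur = sorted(own_positions[u])
        best = min((b - a for a, b in zip(cur, cur[1:])), default=inf)
        for c in children[u]:
            best = min(best, gap[c])
            small = bag[c]
            if len(small) > len(cur):
                cur, small = small, cur
            for pos in small:
                k = bisect_left(cur, pos)
                if k < len(cur):
                    best = min(best, cur[k] - pos)  # nearest position on the right
                if k > 0:
                    best = min(best, pos - cur[k - 1])  # nearest position on the left
                insort(cur, pos)
            bag[c] = None  # the child's bag has been absorbed
        bag[u] = cur
        gap[u] = best
    return gap
```

How the gap is turned into the final answer depends on the problem's definition of power, which is not reproduced here.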

Yes, that's correct. I implemented this approach and got AC. But I still wonder how $$$O(N \log^{2} N)$$$ was intended to pass in 1s. I tried a lot to optimize this approach but ended up trying my luck, and it passed lol.

How to solve Pikachu and Stones?

First, notice that if the peak is to be at an index $$$i$$$, the elements must be strictly increasing up to $$$i$$$ and strictly decreasing after it. Going from left to right, we can greedily take two adjacent elements, say $$$a[j]$$$ and $$$a[j + 1]$$$, and make $$$a[j] < a[j + 1]$$$ (given $$$j$$$ is to the left of $$$i$$$): if $$$a[j]$$$ is already less than $$$a[j + 1]$$$, you need $$$0$$$ operations; otherwise you need to make $$$a[j + 1] = a[j] + 1$$$, which requires $$$a[j] + 1 - a[j + 1]$$$ operations. But since we cannot traverse all the way to the left and right for every $$$i$$$, we keep a prefix sum array $$$p$$$ that tells you the number of operations needed to make a prefix strictly increasing; similarly, we keep a suffix sum array $$$s$$$ that tells you the number of operations needed to make a suffix strictly decreasing. Now, to make index $$$i$$$ a peak, the total number of operations is just $$$\min(p[i] + s[i + 1],\ p[i - 1] + s[i])$$$. The minimum over all indices is the answer.

I think $$$\min(p[i] + s[i + 1], p[i - 1] + s[i])$$$ won't work for cases like 1 2 3 3 2 1.
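One common way to handle a case like 1 2 3 3 2 1 is to also track the value each element ends up with, and to raise the peak itself to the larger of the two values demanded by its left and right sides. The sketch below assumes the only operation is incrementing one element by 1 and that the first or last index may also serve as the peak. Both are assumptions about the statement, and `min_increments_for_peak` is a made-up name.

```python
def min_increments_for_peak(a):
    """Minimum +1 operations so that a rises strictly to some peak, then falls strictly."""
    n = len(a)
    inc = [0] * n  # inc[i]: final value of a[i] once a[0..i] is strictly increasing
    p = [0] * n    # p[i]: operations needed to make a[0..i] strictly increasing
    for i in range(n):
        inc[i] = a[i] if i == 0 else max(a[i], inc[i - 1] + 1)
        p[i] = (p[i - 1] if i > 0 else 0) + inc[i] - a[i]

    dec = [0] * n  # dec[i]: final value of a[i] once a[i..n-1] is strictly decreasing
    s = [0] * n    # s[i]: operations needed to make a[i..n-1] strictly decreasing
    for i in range(n - 1, -1, -1):
        dec[i] = a[i] if i == n - 1 else max(a[i], dec[i + 1] + 1)
        s[i] = (s[i + 1] if i + 1 < n else 0) + dec[i] - a[i]

    best = None
    for i in range(n):
        left = p[i - 1] if i > 0 else 0
        right = s[i + 1] if i + 1 < n else 0
        # The peak must exceed both neighbours' final values.
        cost = left + right + max(inc[i], dec[i]) - a[i]
        if best is None or cost < best:
            best = cost
    return best
```

On 1 2 3 3 2 1 this gives 1 (raise either 3 to 4), whereas $$$p[2] + s[3]$$$ with 0-based indices would give 0.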

Is there any info about prize distribution?

We will contact you as soon as possible.

How to solve TATAKAI? Any idea or possible approach ?

It can be solved using centroid decomposition and some hardcoding, but we didn't have enough time to finish the solution.

Yeah I thought of centroid decomposition, but nothing after that. Can you please describe your solution in slight detail?

Could you please elaborate? I'm not quite sure how the string comparisons are to be made quickly.

It's wrong

I think here it will be difficult to maintain simple paths.

I didn't take the round, but is there any reason this solution doesn't work?

Let a state consist of the current vertex and the last vertex on the path (or $$$-1$$$, if no such vertex exists). Note that there are $$$O(N)$$$ possible states. Then, using a BBST, we maintain a list of processed states in increasing lexicographic order of their strings. To insert a new state, we take the minimal letter on any outgoing edge and, if multiple edges share that letter, we take the edge to the lexicographically minimal string. A naive implementation of this approach would be $$$O(N)$$$ per state in the worst case, but I believe it can be implemented efficiently using rerooting DP.

From here, we know which edge we'll take out of each state. The rest of the problem can be solved using binary lifting.

We wasted a lot of time on the problem in contest, and the key issue we found is: how do we find the "lexicographically minimum string"? If there are two very similar strings then how can we tell? Surely you can't store the string itself...

This is what the BBST does: if I’m not mistaken, we can traverse it to find the position of each candidate string, and we can pick the one that appears first.

I think the question is more "how do we make string comparisons at all". The initial "encoding" hash doesn't seem to help us make comparisons. I suppose there's some theory that we just haven't read here; author uses some kind of hash.

By the way, there is a more fundamental problem with your solution (we were considering similar solutions). Say you're at node $$$v$$$ with distance $$$x$$$ left, and in a different query you're at node $$$v$$$ with distance $$$y$$$ left. It's not obvious, but the next edge you go to isn't necessarily the same in both of these situations. The reason is that you won't be able to form the distance at all if you take some edges, even if lexicographically they are best.

Ahh, I see—the latter point is an issue (and I believe is fairly difficult to resolve, and any fix would involve some very messy implementation), nice catch!

String comparisons, FWIW, are handled by storing the first letter of each string and the BBST node corresponding to the remainder of the string (given that the rest of the string will be the string starting at some other state, under the assumption I made before). We can then compare using the first letter and, if those are the same, the positions of the two nodes can be compared. This is sufficient to handle comparisons necessary when inserting into the tree, in a hypothetical world in which the length constraint did not make things more difficult to work with.

We will solve all the queries offline. The topics required are centroid decomposition, rolling hash, LCA and binary lifting. Let's say we have a node $$$u$$$ and there is some query whose starting node is $$$u$$$. Then we can check all the paths (in the given tree) with one end at $$$u$$$ using the ancestors of $$$u$$$ in the centroid tree (these ancestors will be internal nodes of paths in the given tree). This can be done by maintaining, for each path length (of paths in the given tree) from the current root (the root of the subtree currently being traversed in the decomposed tree), an array entry holding the lexicographically smallest "path", which we update every time we visit a node.

After each update we need the lexicographically smallest answer possible. So for an update, we use binary search on hashes (which we can store in $$$O(N)$$$ and query in $$$O(1)$$$ time) together with binary lifting to find the first mismatching node / character between two candidate "paths", and we compare them accordingly.
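The "binary search on hashes to find the first mismatch" step is easiest to see on plain strings. The sketch below is a simplification and not the tree version: in the actual solution the hashes would live on tree paths and the character at the mismatch would be located with binary lifting. The modulus, base and function names are arbitrary choices made for illustration.

```python
MOD = (1 << 61) - 1  # large prime modulus (assumed choice)
BASE = 131           # base larger than any ASCII code used below


def prefix_hashes(s):
    """h[k] is the polynomial hash of the first k characters of s."""
    h = [0] * (len(s) + 1)
    for i, ch in enumerate(s):
        h[i + 1] = (h[i] * BASE + ord(ch)) % MOD
    return h


def first_mismatch(hs, ht):
    """Length of the longest common prefix, found by binary search on prefix hashes."""
    lo, hi = 0, min(len(hs), len(ht)) - 1
    while lo < hi:
        mid = (lo + hi + 1) // 2
        if hs[mid] == ht[mid]:
            lo = mid  # prefixes of length mid agree (up to hash collisions)
        else:
            hi = mid - 1
    return lo


def comes_before(s, t):
    """True if s is lexicographically smaller than t."""
    k = first_mismatch(prefix_hashes(s), prefix_hashes(t))
    if k == min(len(s), len(t)):
        return len(s) < len(t)  # one string is a prefix of the other
    return s[k] < t[k]
```

Each comparison costs $$$O(\log N)$$$ hash lookups once the prefix hashes are precomputed; locating the mismatching character by binary lifting on the tree adds another logarithmic factor, which matches the $$$O(\log^2 N)$$$ per update mentioned in the thread.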

The final time complexity is $$$O((N + Q) \log^3 N)$$$, in which $$$O(N \log N)$$$ comes from traversing each subtree of the centroid tree and $$$O(Q \log N)$$$ comes from getting the final path for each query. Also, every time we perform an update it takes $$$O(\log^2 N)$$$ (because of my implementation).

If anyone has another approach / solution, do mention it here. Hope everyone liked the problemset :P

solution

This much code... Huh. Anyways problemset was good.

(When) will the problems be available for practice?

It should have been a 3-hour contest.

When will the editorial be out?