| # | User | Rating |

|---|---|---|

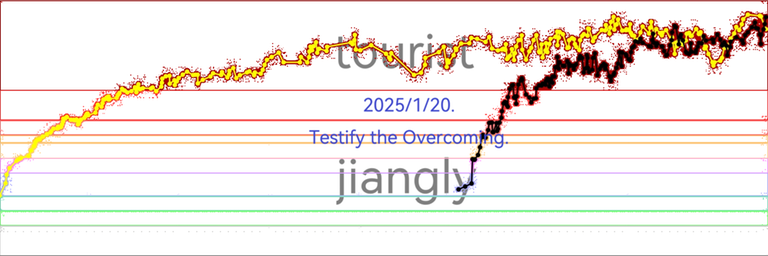

| 1 | jiangly | 3846 |

| 2 | tourist | 3799 |

| 3 | orzdevinwang | 3706 |

| 4 | jqdai0815 | 3682 |

| 5 | ksun48 | 3590 |

| 6 | Ormlis | 3533 |

| 7 | Benq | 3468 |

| 8 | Radewoosh | 3463 |

| 9 | ecnerwala | 3451 |

| 9 | Um_nik | 3451 |

| # | User | Contrib. |

|---|---|---|

| 1 | cry | 165 |

| 2 | -is-this-fft- | 161 |

| 3 | Qingyu | 160 |

| 4 | atcoder_official | 156 |

| 4 | Dominater069 | 156 |

| 6 | adamant | 154 |

| 7 | djm03178 | 151 |

| 8 | luogu_official | 149 |

| 9 | Um_nik | 148 |

| 10 | awoo | 147 |

Well, codeforcers, it's quite a while since I visited here last time.

If you read this blog, you might know that, a few days ago, NOIP 2024 took place. On Luogu, the difficulties of the four problems are defined as Blue, Green, Purple and Purple. However, when I saw that problem A is blue, I became confused and couldn't understand why it's blue. The forum of this problem in Luogu is CHAOTIC. What I mean is, there are people consider this problem as a problem even a newbie (yes, codeforces newbie) can solve without difficulty, while others say this problem has a difficulty similar to problems in Provincial Team Selection. I'd like to ask you, how difficult is this problem? Here comes the statement:

An integer n (1≤n≤105) and four 01-strings with a length of n are given, I will call them a,b,c,d below.

Now, you want to let string a and b be as similar as possible. So, you can perform the following operations:

- Operation 1: choose an integer i (1≤i<n), which satisfies ci=ci+1=1, then swap ai and ai+1.

- Operation 2: choose an integer i (1≤i<n), which satisfies di=di+1=1, then swap bi and bi+1.

After performing the operations above for arbitrary times, possibly 0 times, print the following value:

And here's my code which is able to pass all the samples Luogu given:

#include <iostream>

#include <string>

using namespace std;

const int N = 1e5 + 10;

int n, res, ta[2], tb[2];

string a, b, c, d;

void init_global()

{

}

void init_local()

{

cin >> n >> a >> b >> c >> d;

ta[0] = ta[1] = tb[0] = tb[1] = res = 0;

}

void run()

{

for (int i = 0; i < n and c[i] == '1'; i++)

{

ta[a[i] - '0']++;

}

for (int i = 0; i < n and d[i] == '1'; i++)

{

tb[b[i] - '0']++;

}

for (int i = 0; i < n; i++)

{

if (!ta[0] and !ta[1])

{

if (c[i] == '1')

{

for (int j = i; j < n and c[j] == '1'; j++)

{

ta[a[j] - '0']++;

}

}

else

ta[a[i] - '0']++;

}

if (!tb[0] and !tb[1])

{

if (d[i] == '1')

{

for (int j = i; j < n and d[j] == '1'; j++)

{

tb[b[j] - '0']++;

}

}

else

tb[b[i] - '0']++;

}

if (ta[0] and tb[0])

{

res++, ta[0]--, tb[0]--;

continue;

}

if (ta[1] and tb[1])

{

res++, ta[1]--, tb[1]--;

continue;

}

if (ta[0])

{

ta[0]--, tb[1]--;

continue;

}

ta[1]--, tb[0]--;

}

printf("%d\n", res);

}

int main()

{

int T = 1;

scanf("%d", &T);

init_global();

while (T--)

{

init_local();

run();

}

}Please write how difficult do you think of this problem in comment, and a Codeforces difficulty value if you can. Thanks!

UPD: The code I shown above is able to pass all the samples. The submission is here.

1. Up to the Clouds

x\oplus x=0

x\oplus x\oplus x=x

The answer depends on parity.

If m is even, then the result of the formula is always 0. Otherwise, it can be any positive integer, because all the elements in the sequence a is positive.

#include <iostream>

using namespace std;

const int N = 1e5 + 10;

int n, m;

void run()

{

scanf("%d%d", &n, &m);

puts((m & 1) ^ (!n) ? "Yes" : "No");

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}2. Song of Silent River

Lexicographically smallest.

If you can keep the current character a, then do it.

Since a is the lexicographically smallest character, then it's always optimal to insert a instead of others.

Use # in the string b greedily.

#include <iostream>

#include <string>

using namespace std;

const int N = 1e5 + 10;

int n, m, cts, idx;

string s, t;

int main()

{

cin >> n >> m >> s >> t;

for (int i = 0; i < n; i++)

{

cts += (t[i] == '#');

}

for (auto &i : s)

{

if (i != '#')

continue;

i = idx + 'a';

if (cts >= 25 - idx)

{

cts -= 25 - idx;

idx = 0;

continue;

}

idx++;

idx = (idx == 26 ? 0 : idx);

}

cout << s << '\n';

}3. Drifting Clouds' Rhythm

Denote c_{i,x} as the total number moments when exactly x roses are blooming when or before the moment i.

If we have a virtual rose which blooms in the range [1,i], then how to denote the number the answer will increase when we move that flower's blooming time to an infinitely faraway place?

The answer of the question raised in hint 1 is \sum_{j=2}^\infty c_{i,j}+c_{i,2}.

Prefix sum.

We can calculate the sequence c at each turning point. We call a moment a turning point when a rose blooms or fades at this moment.

Then, calculate value in hint 2 for each turning point.

It can be proved that moving [x,y] away is equivalent to move [1,y] away then move [1,x-1] back.

So use prefix sum to calculate answer for each rose.

#include <iostream>

#include <algorithm>

using namespace std;

const int N = 2e5 + 10;

using ll = long long;

int n, m, x, idx, cur;

ll mx, res[4], p2[N];

using pii = pair<int, int>;

pii dst[N << 2];

bool mp[N];

int main()

{

scanf("%d%d", &n, &m);

for (int i = 1; i <= n; i++)

{

scanf("%d", &x);

dst[++idx] = {x, i};

dst[++idx] = {x + m, i};

}

sort(dst + 1, dst + idx + 1);

for (int i = 1; i <= idx; i++)

{

auto &[a, b] = dst[i];

res[min(cur, 3)] += a - dst[i - 1].first;

if (!mp[b])

{

cur++;

mp[b] = true;

p2[b] = res[2] * 2 + res[3];

continue;

}

mx = max(mx, res[2] * 2 + res[3] - p2[b]);

cur--;

}

printf("%lld\n", res[1] + mx);

}1. Strong Password

It's optimal to make adjacent characters different.

If two adjacent characters are same, then they only add the total time by 1 seconds. If we insert a different character between them, the 1 will turn into two 2 s, which add the total time by 2 seconds. Since you can only insert 1 character in it, it's the optimal insertion method.

If that same adjacent pair doesn't exist, we can still add a different character between an arbitrary pair, then it will add the total time by 2 seconds.

Time complexity: O(n).

# include <iostream>

# include <string>

using namespace std;

const int N = 1e5 + 10;

string s;

int n, tp;

void run()

{

cin >> s;

n = s.size();

for (int i = 0; i < n - 1; i++)

{

if (s[i] == s[i + 1])

{

tp = 0;

while (s[i] == tp + 'a' or s[i + 1] == tp + 'a')

tp++;

s.insert(s.begin() + i + 1, tp + 'a');

break;

}

}

if (n == s.size())

{

if (s.back() == 'z')

s.push_back('a');

else

s.push_back('z');

}

cout << s << '\n';

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}2. Make Three Regions

The given grid contains at most 1 connected region.

There are only 2 rows in this grid.

Imagine a trap.

Since there are only 2 rows in the grid, so all the cell unblocked will have at most 3 adjacent unblocked cells.

In order to make 3 connected components, we should seperate these 3 adjacent unblocked cells into different connected components. So how to do that?

Imagine a "trap" like this:

...

x.xWe can see that if we block the middle cell on the upper row, it will separate into 3 connected components like this:

.x.

x.xIt's the only way to separate legally.

Time complexity: O(n).

# include <iostream>

using namespace std;

const int N = 2e5 + 10;

int n, res;

char ch[2][N];

void run()

{

cin >> n;

cin >> ch[0];

cin >> ch[1];

res = 0;

for (int j = 0; j < 2; j++)

{

for (int i = 1; i < n - 1; i++)

{

if (ch[j][i] == '.')

{

res += (ch[j ^ 1][i - 1] == 'x' and

ch[j ^ 1][i + 1] == 'x' and

ch[j ^ 1][i] == '.' and

ch[j][i - 1] == '.' and

ch[j][i + 1] == '.');

}

}

}

printf("%d\n", res);

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}3. Even positions

It's OK to construct it greedily.

For a RBS s, we define its layer value l as this:

"Layer" stands for "the layer number of brackets pair".

And its prefix value p is this:

Then, it's required to satisfy p_n=0 and \min(p)=0.

If \min(p)<0, it'll no longer be a RBS.

Then, we define the require value r as this:

Note that this s may contains "_".

A legal construction of s satisfies p_i\ge r_i.

Then, for each position i, if inserting an ")" may make p_i<r_i, then insert an "(" instead.

It can be proved that this is the optimal construction method.

Time complexity: O(n).

Fun fact: I got 2 runtime errors during the contest because I set the array size to 10^5 while the problem requires 2\times 10^5.

# include <iostream>

# include <string>

# include <stack>

using namespace std;

const int N = 2e5 + 10;

string s;

int n, ts[N];

stack<int> stk;

using ll = long long;

ll res;

void run()

{

cin >> n >> s;

ts[n] = res = 0;

for (int i = n - 1; ~i; i--)

{

if (s[i] != ')')

{

ts[i] = max(0, ts[i + 1] - 1);

}

else

{

ts[i] = ts[i + 1] + 1;

}

}

for (int i = 0, tmp = 0; i < n; i++)

{

if (s[i] == '(')

{

tmp++;

stk.push(i);

continue;

}

if (s[i] == ')')

{

tmp--;

res += i - stk.top();

stk.pop();

continue;

}

if (tmp - 1 < ts[i + 1] or !stk.size())

{

tmp++;

s[i] = '(';

stk.push(i);

continue;

}

s[i] = ')';

tmp--;

res += i - stk.top();

stk.pop();

}

printf("%lld\n", res);

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}4. Maximize the root

After each operation, the values on all vertices should be non-negative.

Maximize the minimum.

With the formula in hint 3, we can quickly realize that we should make \min_{i=2}^n a_i as big as possible. So how to do that?

Well, the operation that the problem provides is able to add a vertex by subtracting the rest of its subtree. So, in order to maximize the minimum, we can simply make them closer.

If the value of a vertex is already higher than the minimum value of its subtree, we can't get them closer.

Apply a depth-first search to do that.

Time complexity: O(n).

# include <iostream>

# include <vector>

using namespace std;

const int N = 2e5 + 10;

int n, a[N], cont[N];

vector<int> road[N];

void dfs(int x, int f)

{

cont[x] = 0x3f3f3f3f;

for (auto &i : road[x])

{

if (i == f)

continue;

dfs(i, x);

cont[x] = min(cont[x], cont[i]);

}

if (x == 1)

return;

if (cont[x] == 0x3f3f3f3f)

cont[x] = a[x];

else if (cont[x] > a[x])

{

cont[x] = (cont[x] + a[x]) / 2;

}

}

void run()

{

scanf("%d", &n);

for (int i = 1; i <= n; i++)

{

scanf("%d", a + i);

road[i].clear();

}

for (int i = 2, x; i <= n; i++)

{

scanf("%d", &x);

road[x].push_back(i);

}

dfs(1, 0);

printf("%d\n", a[1] + cont[1]);

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}5. Level Up

What will happen when we increase k?

The number of the monsters fleeing won't be higher. Why?

Because, a higher k means the lower expectation level.

Binary search.

With the conclusion in hint 2, we can calculate the Firmness requirement f which satisfies when k\ge f_i, the i-th monster always fights with Monocarp.

If i-th monster fight with Monocarp with a parameter of k, then it must satisfy that

Because when Monocarp already fought a_ik or more monsters before, then his level will be higher than a_i, and the i-th monster will flee.

The formula above is easy to calculate with a BIT.

After calculating the sequence f, we can answer all the queries in a time complexity of O(1).

Time complexity: O(n\log n\log (\max a_i)+q).

Fun fact: I applied an unnecessary sort on the queries. However, it still has a good speed.

# include <iostream>

# include <vector>

# include <tuple>

# include <algorithm>

using namespace std;

const int N = 2e5 + 10;

int a[N], n, q, tr[N], idx = 1, l, r, mid;

vector<int> dst[N];

inline void update(int x, int v)

{

while (x < N)

{

tr[x] += v;

x += (x & -x);

}

}

inline int query(int x)

{

int res = 0;

while (x)

res += tr[x], x -= (x & -x);

return res;

}

inline bool check(int x, int v)

{

return 1ll * a[x] * v <= query(v);

}

using t3i = tuple<int, int, int>;

t3i qry[N];

bool res[N];

int main()

{

scanf("%d%d", &n, &q);

for (int i = 1; i <= n; i++)

{

scanf("%d", a + i);

}

for (int i = 1; i <= q; i++)

{

auto &[x, k, id] = qry[i];

scanf("%d%d", &x, &k);

id = i;

}

sort(qry + 1, qry + q + 1);

for (int i = 1; i <= q; i++)

{

auto &[x, k, id] = qry[i];

while (idx < x)

{

l = 1, r = n;

while (l < r)

{

mid = (l + r) >> 1;

if (check(idx, mid))

l = mid + 1;

else

r = mid;

}

update(l, 1);

idx++;

}

res[id] = !check(x, k);

}

for (int i = 1; i <= q; i++)

{

puts(res[i] ? "YES" : "NO");

}

}#include <iostream>

#include <tuple>

using namespace std;

const int N = 2e5 + 10;

int a[N], n, q, tr[N], idx = 1, l, r, mid, req[N];

inline void update(int x, int v)

{

while (x < N)

{

tr[x] += v;

x += (x & -x);

}

}

inline int query(int x)

{

int res = 0;

while (x)

res += tr[x], x -= (x & -x);

return res;

}

inline bool check(int x, int v)

{

return 1ll * a[x] * v <= query(v);

}

int main()

{

scanf("%d%d", &n, &q);

for (int i = 1; i <= n; i++)

{

scanf("%d", a + i);

}

for (int i = 1; i <= n; i++)

{

l = 1, r = n;

while (l < r)

{

mid = (l + r) >> 1;

if (check(i, mid))

l = mid + 1;

else

r = mid;

}

update(l, 1);

req[i] = l;

}

for (int i = 1, x, k; i <= q; i++)

{

scanf("%d%d", &x, &k);

puts(k < req[x] ? "NO" : "YES");

}

}UPD: The full version is here.

1. Strong Password

It's obvious that the best insertion method is to find two same adjacent characters and add a difference to it, and that increases the time by 3.

If there isn't a pair like that, we can simply add a character different to the back of the string, to increase the time by 2.

2. Make Three Regions

Since there is at most 1 connected component in the input graph, and there are only 2 rows, so a legal construction only acts like this:

.0.

x.xor you can flip it by the x-axis.

3. Even Positions

Define n is size of s, s' is s after restoration, and

Then, for all integers i in range [1,n], p_i shouldn't less than r_i.

So, just greedily construct s' by checking each r_i in time.

4. Maximize the root

The overall strategy is able to be called "maximize the minimum". Apply depth-first search on the tree, and when you finish all "maximize the minimum" for vertex x's childs, if a_x is lower than all a_i where i is in x's subtree and x\neq i, then set a_x to the floor average of a_x and \min(a_i). Otherwise, don't perform operations.

Answer is a_1+\min_{i=2}^n a_i.

5. Level Up

Observed that for each i, there exists a constant c_i that i-th monster always fight with Monocarp when k\ge c_i.

Apply binary search with a BIT to find c_i, which has a time complexity of O(n\log^2 (2\times 10^5)), then we can answer each query in constant time.

Abstract

After I participated in Pinely Round 4 yesterday, my rating dropped down a lot.

BUT, I learnt something useful.

1. Check your initialization — AND Reconstruction

After I solved A in a minute and saw the statement of B, I quickly realized that there's an efficient construction which is simply set a_i=b_i|b_{i-1}. The code is below.

#include <iostream>

using namespace std;

const int N = 1e5 + 10;

int n, a[N], b[N];

void run()

{

scanf("%d", &n);

for (int i = 1; i < n; i++)

{

scanf("%d", b + i);

}

for (int i = 1; i <= n; i++)

{

a[i] = (b[i] | b[i - 1]);

}

for (int i = 1; i < n; i++)

{

// printf("%d %d\n", (a[i] & a[i + 1]), b[i]);

if ((a[i] & a[i + 1]) != b[i])

return puts("-1"), void();

}

for (int i = 1; i <= n; i++)

{

printf("%d%c", a[i], " \n"[i == n]);

}

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}Can you recognize what's wrong with the code?

In fact, we get b_1 to b_{n-1} from input. However, the code above used b_n to calculate a_n, and it's not given. If the former n is bigger than the current n, the b_n won't be zero, and the program will output a wrong a_n. To resolve this, just set b_n to zero before the construction.

2. It's never guaranteed that former problems are easier than others — Absolute Zero

40 minutes after I submitted the second wrong submission of B, I gave up and came to check C.

And, literally the moment I understood the problem statement, I came up with an efficient solution.

In each of the k operations, sort a, then set x=\lceil a_1+a_n\rceil. If the legal operation sequence exists, my method always reduce \max(a)-\min(a) for a half.

So I quickly implemented my method successfully, and got accepted. However, it was already an hour from the beginning, so I lost a lot of points.

3. Think about the probability that you're rickrolled by the problem when you struggled solving it — Prime XOR Coloring

From I solved C to the end of the contest, I struggled solving D because I believed that it was easier to solve D than to solve B (because I didn't find that silly bug). I tried to find a pattern what a legal construction has, but I failed. Because I tried to make every color as small as possible.

However, I forgot that it's not necessary to make every color the smallest. Making the number of colors smallest is enough. And in the editorial, the proposer told us, "Why not try to make all \operatorname{xor} values of the positions with same color an composite number?"

In fact, the sample given in the problem statements is already a hint. It hints us to think about 4. As we know, (4)_{10}=(100)_2. There's only one 1 in its binary expression. If we make sure that 2 lowest bits of two different positions with a same color are always the same, then their \operatorname{xor} value is always divisible by 4, which is a composite number.

Then, for n\ge 6, we can simply set c_i=i\bmod 4+1.

What a rickrolling problem, but I like it!

4. Maybe the answer resides in the boundary condition — Coloring Game

Since I spent all the rest of the time solving D, I only took a look at E and had no idea.

The statement says Alice chooses two different colors each time. So, in order to decrease the probability of Bob winning, she can only choose two colors all the time.

And in this worst case, Bob is possible to win only when the graph is a bipartite graph, which is able to be colored by only two colors.

So the instruction to win is obvious. If the graph is a bipartite graph, choose Bob, since he can always win with at least 2 colors. Otherwise, choose Alice, since only a bipartite graph can be colored by only two colors, and it's impossible for Bob to find a legal coloring.

5. Range queries may not lead to a range data structure such as Segment Tree — Triangle Formation

When I took my first look at this problem, I thought about segment tree. However, it's unnecessary in this problem because the densiest sequence ("densiest" means the smallest maximum value) with no non-degenerate triangle formable is the fibonacci sequence. If you do a simple implementation, you'll discover that the 45-th term of that sequence already exceeds 10^9. So, under the constraint, a subsequence of a with a size not less than 45 always generates a non-degenerate triangle.

But the problem asks us to make 2 non-degenerate triangle. How can we do that? In fact, we can simply add 3 more numbers into that sequence. because after we extract one of the non-degenerate triangles, there are still 45 elements in it, we can still extract another one.

For those subsequences with less than 45 elements? Well, since it's obviously easier to get a non-degenerate triangle with closer elements if we sort that sequence, we can forcefully search all the possible combinations in subintervals in the sorted subsequence with a size of 6, or all the consecutive subintervals with no intersections and a size of 3.

But, if you do that without optimizations, you will get a TLE.

So how to optimize?

Add break to your code, as many as you can.

#include <iostream>

#include <algorithm>

#include <vector>

using namespace std;

const int N = 1e5 + 10, E = 1e9, idx = 48;

int prec[N], n, q, a[N], b[N], rs;

bool fl;

vector<int> vec;

bool check(int p, int x, int y, int z)

{

vec.clear();

for (int i = 0; i < 6; i++)

{

if (i != x and i != y and i != z)

vec.push_back(p + i);

}

return b[p + x] + b[p + y] > b[p + z] and b[vec[0]] + b[vec[1]] > b[vec[2]];

}

void run()

{

scanf("%d%d", &n, &q);

for (int i = 1; i <= n; i++)

{

scanf("%d", a + i);

}

for (int t = 1, x, y; t <= q; t++)

{

scanf("%d%d", &x, &y);

if (y - x + 1 >= idx)

{

puts("YES");

continue;

}

for (int j = x; j <= y; j++)

{

b[j - x] = a[j];

}

sort(b, b + y - x + 1);

fl = false;

for (int i = 0; i < y - x - 4; i++)

{

for (int j = 0; j < 6; j++)

{

for (int k = j + 1; k < 6; k++)

{

for (int l = k + 1; l < 6; l++)

{

fl |= check(i, j, k, l);

if (fl or b[i + j] + b[i + k] <= b[i + l])

break;

}

if (fl)

break;

}

if (fl)

break;

}

if (fl)

break;

}

if (fl)

{

puts("YES");

continue;

}

rs = 0;

for (int j = 2; j < y - x + 1; j++)

{

if (b[j] < b[j - 1] + b[j - 2])

rs++, j += 2;

}

puts(rs > 1 ? "YES" : "NO");

}

}

int main()

{

int T = 1;

// scanf("%d", &T);

while (T--)

run();

}0. Feeling

Welcome to the easiest Div.1 + Div.2 ever.

Got accepted on ABCDE.

And there's also a version in Chinese here.

1. Diverse Game

Given a permutation with a size of nm, construct another permutation with a size of nm satisfies that it's different to the previous permutation in each position.

a_i=a_i\bmod nm+1. The time complexity is O(nm).

#include <iostream>

using namespace std;

const int N = 5e4 + 10;

int n, m;

void run()

{

scanf("%d%d", &n, &m);

if (n == 1 and m == 1)

{

scanf("%*d");

puts("-1");

return;

}

for (int i = 1, x; i <= n; i++)

{

for (int j = 1; j <= m; j++)

{

scanf("%d", &x);

printf("%d%c", x % (n * m) + 1, " \n"[j == m]);

}

}

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}2. Fun Game

Given two binary sequences a and b,unlimited operation to make from a_l to a_r simultaneously xor a_1 to a_{r-l+1}. Determine if it's able to turn a into b.

Because I was watching Zenless Zone Zero videos on Bilibili, I suddenly realized that, it's able to consider the prefix zeros in a and b as "hollow". Because, under the constriants of the problem, regardless how many operations you do, the size of their "hollow" won't decrease.

With only one 1, we can freely modify the following zeros and ones.

Only one line of code to solve this problem. The time complexity is O(n).

#include <iostream>

#include <string>

using namespace std;

const int N = 1e5 + 10;

int n;

string s, t;

void run()

{

cin >> n >> s >> t;

puts(s.find('1') <= t.find('1') ? "YES" : "NO");

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}3. Hungry Games

Too lazy to write the statement again.

Answer = total number of possible ranges — ranges with final toxicity of 0.

Looks like topological sort.

Define d_i as the number of left borders j when set i as the right border satisfying the final toxicity of the range [j,i] equals zero.

When enumerating i, find the first t safisfies \sum_{j=i}^t a_j>x, add d_i+1 to d_t. because, d_i ranges satisfying the final toxicity equals zero, and the range [i,t] also makes zero, it's able to combine these two ranges together, that the final toxicity is still zero.

The time complexity is O(n).

#include <iostream>

#include <cstring>

using namespace std;

const int N = 2e5 + 10;

using ll = long long;

int n, k, r;

ll a[N], dp[N], cur, res;

void run()

{

scanf("%d%d", &n, &k);

for (int i = 1; i <= n; i++)

{

scanf("%lld", a + i);

}

memset(dp + 2, 0, n << 3);

cur = 0;

r = 1;

for (int i = 1; i <= n; i++)

{

cur -= a[i - 1];

while (r <= n and cur <= k)

cur += a[r], r++;

if (cur <= k)

continue;

dp[r] += dp[i] + 1;

}

res = n;

res *= n + 1;

res /= 2;

for (int i = 2; i <= n + 1; i++)

{

res -= dp[i];

}

printf("%lld\n", res);

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}4. Funny Game

In a complete graph with n nodes, each node has a value a_i. The weight of the edge connecting node i and j is |a_i-a_j|. Find a spanning tree in this graph satisfying i divides the weight of the i-th edge.

I didn't realize the pigeonhole here, so I had a different approach.

A smaller i has an expectational bigger number of edges satisfying i divides its weight.

So, enumerate i in decreasing order, then use union-find to handle connected components.

The time complexity is O(n^2).

#include <iostream>

#include <list>

#include <cstring>

using namespace std;

const int N = 2e3 + 10;

int n, a[N], f[N], v[N], p;

bool fl;

using pii = pair<int, int>;

list<pii> ret;

inline int find(int x)

{

return x == f[x] ? x : f[x] = find(f[x]);

}

inline void merge(int x, int y)

{

f[find(y)] = find(x);

}

void run()

{

scanf("%d", &n);

for (int i = 1; i <= n; i++)

{

f[i] = i;

scanf("%d", a + i);

}

ret.clear();

for (int i = n - 1; i; i--)

{

memset(v, 0, i << 2);

fl = false;

for (int j = 1; j <= n; j++)

{

p = a[j] % i;

if (!v[p])

{

v[p] = j;

continue;

}

if (find(j) == find(v[p]))

continue;

merge(j, v[p]);

ret.push_front({j, v[p]});

fl = true;

break;

}

if (!fl)

return puts("NO"), void();

}

puts("YES");

for (auto &[tx, ty] : ret)

printf("%d %d\n", tx, ty);

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}5. Wooden Game

Too lazy to rewrite the statement again.

The contribution of a tree with a size of x is able to be any integer in range [1,x], regardless its structure, because we can remove its leaves one by one.

When I realized that, I f**ked up.

The time complexity is O(n\log n).

#include <iostream>

#include <vector>

#include <algorithm>

using namespace std;

const int N = 1e6 + 10;

int n, a[N], t, res;

void run()

{

scanf("%d", &n);

for (int i = 1; i <= n; i++)

{

scanf("%d", a + i);

for (int j = 1; j < a[i]; j++)

scanf("%*d");

}

sort(a + 1, a + n + 1);

t = 1;

for (; t < a[n]; t = t * 2 + 1)

;

res = 0;

for (int i = n; i; i--)

{

while ((t > a[i]) or (t & res))

t--;

res |= t;

}

printf("%d\n", res);

}

int main()

{

int T = 1;

scanf("%d", &T);

while (T--)

run();

}As you know, in this reason, 64-bit C++ compilers are temporarily disabled.

In order to keep using __int128 (at least partially) in 32-bit compilers, I'm trying writing a template of the unsigned version of __int128.

On 2024/3/15, I completed writing the alpha version of it.

If you find bugs in my code, please leave a comment below. Thanks!

UPD:

2024/3/14: added (maybe) all operators that an __int128 has except for operator/ / fixed infinite-recursion bug in several operators

2024/3/15: added operator/ / fixed many bugs in almost all arithmetic operators / completed first (buggy) version of this handmade __int128

Here's it:

#include<tuple>

#include<iostream>

#include<climits>

namespace RedshiftShine{

using ll=unsigned long long;

const ll ulmx=ULLONG_MAX;

const ll shift=64;

ll min(ll x,ll y){

return x<y?x:y;

}

ll max(ll x,ll y){

return x>y?x:y;

}

bool detect_overflow_add(ll x,ll y){

return x+y<min(x,y);

}

bool detect_overflow_minus(ll x,ll y){

return x<y;

}

class Custom_Unsigned_int128{

private:

ll higherInt,lowerInt;

public:

Custom_Unsigned_int128(){}

template<typename _Tp>

Custom_Unsigned_int128(_Tp x):

higherInt(0),

lowerInt(x){}

template<typename _Tp>

Custom_Unsigned_int128(_Tp x,_Tp y):

higherInt(x),

lowerInt(y){}

Custom_Unsigned_int128

(const Custom_Unsigned_int128& ele):

higherInt(ele.higherInt),

lowerInt(ele.lowerInt){}

std::pair<ll,ll>

base_access(){

return std::pair<ll,ll>{

higherInt,

lowerInt

};

}

Custom_Unsigned_int128&

operator=(const Custom_Unsigned_int128& x){

higherInt=x.higherInt,

lowerInt=x.lowerInt;

return *this;

}

template<typename _Tp>

Custom_Unsigned_int128&

operator=(const _Tp& x){

*this=Custom_Unsigned_int128

(

0,x

);

return *this;

}

template<typename _Tp>

Custom_Unsigned_int128

operator+(const _Tp& x){

return Custom_Unsigned_int128

(

higherInt+

detect_overflow_add(lowerInt,x),

lowerInt+x

);

}

template<typename _Tp>

friend Custom_Unsigned_int128

operator+(const _Tp& x,const Custom_Unsigned_int128& ele){

return Custom_Unsigned_int128

(

ele.higherInt+

detect_overflow_add(ele.lowerInt,x),

ele.lowerInt+x

);

}

Custom_Unsigned_int128

operator+(const Custom_Unsigned_int128& x){

return Custom_Unsigned_int128

(

higherInt+x.higherInt+

detect_overflow_add(lowerInt,x.lowerInt),

lowerInt+x.lowerInt

);

}

template<typename _Tp>

bool

operator==(const _Tp& x)const{

return

(

!higherInt and

lowerInt==x

);

}

template<typename _Tp>

friend bool

operator==(const _Tp& x,const Custom_Unsigned_int128& ele){

return ele==x;

}

bool

operator==(const Custom_Unsigned_int128& x)const{

return

(

higherInt==x.higherInt and

lowerInt==x.lowerInt

);

}

template<typename _Tp>

bool

operator!=(const _Tp& x)const{

return !(*this==x);

}

template<typename _Tp>

friend bool

operator!=(const _Tp& x,const Custom_Unsigned_int128& ele){

return !(ele==x);

}

template<typename _Tp>

bool

operator<(const _Tp& x)const{

return

(

!higherInt and

lowerInt<x

);

}

template<typename _Tp>

friend bool

operator<(const _Tp& x,const Custom_Unsigned_int128& ele){

return

(

ele.higherInt or

ele.lowerInt>x

);

}

bool

operator<(const Custom_Unsigned_int128& x)const{

return

(

higherInt<x.higherInt or

(

higherInt==x.higherInt and

lowerInt<x.lowerInt

)

);

}

template<typename _Tp>

bool

operator<=(const _Tp& x)const{

return

(

*this<x or

*this==x

);

}

template<typename _Tp>

friend bool

operator<=(const _Tp& x,const Custom_Unsigned_int128& ele){

return ele<=x;

}

bool

operator<=(const Custom_Unsigned_int128& x)const{

return

(

*this<x or

*this==x

);

}

template<typename _Tp>

bool

operator>(const _Tp& x)const{

return

!(

*this<=x

);

}

template<typename _Tp>

friend bool

operator>(const _Tp& x,const Custom_Unsigned_int128& ele){

return !(ele<=x);

}

bool

operator>(const Custom_Unsigned_int128& x)const{

return

!(

*this<=x

);

}

template<typename _Tp>

bool

operator>=(const _Tp& x)const{

return

!(

*this<x

);

}

template<typename _Tp>

friend bool

operator>=(const _Tp& x,const Custom_Unsigned_int128& ele){

return !(ele<x);

}

bool

operator>=(const Custom_Unsigned_int128& x)const{

return

!(

*this<x

);

}

template<typename _Tp>

Custom_Unsigned_int128&

operator+=(const _Tp& x){

return *this=*this+x;

}

template<typename _Tp>

Custom_Unsigned_int128

operator-(const _Tp& x)const{

return Custom_Unsigned_int128

(

higherInt-

detect_overflow_minus(lowerInt,x),

lowerInt-x

);

}

Custom_Unsigned_int128

operator-(const Custom_Unsigned_int128& x)const{

return Custom_Unsigned_int128

(

higherInt-x.higherInt-

detect_overflow_minus(lowerInt,x.lowerInt),

lowerInt-x.lowerInt

);

}

template<typename _Tp>

Custom_Unsigned_int128&

operator-=(const _Tp& x){

return *this=*this-x;

}

template<typename _Tp>

Custom_Unsigned_int128

operator&(const _Tp& x){

return Custom_Unsigned_int128

(

0ull,

lowerInt&x

);

}

template<typename _Tp>

Custom_Unsigned_int128

friend operator&(const _Tp& x,const Custom_Unsigned_int128& ele){

return Custom_Unsigned_int128

(

ele.higherInt,

ele.lowerInt&x

);

}

Custom_Unsigned_int128

operator&(const Custom_Unsigned_int128& x){

return Custom_Unsigned_int128

(

higherInt&x.higherInt,

lowerInt&x.lowerInt

);

}

template<typename _Tp>

Custom_Unsigned_int128&

operator&=(const _Tp& x){

return *this=*this&x;

}

template<typename _Tp>

Custom_Unsigned_int128

operator|(const _Tp& x){

return Custom_Unsigned_int128

(

higherInt,

lowerInt|x

);

}

template<typename _Tp>

Custom_Unsigned_int128

friend operator|(const _Tp& x,const Custom_Unsigned_int128& ele){

return Custom_Unsigned_int128

(

ele.higherInt,

ele.lowerInt|x

);

}

Custom_Unsigned_int128

operator|(const Custom_Unsigned_int128& x){

return Custom_Unsigned_int128

(

higherInt|x.higherInt,

lowerInt|x.lowerInt

);

}

template<typename _Tp>

Custom_Unsigned_int128&

operator|=(const _Tp& x){

return *this=*this|x;

}

template<typename _Tp>

Custom_Unsigned_int128

operator^(const _Tp& x){

return Custom_Unsigned_int128

(

higherInt,

lowerInt^x

);

}

template<typename _Tp>

Custom_Unsigned_int128

friend operator^(const _Tp& x,const Custom_Unsigned_int128& ele){

return Custom_Unsigned_int128

(

ele.higherInt,

ele.lowerInt^x

);

}

Custom_Unsigned_int128

operator^(const Custom_Unsigned_int128& x){

return Custom_Unsigned_int128

(

higherInt^x.higherInt,

lowerInt^x.lowerInt

);

}

template<typename _Tp>

Custom_Unsigned_int128&

operator^=(const _Tp& x){

return *this=*this^x;

}

Custom_Unsigned_int128

operator~(){

return Custom_Unsigned_int128(

~higherInt,

~lowerInt

);

}

template<typename _Tp>

Custom_Unsigned_int128

operator<<(const _Tp& x){

return x<shift?

Custom_Unsigned_int128(

higherInt<<x|(lowerInt>>(shift-x)),

lowerInt<<x

):

Custom_Unsigned_int128(

lowerInt<<(x-shift),

0ull

);

}

template<typename _Tp>

Custom_Unsigned_int128&

operator<<=(const _Tp& x){

return *this=*this<<x;

}

template<typename _Tp>

Custom_Unsigned_int128

operator>>(const _Tp& x){

return x<shift?

Custom_Unsigned_int128(

higherInt>>x,

lowerInt>>x|(higherInt<<(shift-x))

):

Custom_Unsigned_int128(

0ull,

higherInt>>(x-shift)

);

}

template<typename _Tp>

Custom_Unsigned_int128&

operator>>=(const _Tp& x){

return *this=*this>>x;

}

template<typename _Tp>

Custom_Unsigned_int128

operator*(const _Tp& x)const{

Custom_Unsigned_int128 ret(0),pr(*this);

_Tp tm(x);

for(;tm;tm>>=1){

if(tm&1)ret+=pr;

pr<<=1;

}

return ret;

}

template<typename _Tp>

Custom_Unsigned_int128

friend operator*(const _Tp& x,const Custom_Unsigned_int128& ele){

Custom_Unsigned_int128 ret(0),pr(ele);

_Tp tm(x);

for(;tm!=0;tm>>=1){

if((tm&1)!=0)ret+=pr;

pr+=pr;

}

return ret;

}

template<typename _Tp>

Custom_Unsigned_int128&

operator*=(const _Tp& x){

return *this=*this*x;

}

template<typename _Tp>

Custom_Unsigned_int128

operator/(const _Tp& x)const{

Custom_Unsigned_int128 ret(0),pr(x),trm(1),ts(*this);

while((!(pr.higherInt>>(shift-1))) and pr<=ts){

pr<<=1,trm<<=1;

}

pr>>=1,trm>>=1;

for(;;pr>>=1,trm>>=1){

// if(ts<pr)continue;

while(ts>=pr){

ts-=pr,ret+=trm;

}

if((pr&1)!=0)break;

}

return ret;

}

template<typename _Tp>

Custom_Unsigned_int128&

operator/=(const _Tp& x){

return *this=*this/x;

}

template<typename _Tp>

_Tp

operator%(const _Tp& x)const{

return (*this-*this/x*x).lowerInt;

}

Custom_Unsigned_int128

operator%(const Custom_Unsigned_int128& x)const{

return *this-*this/x*x;

}

template<typename _Tp>

Custom_Unsigned_int128&

operator%=(const _Tp& x){

return *this=*this%x;

}

};

}

void print(RedshiftShine::Custom_Unsigned_int128 v){

if(v==0)return;

print(v/10);

putchar(v%10+'0');

}

void test(){

RedshiftShine::Custom_Unsigned_int128 ci(0,1),cz(0,1);

print(ci),putchar(' ');

for(int i=128;i;i--){

std::swap(ci,cz);

cz+=ci;

print(cz),putchar(' ');

}

}

int main(){

test();

}Abstract

After I post some wrong advice and got a number of downvotes, I came up with this idea to recollect some upvotes. When I heard that Luogu will release their international version with problem statements translated with LLM, I imagined how would these models translate them.

Abstract

Suspend participating in Codeforces Rounds for a long time (at least a few months). During this time, try to participate in contests on other websites (AtCoder/Luogu/CodeChef or other). After that, when you participate in Codeforces Rounds again, your rating will surprisingly increase (at least for me). Here's why.

Abstract

Never use reference value on std::priority_queue, otherwise it will be affected when you push in something "greater" than it.

Abstract

- Never apply a binary search on

std::list. - If you want to make a custom

_Compfunction forstd::set, write down as many instructions to distinguish elements in the set as possible.

There are 3 unrated contests in AtCoder after I registered my account

| Name |

|---|