Edit: More dp tasks have been added to CSES since this blog was created. For solutions to those problems, check out UnexpectedValue's blog here.

I'm using bottom-up implementations and pull dp when possible. Pull dp is when we calculate each dp entry as a function of previously calculated dp entries. This is the way used in recursion / memoization. The other alternative would be push dp, where we update future dp entries using the current dp entry.

I think CSES is a nice collection of important CP problems, and would like it to have editorials. Without editorials users will get stuck on problems, and give up without learning the solution. I think this slows down learning significantly compared to solving problems with editorials. Therefore, I encourage others who want to contribute, to write editorials for other sections of CSES.

Feel free to point out mistakes.

Dice Combinations (1633)

dp[x] = number of ways to make sum x using numbers from 1 to 6.

Sum over the last number used to create x, it was some number between 1 and 6. For example, the number of ways to make sum x ending with a 3 is dp[x-3]. Summing over the possibilities gives dp[x] = dp[x-1] + dp[x-2] + dp[x-3] + dp[x-4] + dp[x-5] + dp[x-6].

We initialize by dp[0] = 1, saying there is one way with sum zero (the empty set).

The complexity is $$$O(n)$$$.

Minimizing Coins (1634)

This is a classical problem called the unbounded knapsack problem.

dp[x] = minimum number of coins with sum x.

We look at the last coin added to get sum x, say it has value v. We need dp[x-v] coins to get value x-v, and 1 coin for value v. Therefore we need dp[x-v]+1 coins if we are to use a coin with value v. Checking all possibilities for v must include the optimal choice of last coin.

As an implementation detail, we use dp[x] = 1e9 = $$$10^9 \approx \infty$$$ to signify that it is not possible to make value x with the given coins.

The complexity is $$$O(n\cdot target)$$$.

Coin Combinations I (1635)

This problem has a very similar implementation to the previous problem.

dp[x] = number of ways to make value x.

We initialize dp[0] = 1, saying the empty set is the only way to make 0.

Like in "Minimizing Coins", we loop over the possibilities for last coin added. There are dp[x-v] ways to make x, when adding a coin with value v last. This is since we can choose any combination for the first coins to sum to x-v, but need to choose v as the last coin. Summing over all the possibilities for v gives dp[x].

The complexity is $$$O(n\cdot target)$$$.

Coin Combinations II (1636)

dp[i][x] = number of ways to pick coins with sum x, using the first i coins.

Initially, we say we have dp[0][0] = 1, i.e we have the empty set with sum zero.

When calculating dp[i][x], we consider the i'th coin. Either we didn't pick the coin, then there are dp[i-1][x] possibilities. Otherwise, we picked the coin. Since we are allowed to pick it again, there are dp[i][x — <value of i'th coin>] possibilities (not dp[i-1][x — <value of i'th coin>] possibilities).

Because we consider the coins in order, we will only count one order of coins. This is unlike the previous task, where we considered every coin at all times.

The complexity is $$$O(n\cdot target)$$$.

Removing Digits (1637)

dp[x] = minimum number of operations to go from x to zero.

When considering a number x, for each digit in the decimal representation of x, we can try to remove it. The transition is therefore: dp[x] = $$$\min_{d \in digits(x)}$$$ dp[x-d].

We initialize dp[0] = 0.

The complexity is $$$O(n)$$$.

Note that the greedy solution of always subtracting the maximum digit is also correct, but we are practicing DP :)

Grid Paths (1638)

dp[r][c] = number of ways to reach row r, column c.

We say there is one way to reach (0,0), dp[0][0] = 1.

When we are at some position with a ., we came either from the left or top. So the number of ways to get to there is the number of ways to get to the position above, plus the number of ways to get to the position to the left. We also need to make sure that the number of ways to get to any position with a # is 0.

The complexity is $$$O(n^2)$$$, so linear in the number of cells of input.

Book Shop (1158)

This is a case of the classical problem called 0-1 knapsack.

dp[i][x] = maximum number of pages we can get for price at most x, only buying among the first i books.

Initially dp[0][x] = 0 for all x, as we can't get any pages without any books.

When calculating dp[i][x], we look at the last considered book, the i'th book. We either didn't buy it, leaving x money for the first i-1 books, giving dp[i-1][x] pages. Or we bought it, leaving x-price[i-1] money for the other i-1 books, and giving pages[i-1] extra pages from the bought book. Thus, buying the i'th book gives dp[i-1][x-price[i-1]] + pages[i-1] pages.

The complexity is $$$O(n\cdot x)$$$.

Array Description (1746)

dp[i][v] = number of ways to fill the array up to index i, if x[i] = v.

We treat i = 0 separately. Either x[0] = 0, so we can replace it by anything (i.e dp[0][v] = 1 for all v). Otherwise x[0] = v $$$\ne$$$ 0, so that dp[0][v] = 1 is the only allowed value.

Now to the other indices i > 0. If x[i] = 0, we can replace it by any value. However, if we replace it by v, the previous value must be either v-1, v or v+1. Thus the number of ways to fill the array up to i, is the sum of the previous value being v-1, v and v+1. If x[i] = v from the input, only dp[i][v] is allowed (i.e dp[i][j] = 0 if j $$$\ne$$$ v). Still dp[i][v] = dp[i-1][v-1] + dp[i-1][v] + dp[i-1][v+1].

The complexity is $$$O(n\cdot m)$$$ with worst-case when x is all zeros.

Edit Distance (1639)

This is a classic problem called edit distance.

We call the input strings a and b, and refer to the first i characters of a by a[:i].

dp[i][k] = minimum number of moves to change a[:i] to b[:k].

When we calculate dp[i][k], there are four possibilities to consider for the rightmost operation. We check all of them and take the cheapest one.

1. We deleted character a[i-1]. This took one operation, and we still need to change a[:i-1] to b[:k]. So this costs 1 + dp[i-1][k] operations.

2. We added character b[k-1] to the end of a[:i]. This took one operation, and we still need to change a[:i] to b[:k-1]. So this costs 1 + dp[i][k-1] operations.

3. We replaced a[i-1] with b[k-1]. This took one operation, and we still need to change a[:i-1] to b[:k-1]. So this costs 1 + dp[i-1][k-1] operations.

4. a[i-1] was already equal to b[k-1], so we just need to change a[:i-1] to b[:k-1]. That takes dp[i-1][k-1] operations. This possibility can be viewed as a replace operation where we don't actually need to replace a[i-1].

The complexity is $$$O(|a|\cdot |b|)$$$.

Rectangle Cutting (1744)

dp[w][h] = minimum number of cuts needed to cut a w x h piece into squares.

Consider a $$$w \times h$$$ piece. If it is already square (w = h), we need 0 cuts. Otherwise, we need to make the first cut either horizontally or vertically. Say we make it horizontally, then we can cut at any position 1,2,..,h-1. If we cut at position k, then we are left with two pieces of sizes $$$w \times k$$$ and $$$w \times h-k$$$. We can look up the number of moves to reduce these to squares in the dp array. We loop over all possibilities k and take the best one. Similarly for vertical cuts.

The complexity is $$$O(a^2\cdot b + a\cdot b^2)$$$.

Money Sums (1745)

This is a case of the classical problem called 0-1 knapsack.

dp[i][x] = true if it is possible to make x using the first i coins, false otherwise.

It is possible to make x with the first i coins, if either it was possible with the first i-1 coins, or we chose the i'th coin, and it was possible to make x — <value of i'th coin> using the first i-1 coins.

Note that we only need to consider sums up to 1000 $$$\cdot$$$ n, since we can't make more than that using n coins of value $$$\le$$$ 1000.

The complexity is $$$O(n^2\cdot \max x_i)$$$.

Removal Game (1097)

The trick here is to see that since the sum of the two players' scores is the sum of the input list, player 1 tries to maximize $$$score_1-score_2$$$, while player 2 tries to minimize it.

dp[l][r] = difference$$$score_1-score_2$$$if considering the game played only on interval [l, r].

If the interval contains only one element (l = r), then the first player must take that element. So dp[i][i] = x[i].

Otherwise, player 1 can choose to take the first element or the last element. If he takes the first element, he gets x[l] points, and we are left with the interval [l+1,r], but with player 2 starting. $$$score_1-score_2$$$ on interval [l+1,r] is just dp[l+1][r] if player 1 starts. Since player 2 starts, it is -dp[l+1][r]. Thus, the difference of scores will be x[l]-dp[l+1][r] if player 1 chooses the first element. Similarly, it will be x[r]-dp[l][r-1] if he chooses the last element. He always chooses the maximum of those, so dp[l][r] = max(x[l]-dp[l+1][r], x[r]-dp[l][r-1]).

In this problem dp[l][r] depends on dp[l+1][r], and therefore we need to compute larger l before smaller l. We do it by looping through l from high to low. r still needs to go from low to high, since we depend only on smaller r (dp[l][r] depends on dp[l][r-1]). Note that in all the other problems in this editorial, dp only depends on smaller indices (like dp[x] depending on dp[x-v], or dp[i][x] depending on dp[i-1][x]), which means looping through indices in increasing order is correct.

We can reconstruct the score of player 1 as the mean of, the sum of all input values, and $$$score_1-score_2$$$.

The complexity is $$$O(n^2)$$$.

Two Sets II (1093)

This is a 0-1 knapsack in disguise. If we are to have two subsets of equal sum, they must sum to half the total sum each. This means if the total sum $$$\frac{n(n+1)}{2}$$$ is odd, the answer is zero (no possibilities). Otherwise we run 0-1 knapsack to get the number of ways to reach $$$\frac{n(n+1)}{4}$$$ using subsets of the numbers 1..n-1. Why n-1? Because by only considering numbers up to n-1, we always put n in the second set, and therefore only count each pair of sets once (otherwise we count every pair of sets twice).

dp[i][x] = number of ways to make sum x using subsets of the numbers 1..i .

We say there is one way (the empty set) to make sum 0, so dp[0][0] = 1;

For counting number of ways to make sum x using values up to i, we consider the number i. Either we didn't include it, then there are dp[i-1][x] possibilities, or we included it, and there are dp[i-1][x-i] possibilities. So dp[i][x] = dp[i-1][x] + dp[i-1][x-i].

The complexity is $$$O(n^3)$$$.

Increasing Subsequence (1145)

This is a classical problem called Longest Increasing Subsequence or LIS for short.

dp[x] = minimum ending value of an increasing subsequence of length x+1, using the elements considered so far.

We add elements one by one from left to right. Say we want to add a new value v. For this to be part of an increasing subsequence, the previous value in the subsequence must be lower than v. We might as well take the maximum length subsequence leading up to v, as the values don't matter for the continuation to the right of v. Therefore we need to extend the current longest increasing subsequence ending in a value less than v. This means we want to find the rightmost element in the dp array (as the position corresponds to the length of the subsequence), with value less than v. Say it is at position x. We can put v as a new candidate for ending value at position x+1 (since we have an increasing subsequence of length x+1 + 1, which ends on v). Note that since x was the rightmost position with value less than v, changing dp[x+1] to v can only make the value smaller (better), so we can always set dp[x+1] = v without checking if it is an improvement first.

Naively locating the position x with a for loop gives complexity $$$O(n^2)$$$. However, dp is always an increasing array. So we can locate x position by binary search (std::lower_bound in C++ directly gives position x+1).

The final answer is the length of the dp array after considering all elements.

The complexity is $$$O(n\cdot \log n)$$$.

In this task we were asked to find the longest strictly increasing subsequence. To find the longest increasing subsequence where we allow consecutive equal values (for example 1,2,2,3), change lower_bound to upper_bound.

Projects (1140)

Even though days can go up to $$$10^9$$$, we only care about days where we either start or just finished a project. So before doing anything else, we compress all days to their index among all interesting days (i.e days corresponding to $$$a_i$$$ or $$$b_i+1$$$ for some i). This way, days range from 0 to less than $$$2 n \le 4\cdot 10^5$$$.

dp[i] = maximum amount of money we can earn before day i.

On day i, maybe we just did nothing, so we earn what we earned on day i-1, i.e dp[i-1]. Otherwise, we just finished some project. We earned some money doing the project, and use dp[start of project] to know how much money we could have earned before starting the project. Loop through all projects finishing just before day i, and take the best one.

The complexity is $$$O(n\cdot \log n)$$$, log comes from day compression.

Hello. Thanks for editorial. Do you have links to above problems or where I can submit them. I want to try all of them as they look good from IPOV.

https://cses.fi/problemset/

Cool, thanks. I already recommend CSES problem set to some people and I will now link to this blog for dp. Just next time consider using names like

waysorbest_scorein the future instead ofdpin every single problem — if you want to then publish your codes to help others.No, as a beginner, I prefer the editorials to use dp everywhere instead of having different names. We can analyze and understand which quantity has to be taken as the dp ourselves. Every question looks new if we use different names everywhere — realizing the underlying concept is the same becomes harder.

No, as another beginner who's newer than you, I definitely agree with Errichto. Descriptive names are essential for code readability.

the beginner whos newer surpassed the og beginner, damn

In my opinion, open editorials to CSES problems (with code!) goes against the spirit of the platform. Not providing solutions or access to accepted submissions must have been a conscious decision by the maintainers.

I thought about that, and therefore asked pllk for permission before writing the editorial. He encourages editorials for the problems. However, he mentioned that it might cause some people to read the editorial without thinking about the problem.

meooow I think you are wrong here. I agree one should think before rushing to editorials but having no editorials forces one to leave the question without any further learning and development.

Thanks to icecuber who invested his precious time in writing some editorials and pllk for allowing it.

Having no editorial is not enough reason to leave a problem. There are many avenues to ask for help once you have truly exhausted all approaches you can think of.

However, since pllk approves of this, my point of complaint isn't valid.

Can you kindly suggest some of the many avenues you mention of? And are they more effective and efficient than having the editorial at your disposal?

Asking for help FAQ

Is the answer you get by asking for help going to be better than an editorial? Maybe not.

Is the absence of an editorial going to make you work harder to solve the problem? Absolutely yes.

Please do not think that I am preaching for a world with no editorials. It is just that the design of the CSES problemset implies that it is meant to solved by putting in maximum individual effort.

Well, I believe that every problem should have an editorial. Let's say I put in 2 days worth of effort solving a problem without making any real progress then an editorial guarantees me closure for my efforts. On the other hand, asking help from others doesn't provide me that certainty. Especially for the guys like me who don't have coding circles where we can ask for help from friends. Cyans(or lower rating) people asking for help from a random person usually gets ignored. That's just what I have observed and my opinion.

Idk, I usually answer people that ask nicely. Show the problem source and more people might help.

Thanks for this. I love the work here. CSES needs more editorials like this; sometimes even just stalking people's code isn't enough to understand what's going on. I hope you keep going with this too.

How to see other peoples submission in CSES.fi ?

I meant looking at people’s GitHubs or something like that since you can’t view other solutions until you solve it yourself

Thanks to this blog, I go back to my account on CSES problem set. I found out that I didn't solve 2 problems in DP section (Got

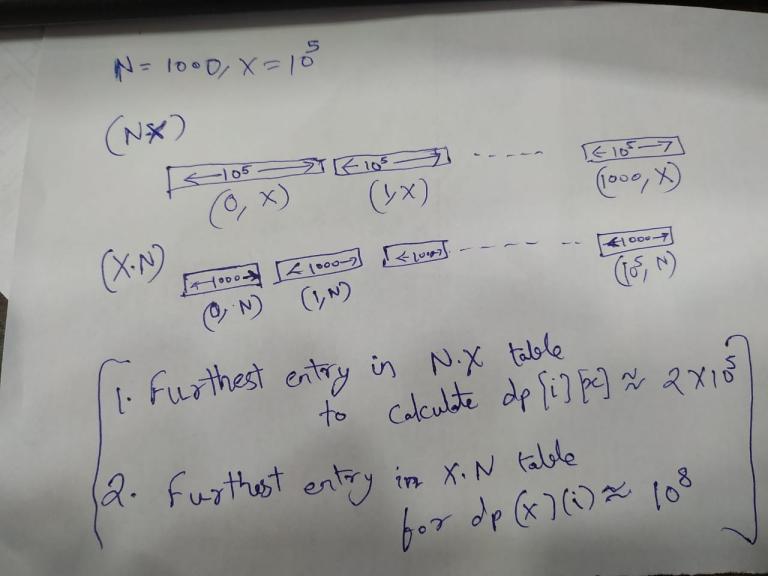

TLEseveral testcases). I solved it and AC in first submit, but the logic is the same as I have solved it before. Lmao =)))I've gotten two more questions about TLE in Coin Combinations II, so I guess it deserves a comment. Since we are doing $$$10^8$$$ operations in one second, we need those operations to be efficient. This means we can't have many cache misses.

You get cache misses by accessing array entries that are far away from each other. My implementation loops through

i, thenj. It accessesdp[i][j],dp[i-1][j]anddp[i][j-x[i-1]].If you order your loops differently (

j, theni), or usedp[j][i]instead ofdp[i][j](so you swapped the meaning of the dimensions), you will likely get TLE.In terms of rules of thumb, we see that the dimension containing

j-x[i-1]varies most, and therefore put it as the inner dimension of the dp array. And we loop through the dimensions the same order as the dimensions of the array. Below are some more detailed explanations.If we loop through

i,j-x[i-1]varies a lot, this means cache misses. Therefore we need to loop throughiin the outer loop. Looping throughjgives contiguous memory accesses, so we get few cache misses by havingjas the inner loop.If you define your array as

dp[j][i]instead ofdp[i][j], thendp[j-x[i-1]][i]goes far fromdp[j][i], compared todp[i][j]anddp[i][j-x[i-1]]. This is because the outer dimension gives smaller distance in memory than the inner dimension (you can think ofdp[i][j]as accessing index $$$10^6 i + j$$$ in a linear array, if the second dimension has size $$$10^6$$$).Is it the same reason (that O(n * sum) solution requires 108 ops, in worst case) and hence top-down is not feasible due to extra overhead of recursion ?

include<bits/stdc++.h>

using namespace std;

define fast ios_base::sync_with_stdio(0);cin.tie(0);cout.tie(0);

define pb push_back

define ll long long int

ll mod = 1e9+7; const int N = 1e5+7;

int dp[101][1000001];

int main(){ fast; int n , val;

}

This Code is still giving TLE ..Can you please let me know where its going wrong? Its the same code as yours!!

use int instead of long long

May i know what difference will it make please.

blog

I solved the problem in a different way: https://cses.fi/paste/592407cb546d6d442325ac/

It passes all the test cases but I'm still confused why it works, since everyone it's using 2d dp...

This is a solution of Coin Combinations I while others are talking about Coin Combination II.

I was particularly struggling with this, and your guidance helped me see the solution. I appreciate the time and effort you took to explain things so clearly and patiently.

In Book Shop , if I buy the

i'thbook then shouldn'tx-price[i]money be left for the remainingi-1books , so I can't see why x-price[i-1] money is left for the remainingi-1books as that would imply that the money left is after buying the(i-1)'thbook. So if icecuber or anyone else could please explain this it would be a great help. Thanks.The array "price" is 0-indexed, so the price of the i'th book is price[i-1].

I feel so dumb for asking such a question as now it is obvious what you were trying to say. And I am sorry for asking something like this. I am thankful to you for answering and also for the editorial. Thanks icecuber.

For Coin Combinations II (1636), my solution getting TLE in 3 TCs. Unlike editorial, I'm trying top-down approach. Can someone help please?

TLE on 100 1000000? I don't know but I think top-down will be slower when the table is filled-up

In Two Sets II problem,

Because by only considering numbers up to n-1, we always put n in the second set, and therefore only count each pair of sets once (otherwise we count every pair of sets twice).Why putting n always in second set gurantees unique sets? How to prove above statement.

Since you aren't having 'n' in your first set, you'll never have a first set that consists of a combination involving 'n' i.e. all the times a combination requires 'n' you are simply not counting it... thus you end up counting things only once.

Please do the same for "Graph Algorithms" section. Thanks.

please provide editorial for graphs problem. Thanks:)

It takes a long time to write up an editorial like this, and the graph section has like a billion problems. If icecuber provides an editorial that's great, but there exists code on github for all the problems so if you're stuck you shouldn't rely on him making an editorial, as he may not want to. Notice how people asked 7 months ago (comment) and he still has not, so I doubt it'll happen smh. No need for people to keep asking.

Check out the AC codes for all CSES dp problems here:

https://github.com/pratikkundnani/cses-dp-solutions/tree/master

I will keep updating the repository! I will be solving graph problems too.

Star it if you liked it.

Thank you!

Can you write an editorial for the graph section too...it will be a great help..

Why my code for Minimizing Coins gives TLE for some test cases, even it's complexity is also O(n*target)?

The

i%arr[j]is causing the TLE I believe. I removed it, since it isn't required asdp[i-arr[j]]will handle it.This is because the constraints are already too tight and modulo operation is time costly operation. Also, sorting

arralso improved the time a bit, but major contribution was made by remove the module operation.Hope this helps.

Sorry for the poor formatting, I am not able to figure out a better way.

Can anyone explain the matrix expo solution to Dice Combinations? It has much lower complexity ($$$6^3 \log n$$$ afaict). This is the code, for example, which used the matrix

raises it to the power $$$n$$$, and then prints

dp[0][0]as the answer. Can someone explain how do we come up with this matrix in the first place?Check CP handbook's matrix chapter's linear recurrences section.

Please give the link of CP handbook , you mentioned above.

CP Handbook

Thanks a lot icecuber! I found your editorial super useful.

It was hard for me to understand the Removal Game (1097) solution at first. I even found another version here, and struggled comprehending it as well :-) Like, I saw how the code could possibly work, of course, but the trail of thought leading to that code was eluding me. So I found my own trail then, which may be somewhat more beginner-friendly. So, here it is:

Given the input numbers

xs, I started with two arrays:A[l][r],B[l][r]-- the scores for each player, when they have the first move on the range of[l, r]. Now, say, I'm the player A, and I pull from the front of the range -- thexs[l]. Lettbe therange_sum(l, r). Then,t = xs[l] + B[l + 1][r] + alpha, which leads toalpha = t - xs[l] - B[l + 1][r]. Thus,A[l][r] = xs[l] + alpha = t - B[l + 1][r]. Similarly, if I pull from the back of the range,A[l][r] = t - B[l][r - 1]. The optimal strategy is to take the largest of the two numbers.Next, we make an observation, that the

Awill be identical toB, and we only need one DP matrix.What is the alpha here?

and can u explain how A and B and identical?

Oh, right. I didn't explain that

alpha, did I?alphais whatever remains fromA[l][r]afterxs[l]is taken:alpha := A[l][r] - xs[l]. It's just a temporary variable that helps in deriving the recurrence.Now, regarding the sameness of

AandB. As defined,A[l][r]is the top score for a player, when they have the first move on the range of[l, r]. And, whether that player 1st or 2nd is, is fully determined by the parity (even/odd) of the interval's length:r - l + 1. Thus, designating theAfrom theBis actually superfluous.That's clear now

thanks

In the edit distance problem, it says -- "When we calculate dp[i][k], there are four possibilities to consider for the rightmost operation. We check all of them and take the cheapest one."

Why do we only need to consider the rightmost operation? I feel this is worth discussing (either in the post or as a reply to my comment).

In Coin Combinations 2, you don't need extra dimension/state. dp[x + 1] = {0} would work fine. dp[0] = 1. dp[i] represents no. of ways to reach value 'i' with coins considered so far. So loop through coins in any order, and update values of future states of dp by looping through previous states of dp from left to right i.e. from 0 to x. That is dp[current_coin + state] += dp[state];

Space: O(target)

Time: O(target * n)

Can you please clarify your approach?

you just did the same as coin combination I but you did it with the forward approach or the push DP so I'm very confused right now why the push DP here counted only the unique solutions ?

In Coin Combinations II(1636) shouldn't the vector x be sorted?

I am making detailed video explanations for some of the problems of this section, I will keep updating this comment whenever I add a new video. Along with the explanation I live code the solution at the end of the video and submit the code on the platform.

Interested may check the following videos.

Dice Combinations: https://youtu.be/5gd5jptXWAM

Coin Combinations: https://youtu.be/-pXjopzMVrE

Grid Paths: https://youtu.be/V64F4wlodUM

Book Shop: https://youtu.be/qpNy2nuSuaI

Also added on my channel videos for coin combinations 2, array Description, removing digits, edit distance, Rectangle cutting and projects.

Hopefully people will find this helfpul.

Links to Video solution for all problems can be found here: https://mirror.codeforces.com/blog/entry/80064

I have been trying the problem Book Shop for a very long time now. I am using the same logic as given by you. Still, it is giving me TLE. Is there any other approach for this question or some optimization that I can do on my code:

Here is my code:

Incase you missed it, I have replied to your comment on my youtube video: "Honestly it's sad that the time limit on the platform is very tight. Try changing the loops, make outer loop for n and inner loop for x and it should pass. Try it and tell me if it works. I'll give you a plausible explanation why this works."

Yes, I just did that and it worked. But, I have no idea why this happened. The time complexity for both of them is same but just interchanging the loops is reducing the time it is taking for the program to run. Looking forward to your explanation on this. And yes, Thanks a lot.

I am no expert but here is what I can tell you, There is something known as locality of reference, if your program uses a part of the memory which is located in contiguous memory addresses(or nearby memory addresses) it will execute faster.

Why is that so?

Caching. The OS(again I am no expert, but hopefully someone will put more light on this) will cache some of the regions of the data stored in main memory, accessing cache is much faster than accessing main memory.

Ever Thought why iterating an array is much faster than iterating a link list of same size even though asymptotically both of them are O(N) operations.

Try laying out your 2d array and think which of two approaches will make more compact memory region accesses.

Hope this helps!

Thanks for the reply again but correct me if I am wrong, If I have 2D array of N*X and another of X*N and in both of them I iterate wouldnt it take the same time. I understood the part that contiguous memory will be faster to iterate on but aren't both the loops doing the same thing. In both cases we are iterating on contiguous memory locations. In the first case, we will do like X iterations N times and in the second we will do N itetations X times. So, does it affect the running tume considering X is greater than N. Sorry for the trouble but it would really help me if you van explain a little by taking an example. How about you explain this when N is 2 and X is 5. This will really clear the doubt.

keeping in mind caching and locality of reference think which of the two recurrences will likely evaluate faster:

1. dp[i] = f(dp[i-1])

2. dp[i] = f(dp[i-some_random_number(1,i)])

In main memory 2d, 3d, 4d all arrays are flattened and stored as 1d arrays. So flatten N.X array into N arrays of size X each and X.N array into X arrays of size N each.

Take 5mins and then check if this pic helps you a bit:

Even I would appreciate a response from someone who is an intermediate or expert at COA and OS concepts but till then this is the best explanation I can come up with.

Even I would appreciate a response from someone who is an intermediate or expert at COA and OS concepts but till then this is the best explanation I can come up with.

Ohhh I think now I am having a much better understanding of this thing. The picture was quite explanatory. I looked at both of my codes and realised this is actually quite sensible. When I am doing dp[i-1][j- some random number] it is like going 1 time back in 10^3 array and then it is about 10^5 places to move in the end to reach j-some random number. But in case I do dp[j-some random number][i-1] it will go back in 10^5 array which has 10^3 size sub array and this will happen arbitrary number of times so it becomes 10^8. I guess this is only what you are trying to say. Thanks for the wonderful explanation, really appreciate your effort to explain this. I have understood it.

Yes that is what I was trying to say, I am glad it helped :)

bro,can you send solution for elavator rides question

Thanks a lot for this! Best problems for beginner in DP and editorial is perfect for beginner to understand dp!

This is my code for (Two sets II) problem. My approach:-

dp[i]= number of ways to make sum using subset 1..i.Can anyone help me find my mistake?

you can't make dp[sum]/=2 after module

you need to use modular inverse for the divison problem

hey did anyone else get a runtime error (like heap space error or something) on coin combination 2 or is it just my code?

Can someone explain why the solution for increasing subsequence works? Specifically I am confused about how we can replace an element in the dp array with an element later found. Doesn't this not make dp a subsequence?

I Hope, This may help anyone!

i didn't get about removal game can anyone make me understand why we have to take the mean at the end

As stated, score1 + score2 = sum. With our dp, we calculate max(score1 — score2).

Add both of these, sum + (score1-score2). = score1 + score2 + score1 — score2 = 2 * score1

Divide by 2 to get max score1.

Hey! Can someone give the formal proof for the Greedy Solution of 'Removing Digits'?

I tried to formulate a proof, but no luck :(

Thanks for the great editorial. I solved some problems on my own. If I didn't got the logic for some, I read the editorial and got the idea. Some problems involved some good tricks which I learnt. Thanks a lot.

Two Sets II (1093)

for this problem why the recursive solution works, even if we take n instead of n-1.

When current = 1 and sum = 1 when you go to solve(0,0) you returns 0 because of condition current<1 returns 0

your code is not taking 1 in the first set instead of n

The problem Projects — 1140 can also be solved without using the compression technique.

The problem can be treated as a 0-1 Knapsack optimisation problem with one extra constraint that the events performed should not overlap. For evaluating this constraint, sort the events by their start times alone, so that we can use upper bound of an event E to find the index of an event which can be performed after an event E.

See the implementation below for more details.

Why does CSES problem Set give TLE in some problems (in one or two test cases), even if my asymptotic time complexity is the same as that of the intended solution?

In Rectangle cutting, one can exploit symmetry and stop both the innermost loops at i/2 and j/2 respectively.

Hey I am stuck in problem of Counting towers of DP section CSES problem set can someone plz help !! Thanks in advance..

i found this useful: youtube video solution

Code for new problem counting towers.

Thank you for your useful comment, but I'm too foolish to understand the code. :<

So could you please explain it a bit?

It would mean a lot if you could help me by taking some time off from your busy schedule.

Basically

dp[i]means the number of ways to build a tower of heighti. The next index means if the tile at theith place is split or not. That is, if0the tile is split (2 tiles of 1 x 1) and if1we have a single tile of2 x 1.Finally,

dp[i][0]means the number of ways to build a tower of heightisuch that the top tile is split ( 2 tiles of 1x1) anddp[i][1]means number of ways to build a tower of heightisuch that the top tile is continuous (1 tile of 2 x 1)Now for the recursive function:

If the last tile is split (

dp[i][0]), it can have the following options:dp[i - 1][0]dp[i - 1][0]dp[i - 1][0]dp[i - 1][0]This means

4*dp[i -1][0]And the last case, it can add split tiles over the continuous tile of

i - 1th height:dp[i - 1][1]dp[i][0] = 4 * dp[i - 1][0] + dp[i - 1][1]Similarly if the last tile is continuous (

dp[i][1]):dp[i - 1][1]dp[i - 1][1]dp[i - 1][0]dp[i][1] = 2 * dp[i - 1][1] + dp[i - 1][0]Hope this helps!

PS: Use an int array instead of vector in the code otherwise you'd get a TLE

It would be great if everyone could enclose their code into a spoiler, it creates unnecessary mess sometimes while navigating.

I was trying to solve these problems using recursive dp, I was facing an issue in the Book Shop problem, I was getting runtime error on large test cases though I feel I am using the same space as required in iterative dp. Link to my solution.

icecuber For Two Sets II, why won't taking it all and dividing my 2 work?

My code: https://cses.fi/paste/1ea2ecdcdcc46e54190a53/

Is it because of mod?

yes, it's because of the mod. However for odd mod, you can divide by 2 by multiplying with 1/2 which is just (mod+1)/2.

Wow! Thank you so much :-)

Just for information if mod is prime and you have to divide it by x, then multiply the numerator by pow(x,mod-2). Do not use predefined pow function. Create your own pow function to handle mod.

please add editorial for counting towers problem https://cses.fi/problemset/task/2413

See OEIS A034999

Consider the top row of a rect filled with n rows. There are two cases. The both cells of the top row can belong to one element, or to two elements.

In both cases we can formulate how the numbers are calculated for n+1 rows.

Bookmarked this blog. Thanks a lot :)

What about the Elevator Rides?

A hint would help. It seems to be some form of bitmask dp, but anything I implemented goes TLE :/

Here one of my

My bitmask dp state was dp[mask] = minimum elevator rides possible for people in the mask and the weight less than x . Its a pair of the form {minimum elevator rides, weight}. For every mask, the people are fixed, so we try finding the minimum elevator rides for them by adding each person to the mask individually and check if the elevator rides were enough(by checking that weight of people stays less than x) or we need to add another ride to accommodate the new person.

Check pages 103-104 of the CP Handbook.

can anyone tell me why my code is giving runtime error on some of the test cases for problem Coin Combinations II

Thanks in advance.

update: I found my mistake.changing the type of array dp from ll to int worked.

Coin Change 2 give TLE with O(n*target) time complexity

solution link: https://cses.fi/paste/c0e3ad19fd184ec3227a11/

While defining your dp vector just change it from vll dp(amount+1,0) to vi dp(amount+1,0). Using 'long long' instead of 'int' is probably why you're getting TLE

I tried it and got TLE again:https://cses.fi/paste/04b6ac464bf1ec0b228547/

Here have a look at this : https://cses.fi/paste/262b104a22044a1a22864a/ Just changed the way you applied your mod operation and the code got AC!

Yes got AC. Thanx. But why my code gave TLE even changing after vector to vi

Can anyone give me some hints or kind of editorial to solve the problem, Counting Towers?

Thanks in advance.

There are some youtube video explaining the approach, you can check it out. It helped me understand how to approach these kind of problem.

In the book shop question ,I used the same approach but got Runtime error when I used long long int and with int ,my solution got accepted. Why is this so?

I don't know why but the recursive approach is giving me TLE for some of the problems.

CSES grader is slower than CF, and recursive approach has higher constant factor than bottom-up.

A doubt Regarding that edit distance problem, would it not be fine if we just do it like. dp[i][nb,nb-1]... i goes all the way from 0 to na, but k takes only either nb or nb-1. i dont see any point of storing for all the k from 0 to nb...when we are just trying to make a equal to b and using only k and k-1. let me know if it makes any sense....thanks!

For Two Sets II (1093), the article above says the time complexity is O(n^3), isn't it supposed to be O(n^2)? Am I missing something?

Also, the solution seems to get accepted even if you include the nth element and not divide the final count by 2? See https://cses.fi/paste/c3ec33b10e14f1392ddcb6/

Target = n *(n + 1) / 4; for(i...n) for(j...target) => n * n * (n + 1)/4 => O(n^3);

I made a YouTube playlist where I solved all the problems. Check it out here: https://www.youtube.com/watch?v=vuEWTjqf9Wo&list=PLC6tU0n3mgCL-WyIIxzNt5BEgPpquufeB&ab_channel=AnthonyNgene

Good luck.

thank you so much, I was struggling to understand Removal Game , until I watched your video.

Can Someone Explain to me why does a Greedy Algorithm does not give the correct answer in the problem Rectangle Cutting Link. What I am doing is to cut the maximum possible square I can at every step, Let's say at the current step the size of the rectangle is a*b (a < b); then i cut a square of size a*a; after which the size of the rectangle becomes a*(b-a), and I do this until the size of the rectangle becomes 1*1.

I was not able to come up with an argument as to why this does not work, it seemed pretty intuitive to me and thus I just wanted an explanation proving it wrong

Take a $$$5 \times 6$$$ rectangle. Here's what you do vs a better solution.

Really the better question is to ask "why would greedy be correct", not "why is greedy wrong". Greedy is usually wrong; if it is not, there is a good reason for it.

I was doing the same bro but its gives me wrong answer

Isnt the removing digit code actually n*log(n) since for each number we have to iterate over all its digits and the number of digits grows logarithmically?

Rectangle Cutting Problem gives tle when i write i use recursion and memoisation

int solve(int a,int b,vector<vector>&dp){ if(a==b)return 0; int mini=INT_MAX; if(dp[a][b]!=-1)return dp[a][b]; for(int i=1;i<=a-1;i++){ int aa=solve(i,b,dp)+solve(a-i,b,dp)+1; mini=min(mini,aa); } int x=mini;

for(int i=1;i<=b-1;i++){ int bb=solve(a,i,dp)+solve(a,b-i,dp)+1; mini=min(mini,bb); } return dp[a][b] = mini; }is it possible to solve coin combination II recursively, I was doing this for long time and it is give me TLE

Can you share your submission link?

https://cses.fi/problemset/result/6586952/

The time complexity of your code is around O(n^2 * k) which will not be accepted under given constraints and time limit

but my iterative solution worked which has the same time complexity https://cses.fi/problemset/result/6602922/

The time complexity of your iterative solution is O(n * k), whereas that of recursive solution is O(n * n * k)

can you share me some resources on how to calculate time complexity of solutions of dp

I didn't find elevator problem here

in Two Sets II you can also start iterating with j from 1 instead of 0 and that solves the problem of counting every combination twice. The answer will be stored in dp[n][n*(n+1)/4]

For Removal Game it looks like there are multiple ideas. Some of them work in Python. My idea TLEs.

If player 1 is to move, then score is the element. If player 2 is to move, then score is just 0 for player 1. From there, you go inductively, going over all games of length 2, length 3 etc. The update rule is simply:

Player 2 will do whatever minimizes the outcome for player 1, because then she obviously gets the rest of the total sum for herself. Player 1 does whatever maximizes his sum.

I guess reducing this to 1 DP matrix (by thinking about total sums), and then reducing that to 1-dim DP array (by thinking about prefix sums), makes this not TLE for Python. I guess it's not as trivial to get there.

I am not able to understand the code of Removal Game CSES problem , Can anyone please explain me? As per my understanding this code should be going out of bound but it is working fine!! ``

``

... `#include <bits/stdc++.h> using namespace std;

int main() { int n;

cin >> n;

vector x(n);

long long sum = 0;

for (int&v : x) { cin >> v;

}

vector<vector> dp(n,vector(n));

for (int l = n-1; l >= 0; l--) {

for (int r = l; r < n; r++) { if (l == r) { dp[l][r] = x[l]; } else { dp[l][r] = max(x[l]-dp[l+1][r], x[r]-dp[l][r-1]); } }}

cout << (sum+dp[0][n-1])/2 << endl;

}`

How is it supposed to go out of bounds? The only condition when it could have gotten out of bound was when l = n-1 or l = 0 because in both cases, when l == r, r could've gotten 0-1, or l could've gotten (n-1)+1. But since you're dealing with this condition, when l == r, you're just assigning dp[l][r] = x[r] in the if-else condition. Hence, another block, which might cause out-of-bound, will not execute.

I know it's an old blog, but why does everyone usually use iterative do? I don't understand it and use recursive but like it fails a test usually.

The increasing stack size might lead to a TLE / MLE in recursive code. Iterative codes are a bit more efficient in that respect. Another reason is that there are some questions that can be solved only using an iterative approach. One such problem.

Hi, have you seen array description problem in cses? Doesn't it feel like it can be solved without dp.

like this:

The code written for the question ** Coin Combinations I (1635)**, the test case where n = 3, x = 1001 and array = {1, 1500, 1000} gives answer 3 but the correct answer is 2. If correct answer is not 2 and instead 3, please explain how ?

there are 3 ways {1,1000},{1000,1},{1,1,1,..(1001 times)..,1} hence ans is 3 not 2.

would have been 2 if asked for discrete combination i.e "Coin Combinations II"

why my code for grid paths problem (1638) gives runtime error for test case 5,6,7,8 on CSES judge?

Please help!!!

Looking at the solutions for Removal Game on cses.fi it seems that all top solutions (i.e. https://cses.fi/problemset/hack/1097/entry/6190993/) utilize an observation that for every three consecutive coins c1 c2 c3 where c1 < c2 > c3 one of the players always takes c1 and c3 and the other always takes c2. Using this observation we can reduce each such coin triplet to a single coin (c1) (c2) (c3) -> (c1 — c2 + c3) until the input becomes a bitonic array (decreasing up to some point after which increasing), on such array the problem can be solved greedily. After many futile attempts at proving the observation I tried breaking it with millions of testcases but it didn't fail once so it is probably valid. Can anybody help me with the proof?

Two Sets II (1093):recursion with memo

https://cses.fi/paste/36a7d36bf570d45e91887f/

Coin_Combination_II

What about this easy code that i construct in my own..

In the 'removal game' problem. There is a straightforward (not exactly straightforward) approach, which is as follows —

we define

dp[i][j]as the maximum score the first player can get within range of I to j if he starts first.The transitions are as follows —

if

i == j, thendp[i][j] = a[i].if

j == i+1, thendp[i][j] = max(a[i], a[j]).(note that the first player makes the first move in the range (i, j). Hence, he will pick the maximum element).

When

j > i+1,dp[i][j] = max(a[i] + min(dp[i+2][j], dp[i+1][j-1]), a[j] + min(dp[i][j-2], dp[i+1][j-1])).Suppose the player first picks the i-th element, then his score will increase by a[i]. But what will be the next state, dp[i+1][j]? No. Since the next turn will be of the second player, he will again pick the maximum element from the available range (i+1, j). Hence, if the second player picks the first element from this range (i+1, j), the next state, where the player first can again make a move, would be (i+2, j). Another possibility is when the player second picks the last element from that range; in this case, the available range for the first player to again make a move would be (i+1, j-1). Since the second player is picking these elements, we must ensure that this total score is minimum. Hence, we take the minimum of both possibilities and add it to the current score of the first player. Similarly, other events will follow if the player picks the last element first. In the end, we take the maximum of these two. Our final answer would be stored in dp[0][n-1], which is nothing but the maximum score the player first can get if he starts the game in the range (0, n-1) (length of the array).