Hi!

As many have noticed, sometimes problem ratings were assigned in a strange way that was not consistent with expectations. For example, ratings for complex problems of Div3 rounds were often overestimated. This was mainly due to the fact that high-ranking unofficial participants did not try such problems. It turned out that despite the high rating of a participant, a problem is not solved by the participant, and this fact raised the rating of the problem. It is not entirely correct to take into account only official participants since ratings for difficult problems are sometimes more accurately determined by unofficial participants.

Somewhere in the comments, I've read that problem ratings are set manually. Of course, this is not so. The process is automated, but I start it manually (I will fix it somehow).

I changed the formulas for calculating problem ratings, now they slightly better correspond to expectations. New problem ratings are already available on the website. I don't think they are perfect (but I hope that they are much better). If somewhere ratings obviously are wrong — it would be great to see such examples in the comments.

Thanks!

UPD 1: Thank you for examples of unexpected problem ratings. I'll try to fix them (I don't think that it is possible to fix all of them without manual work) and return with an update.

UPD 2 [May, 2]: I made another attempt to adjust the coefficients, to take into account some facts differently. The ratings are recalculated again. I carefully went through most of the comments and indicated new ratings. Now it looks a little better. I afraid, there are still some issues with some problems. Try to find them and demonstrate them in the comments. Thanks!

Is there a way to assign difficulty to the special problems. Or maybe hide them from the sorted by difficulty list of problems altogether?

One of straightforward ways to start solving problems is going through archive in increasing order of difficulty, but you need to skip to the second page to do that.

What do you think about such quick-fix: put non-rated problems to the end of the list (after most rated problems)?

UPD: I just removed unrated problems from the sorted list of problems.

kinda works, but I'm sure some of the more successful contestants are using descending order of difficulty to warmup XD

This is a good update, particularly since problem recommendation bots, i.e https://github.com/cheran-senthil/TLE use these problem ratings.

Hope everyone can ;gitgud faster now

Have you used this? I rely on https://recommender.codedrills.io/.

:)

oh i think taking the users opinions could help, like some one who solved the problem in a hard way will give some rating to his solutions i think this may stable the rating :)

If you mean let the users give ratings to problems, and calculate the rating of a problem by something like the average value of these ratings from the users, then it's probably not a good idea.

It sounds good, but it's hard to judge the difficulty manually, and it could be abused. It is especially inaccurate when a problem is solved by only a few people, and it is hard to correct the difficulty of a problem solved (and voted) by lots of people. People often follow others in the voting, just like to upvote/downvote a comment.

In the Chinese online judge Luogu, the difficulties of problems were judged by users' votes, and they were far more inaccurate than Codeforces. Now Luogu has dropped voting for problem difficulties, and now the difficulties are set manually by the problem uploaders and the admins, though users can send feedback on the difficulties and hopefully they will be corrected.

However, it could be a good idea if the votes are used to improve the rating calculation algorithm instead of directly change the problem ratings.

For difficulty, besides taking in the rating of people who solved it during a contest, perhaps it should take duration of the problem and number of tries as well.

Perhaps there should be a separate "popularity" concept (for eg. on leetcode, you can see the likes and dislikes for any problem). "Popularity" is different from "Difficulty", but may be useful as a second dimension. So if I'm looking for a binary search problem in the 1800 difficulty level, I would likely start off with something that was rated highly by a majority of people. More specifically, perhaps rated highly by people who were 1800 or higher at the time they solved it.

While there is always room for abuse in any situation, I wonder why anyone would be interested in abusing a problem's rating (maybe if they were incredibly frustrated with the problem, or had a vendetta against the problem writer and decided to downvote via multiple accounts? :) )

I agree that a separate vote for "popularity" is good.

For example, one may vote for 3800 in every problem he/she solves, so that in his profile (something like cfviz) there will be more hard problems. What's more, it's hard to guess how people would abuse.

If you are interested in this, there are some relevant discussions on Luogu (in Chinese):

One of the reasons for the increase of abuse in votes for problem difficulties on Luogu, is that Luogu added a new feature that the difficulties of problems a user solved are displayed in his/her profile page.

Thanks, I see what you were saying. It's interesting to see how they detected the cheaters.

How about only accepting votes on difficulty when the reasoning for this difficulty is explained and only from accounts with more than X contests to prevent abuse (prevent getting Amouranth Mod Copypasta as the explanation)?

You mean someone should read these explanations? How cruel.

I think it's OK if someone could read them instead of should read them.

Eh, it's a Chinese OJ, they're used to such work.

I don't mean checking if they're correct explanations, just a brief look that they seem like something written seriously. And if nobody wants to waste time on that... well, that's just the normal situation with no user feedback, nothing of significant value was lost.

It wasn't in the comments exactly — https://mirror.codeforces.com/blog/entry/76129

Just curious, did most problems lose rating? Because from what I can see on 1st and 2nd pages of problemset, I think all E and F problems of Div3 lost some rating.

Mostly ratings not changed, but speaking about changes: problems mostly lost points than gained.

This problem (hard & easy version) have the same rating. What seems a bit weird.

1304F2 Animal Observation (hard version) 2300 x679

1304F1 Animal Observation (easy version) 2300 x895

I tuned some formulas and recalculated the ratings again. Now they are F1=2300 and F2=2400.

In Round 1305, 1305G - Kuroni and Antihype only had 1 solve from Um_nik while 1305H - Kuroni the Private Tutor had 2 solves from tourist and maroonrk. However, 1305G - Kuroni and Antihype had a rating of 3400 (3300 before) while 1305H - Kuroni the Private Tutor had a rating of 3600 (3600 before). I am very curious about what other parameters are used to calculate this problem difficulty.

600F - Edge coloring of bipartite graph had a rating of -1 lmao

It seems I broke the formulas in case of no accepted submissions on the problem. Fixing it now, thanks for pointing it.

UPD: Fixed

-1issue.i have 6 demands who should i approach

Trump

Now both of them are 3500. Actually, I've implemented cutoff like

rating = min(max(800, rating), 3500). I don't think ratings are very reliable for extreme cases like too small or too hard problems.Now I can see rating of any problem that I haven't solved yet, however I didn't choose "Show tags for unsolved problems" in my settings. As far as I remember, I couldn't see it before the update

Thanks for the update.

There is a small bug in the problemset now. The table with the problems contains a header with 4 cells: # (id), name, lightning (rating), and green check (solved). If the problems of the table don't contain rating then the lightning dissapears from the header. For example, in the fist page when it's sorted by rating desc. When it happens, it's not possible to click on it to sort by rating asc.

Thanks, I'll fix it.

UPD: Fixed it.

...And there go my dreams of ever solving a 2000-rated problem in a contest....

Bro, your rating itself is around 2000, meaning on average you do solve 2000 rated problems in contest.

He's just being modest

Could you maybe share the code/formulas as well?

Unlike things like cheating detection algorithms, it doesn't seem to be something that could be abused.

I guess it will be beneficial both for answering all kinds of "how does it work?" questions and for getting input from participants about potential improvements.

MikeMirzayanov I am also quite curious about the problem rating formulas, and it would be great if they were public. It would allow high rated people from the community to improve them. Lots of problem recommendation bots/apps are using problem difficulties so it will be really beneficial if these formulas were the best possible.

Also, as somebody suggested below, It would also be a nice idea to formalize the rating calculation problem.

This is quite funny

same problem!!! Div 3 round #527.

Accepted submission for hard version should be considered for easy version too when only difference between two version is constraints. Because, one who solved hard version will never solve easy version in practice mode . This can make more submission of hard version than easy version.

Issue is with the ratings of the problems, not with the number of submissions!

I think no of solve is one of the fact to calculate rating of the problem.

Now both of them are 2200.

This probably means that Mike has taken the order of solving into account as well, as some of the users solve F2 first and then submit the same solution for F1.

Same is the case with 1256F - Equalizing Two Strings and 1256E - Yet Another Division Into Teams as some people solved F earlier than E during the contest.

Now it looks better: F1=2100 and F2=2300.

According to official standings, 821E - Okabe and El Psy Kongroo had 100 solves and 821D - Okabe and City had 34 solves. But 821E - Okabe and El Psy Kongroo has 2100 difficulty and 821D - Okabe and City has 2000 difficulty.

Now D=2200, E=2100.

It's good, but I think the rating of some easy problems rather became too overestimated. For example, in this contest, the rating of problem B was 1100 before. Now it became 2000. It's quite weird to see the problem having most solvers has highest rating.

I don't know where people usually report bugs (maybe, make a blog or something?) but I guess I've found one and I'll report it here.

You exceeded your quota of 2 distinct recipients per hourI get this message when I try to send a text message in "Talks". I first sent a message to DeadlyCritic and then to pritishn. I didn't try texting any third person apart from these two. I only was replying to their replies to my initial message. In this case, why would it not let me send a message? The meaning of this error message isn't what it's trying to convey? Or is it a possible bug in CF codebase that doesn't let you respond to two different people within the same hour? Never faced this before when I was texting only one person (during an interval of one hour, not that I knew about this anyway).

65A.Harry Potter and Three Spells

It's not that hard, but the rating is 1800.

Mike, just a suggestion, What if you formalise the problem of rating assignment and let the codeforces community solve it for you ?

or there could be two ratings , one by automation and one by community.

Oh, I think that will be good. That's sound great. I hope that day is coming soon.

1326F1 - Wise Men (Easy Version) I think it's difficulty is far below 2600 and far below 1326E(2400).

During the contest, I wrote a $$$O(3^nn^2)$$$ brute force bitmask DP without any thinking. It passed the system test outrageously. I think the difficulty of this method is at most 2000 (because it needs no thinking or observation). Maybe the low AC-ratio in contest is because many contestants didn't believe it could pass so they didn't write it at all.

20C

This is vanilla Dijkstra, should be < 1600

Also 757G - Can Bash Save the Day? is just (see spoiler) , ~130 users solved it , It should be < 2800 but it is 3700 now

centroid decomposition

But from the scoreboard we can see that no one solved it during the contest (ignoring the gray guys at the top of the scoreboard who "solved" it virtually). Probably there was not enough time to solve a tree query problem after also solvig the previous 6 problems.

For any algorithm that looks only at contest-time submissions all such problems are indistinguishable, so it makes sense to give them 3700. And I'd hate the idea of looking at post-contest submissions because they have a lot more problems. If a problem has simple but hard to come up with solution, then people will copy it from the editorial more. And some problems have just become famous, featured in some popular tutorials etc.

You have to factor in both in-contest and post-contest submissions or precisely this happens — you get a 3700-rated problem that definitely isn't that hard.

I'd prefer getting a 3700-rated problem that definitely isn't that hard. If you factor in post-contest, you will likely get some other rating that doesn't make sense.

There will always be some ratings that don't make sense, but why would it get worse by adding post-contest results? Look at the examples above, there are some serious nonsense ratings now.

In some of problems many of contest participants fail on system testing , But their solution is true and its really easy , and they will fail on some small case (n = 1) , solving this problem during practice is easy but their rating will be high.

Yeah, that shouldn't be part of problem difficulty rating. Neither should difficulty compared to other problems in a contest because they can be solved as just single problems in a huge problemset.

I come up with this idea:

[2] is for the rating range for problem — like it is likely to be [600, 1000] for 100% attemped

[1] is for the specific rating for problem — like it is 800 for 95% solved

Determined how?

Also how do you count attempts? If someone tries to solve a problem and it's too hard, they won't submit anything. If a problem has an easy fake solution (that will trick people into submitting), a common trap or a tight TL that brings the number of attempts up. But neither of those things changes the actual difficulty of the problem. (Actually in my opinion number of attempts does not show much at all.)

Yes my system I think there are also ones who you fake clone or wont try to submit. But I think the more participants who tried to solve the problem, the more accurate the system will be.

My "attempt value" means the number of coder ones who tried to solve that problem whether it is accepted or not.

https://mirror.codeforces.com/problemset/problem/887/F

I think the rating of this problem will be higher than 1600 a lot.

Agree. I was floored when I saw this problem's rating to be 1600. Originally it was 2800. It was a pretty nice problem and I thought 2600 would be just perject but 1600 LOL

2500 now.

I guess age of problems and ratings of upsolvers are also used in rating calculation. That's probably why we can find some weird difficulty rating distribution on old problems.

.

https://mirror.codeforces.com/problemset/problem/158/A

1800???

That problem had rating 1800 originally.

How/Where did you find the previous rating of the problem?

I didn't find that — just remembered that.

Now 158A=800.

i think this problem does not deserve 1700 difficulty.

I believe that all the problem ratings should be manually set by the problem writer.

This solution should remove the problem of Div 3 problems being overrated, and simultaneously of some problems being underrated.

Additionally, since the problem writer won't get credit for solving the problem in contest, he gains nothing by "boosting" the rating.

Additionally, Mike and others at HQ can always double check these ratings to verify their legitimacy.

No, that's a very bad idea according to me, the stats say the best about the problem difficulties. Authors can overestimate/underestimate problem difficulties because they can't see other approaches. However bad the formulas maybe, due to real data from the contest, they will always be better than what you suggest.

Not always, e.g. if a lot of people try a problem because it has a lot of successful solutions, there's a runaway error effect that can easily exceed a reasonable author's estimate. It's just hard to say if this ever happens.

Also, about rating, If we choose to hide tags for unsolved problems, it should show rating of the problem. It would be better if just problem tags are hidden and not the rating.

It is already that way in the problemset page.

This F problem from a div 2 contest has a rating of 1600. But I think the difficulty of the problem is greater than 2000.

not a pleasant surprise when you call the command ;gitgud -300 on discord :P

Can you add minimize/maximize button on problem tags such that we could only see that if we wish to.

Am I the only one who wants problem ratings to be abolished?

If anyone wants to view previous rating of problems, I had saved all problems' difficulty till 2020/1/5: link

https://mirror.codeforces.com/contest/1307/problem/F

Does this problem really has 3200 rating? Seems like too much

Now 3300 :)

[solved]

Have you considered a Machine Learning model to do the ratings? Obviously, you have lots of problems to train it.

What would you use as ground truth for training? Asking people to estimate the difficulty?

He is asking people to spot the bad ratings anyway. Might as well use them to train the model.

Might as well use that to set ratings manually. After all, the main limit is how much you're able to trust contestants' input, not the amount of this input — if you're putting full trust into that, let people vote for ratings. Any reasons why not to do that are also reasons to not use it as ground truth for training.

Neural networks are good if you want to generalise from, say, 100k user labels in a huge input space to (in the long run) even billions of automatic labels. Not so good if you want to generalise from, say, 100k labels for 10k different inputs to 200k labels for 20k different inputs.

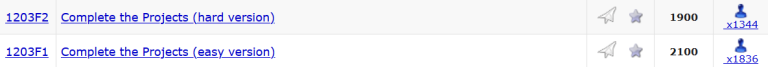

1203F1

1203F2

Bruh according to the new ratings this problem is easier than this one from the same round, which is totally bogus.

Mike, I am the owner of codeforces.ml (another mirror site for Chinese users). I have tried many other ways (talks, emails...) to get in touch with you but in vain. I hope you can see this comment and check the email which I sent to your Gmail on Apr.4th. Thanks a lot!

I know this comment is not suitable in this blog, but I don't have other means. Don't downvote me so hard :(

The problems 678C - Joty and Chocolate and 678D - Iterated Linear Function where both downrated to 1500. While C is fairly simple I was not able to solve D even after reading the editorial.

I think it should be less than 1600 888A - Local Extrema

Now 800.

I noticed that after this second round of changes, this https://mirror.codeforces.com/problemset/problem/1329/B problem is now rated 1700 (I think it was 1900 before), which is even less than https://mirror.codeforces.com/problemset/problem/1329/A problem (1800). However, it seems like more people solved 1329A than 1329B (I didn't explicitly count this though). Maybe basing purely off of solve count is not the perfect way to do this, but it is still reasonably accurate. I also think that the main reason 1329A is rated so high is because a lot of people (including some very high rated people) failed system testing on it, and that is not ideal because many people probably would have caught their mistake if the tests were full feedback, so this does not mean the problem is hard. Maybe the formula could be changed so that those who failed system tests are weighted more towards solving the problem or something?

(I also think that 1329B was a lot harder than 1329A, but my opinion isn't very relevant)

1244C - The Football Season

I dont sure, but in my mind, this problem should be 1700/1800. I have a theory, that this problem has 2000 because of weak pretests. Does formula use FST number?

MikeMirzayanov Should this problem be rated 2900 ?? https://mirror.codeforces.com/contest/86/problem/D

It is standard implementation of MO Algorithm. Earlier its rating was 2700.

"standard implementation of MO Algorithm."

Standard to what?

He told he uses only participants for rating calculations. Pretty sure MO wasn't standard back then. My reasoning behind this is most MO tutorials link this problem so this problem came before those MO tutorials. In the contest only 5 people solved it. Even reds failed to solve it.

https://mirror.codeforces.com/contest/1183/problem/E (easy version) has 2000. https://mirror.codeforces.com/contest/1183/problem/H (hard version) has 1900. Both problems have ratings 2000 and 2200 respectively prior to re-calibration.

Are the ratings final now or still being changed?

238A - Not Wool Sequences is rated as 1300.

From my point of view the difficulty is more near to 2300 than 1300.

Basically same is true for 286A - Lucky Permutation, it is rated 1400.

I just submitted 238A in 3 minutes after clicking your link, so I definitely wouldn't consider it 2300, haha (I generally find 2300 problems very tough).

I guess this also shows ratings are pretty subjective from person to person, and only valid statistically.

I worked on that problem two month ago, I kind of copied the solution from the tutorial back then, just did read the problem again...

And still be not able to understand it. Maybe it is bad math skills.

The way that I thought about it is considering all the prefixes' XORs instead. If you create a sequence of values for these, there's a bijection to sequences of the $$$a_i$$$ themselves.

If you look at constructing a sequence of prefix XORs, now it becomes as simple as "don't have any 0 and don't have any duplicates", so the answer is $$$(2^m-1)(2^m-2)\cdots(2^m-n)$$$ multiplying $$$n$$$ terms.

My submission: 86723498

For $$$a_1$$$ we may choose $$$2^m-1$$$ different numbers. For $$$a_2$$$ same restriction, but additionally $$$a_2!=a_1$$$, so there are $$$2^m-2$$$ posibilities.

Then $$$a_3$$$, same pattern again it must be different from $$$a_2$$$ and different from $$$a_1\oplus a_2$$$.

$$$a_4$$$ must be different from $$$a_3$$$, from $$$a_2\oplus a_3$$$ and from $$$a_1\oplus a_2\oplus a_3$$$ and so on. This sums up to $$$(2^m-1)(2^m-2)\cdots(2^m-n)$$$ if and only if all those therms are distinct. And it turns out they are. Why?

The tutorial constructs some b[], and says: "So we know that all elements of b should be different." How does this work?

Imagine $$$a_6 \otimes a_7 = a_3 \otimes a_4 \otimes a_5 \otimes a_6 \otimes a_7$$$. Then you can rearrange this to $$$a_3 \otimes a_4 \otimes a_5 = 0$$$.

In general if $$$a_i \otimes \cdots \otimes a_k = a_j \otimes \cdots \otimes a_k$$$ (where $$$i < j$$$), then you have $$$a_i \otimes \cdots \otimes a_{j-1} = 0$$$.

Same for 286A, but to be fair, I just copy/pasted my code from a previous problem (612E - Square Root of Permutation), pretty funny that this problem is literally a special case of the other problem (I copy/pasted the solution and replaced only the input-reading part).

I guess this problem is overrated 678C - Joty and Chocolate not worth 1600 it is worth of 1000 only.

https://mirror.codeforces.com/problemset/problem/115/A this is div.1 A and div.2 C problem.In spite of the fact that it has a simple bfs idea, but maybe it can cost a little more than 900!

MikeMirzayanov, The ratings of problems in Codeforces Round 639 have been over-inflated due to less number of in-contest submissions due to long queues.

E.g. 1344B - Monopole Magnets : This problem is hardly 1800 but it is rated 2000.

1344C - Quantifier Question : This problem is rated 2600 ! Seriously? It was a beautiful problem but in my humble opinion, the maximum possible difficulty of this task is 2100. Please look into it.

Yeah, you are right 1344B was quite easy.

. Educational 34 D 2200 x2327

. Educational 34 E 2200 x836

. Educational 34 F 2200 x367

https://mirror.codeforces.com/problemset/problem/518/A div2 A is rated 1600

https://mirror.codeforces.com/problemset/problem/540/C this question's difficulty certainly should be less than 2000!

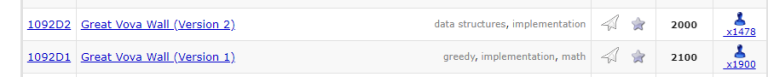

Difficulty of Subsequences (hard version) is lesser than Subsequences (easy version).

https://mirror.codeforces.com/contest/97/problem/E is rated 2200. It should be higher. Nobody solved it in the contest.

I think this problem (207B3) is quite hard. I think it cost 2700 at least. No matter what I think, it should harder than 207B2

1360G and 1360H have the same assigned difficulty, even though G had about 50% more in-contest solves than H. Perhaps one of them should be adjusted?

Yesterday's Div2 problems are highly overrated in difficulties: 1384B1, 1384B2 and 1384C have been solved by 846, 307 and 2260 Div2 contestants (rating < 1900) in contest time. But their problem difficulties has been set as 1900, 2200 and 1700 respectively.

MikeMirzayanov The problem 1603F - October 18, 2017 is a div1F and had only 3 solves in contest, yet its difficulty rating is very low at 2700. For comparison, the div1E in the same round was rated 3200 despite having more solves.

The likely cause is that rainboy solved this in contest, and he is of course underrated on purpose because he always attempts problems in reverse order.