Hi Codeforces!

I've recently noticed a lack of simulated annealing tutorials, so I decided to make one. It all started when I was trying to "cheese" 1556H - DIY Tree after the contest. In general, simulated annealing is a pretty niche topic, but it can sometimes lend you unintended solutions for very hard problems. It's also very useful in contests like Google Hashcode, where you can add simulated annealing to an already good solution to make it a lot better. Simulated annealing's name and terms are derived from physical annealing, the process of letting metals or glass cool down and harden while removing internal stresses.

An Overview

Simulated Annealing is an approximation algorithm. It's generally useful in problems with low constraints (i.e. $$$n \leq 50$$$ or $$$n \leq 100$$$) where you need to find the minimum/maximum of something over all possible states (and there are usually way too many of them to check). In general, it's good at finding the global maximum/minimum of a function. For the rest of the blog, I'm going to assume that you want to find the minimum of the function (the maximum case is equivalent).

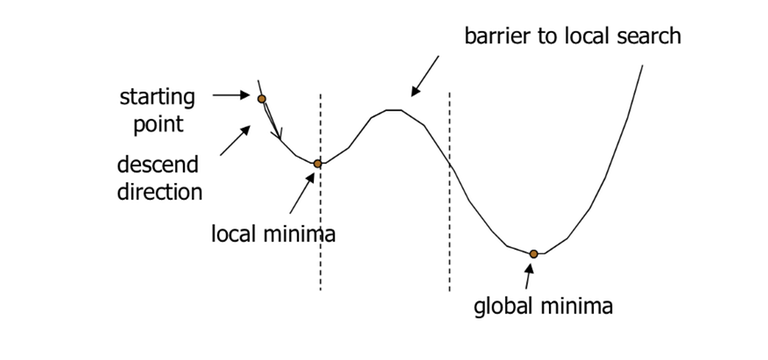

A similar algorithm, that is easier to understand, is called hill-climbing. The way hill-climbing works is that you start with a random state, you find any neighbor of the state, and set the current state to that neighbor if the value of the neighbor state is less than the value of the current state. A "neighbor" of some state is another state that you can get to by applying some small transformation to the previous state (I'll explain more about neighbors in the future). If you look at the image down below, the hill climbing algorithm would look like this:

- Start at some random $$$x$$$-value

- Change $$$x$$$ by either $$$-1$$$ or $$$+1$$$ (pick the smaller one). In this case $$$x-1$$$ and $$$x+1$$$ are the neighbors of the state.

- Repeat until both $$$x-1$$$ and $$$x+1$$$ are larger.

The issue with this algorithm is that it often gets stuck in a local minimum, instead of a global minimum.

Simulated annealing helps fix this issue by sometimes allowing a step to a worse neighbor, which could allow one to reach the global minimum, even if it isn't the same as the local minimum. At each step of the algorithm, you consider some neighboring state and probabilistically decide whether you move to the next state, or keep the current state. You repeat this step until you exhaust the time limit. The crux of simulated annealing is the acceptance probability function, which determines whether you'll take some step or not, depending on the temperature (a value that determines how big of a "bad" step you are going to take). In other words, the higher the temperature, the worse of a state you allow the algorithm to go to (as determined by the acceptance probability function). As iterations progress, temperature gets lower and lower, which causes the algorithm to take smaller and smaller steps until it converges at a global minimum.

Here is some pseudocode:

Let s = random(state) //get's any random valid state

Let best = s

while elapsed_time() <= time_limit:

Let t = temperature(elapsed_time()/time_limit) //returns temperature given the percent done

Let next = neighbor(s) //neighbor of a state

if value(s) < value(best):

best = s

if P(value(s), value(next), t) >= random(0, 1): //P -> probability of acceptance function, returns the probability that you'll move to the next state given the value's and the current temperature

s = next

print(value(best))

In the following sections I'll describe what each function means, and common examples of each function, as well as properties that each function should have.

Neighbor Function

A neighbor function is just a function that takes in a state, and returns a random new state after doing some transformation to the previous state. An important property of the neighbor function is that it doesn't change the answer by too much. Let's start with the example of TSP. An example of a valid neighbor function for TSP is where you swap two the order of two random cities in a state to get a random neighbor of the state. This only affects the values for those two cities, so the answer doesn't change much. Another important property of the "neighbor function" (i.e. how you decide what your neighbors are) is that it should be possible to get any state to any other state in a short number of steps. In the case of TSP, it only takes a linear number of swaps to get from any state to any other state, the hard part of the problem is deciding which swaps to take (which simulated annealing takes care of).

Note: The second condition is important for the accuracy of simulated annealing. For example, simulated annealing wouldn't really work well on a 2-d graph (like the picture I have above — that was purely to demonstrate the existence of local minima), as it takes a lot of steps to move from one end of the graph to the other.

Temperature Function

The temperature function decides how "willing" the algorithm will be to move to a worse state. In other words, if temperature is 0, you'd only ever move to better states (which becomes the hill climbing algorithm). If the temperature is infinity, you'd move to any state, regardless of how good/bad it is. In simulated annealing, you want the temperature to go from something high to something low. Initially, you want to be able to explore the different possible states, so you have a better chance at reaching the global minimum. At the end, when most of the time is used already, you want to keep taking better and better states, hoping that you're already close to the global minimum. There are 3 common temperature reduction rules:

- $$$t = t \cdot a$$$

- $$$t = t - a$$$

- $$$t = \frac{t}{1 + a \cdot t}$$$

Where $$$a$$$ is just some constant that you choose.

The most common one in my experience is the geometric one (the 1st one), where you start with $$$t_0 = 10^9$$$ or $$$t_0 = 10^5$$$ or some other high value and set $$$a = 0.99999$$$.

Acceptance Probability Function

This is the crux of simulated annealing. The acceptance probability function is the function that determines the probability of going from one state to a neighbor state. Let $$$P(old, new, temp)$$$ be the probability from transitioning from a state with value $$$old$$$ to a state with value $$$new$$$ if the current temperature is $$$temp$$$. If we were to use hill climbing, the function would look like this:

def P(old, new, temp):

if new < old:

return 1.0

else:

return 0.0

This is because you never transition to the next state if it's value is worse. The most common function that's used for simulated annealing is the Metropolis-Hastings Algorithm. This changes the acceptance probability function to:

def P(old, new, temp):

if new < old:

return 1.0

return exp((old-new)/temp)

Now, if the new state is worse you still might transition to it, which makes it more likely that you reach a global minimum. Another important thing to note is that as the temperature decreases, the probability that you transition to a new (worse) state also decreases, which is exactly what we wanted.

Example Problems

- Try implementing TSP

- USACO subsequence reverse

- DIY Tree

- AHC001 A suggested by TheScrasse

Thanks for reading! This was my first tutorial blog, so let me know if there's anything that should be added/changed, I'm always open to suggestions. If you have any other example problems, let me know and I'll add it to the list.

Additionally, thanks to moo. for proofreading the post!

PurpleCrayon moo. orz!

ORZ! I've been trying to learn simulated annealing and apply it to CP problems for so long. STO PurpleCrayon OTZ!

What is 'a' in the temperature function?

Also, 'Another important thing to note is that as the temperature decreases, the probability that you transition to a new (worse) state also decreases'. Shouldn't it be — 'as the temperature increases?'

$$$a$$$ is just some constant, as I mentioned later I usually use type (1) in the temperature function with $$$a = 0.99999$$$. I'll clarify this in the blog post, thanks.

No, it's correct. Notice that

old-newis negative whenold < newin thePfunction, which means that decreasing temperature would actually decreaseexp((old-new)/temp), which decreases the probability of transitioning to a worse state.Ohk thanks

Related problem: ahc000_a (and maybe problems from other AHCs)

Thanks, added.

There was a task in a local IOI-like Russian competition which can be solved by simulated annealing (among some other methods). You can find it in the Gym here. The statement is only available in Russian, but Google Translate seems to translate it correctly.

And in case someone is stuck, check out my submission (104988584).

My favourite story about using annealing in algorithmic contests: link

Thanks for a great blog on simulated annealing! How do you handle overflow/underflow (in the computation of

exp), and how do you ensure that the temperature becomes small enough so that the probability of getting to a bad solution eventually goes to zero? Is there a more systematic way of doing this, or is this done on an ad hoc basis?Whenever I do simulated annealing, I keep in mind the order of magnitude of the difference in the values of the two solutions, and adjust the initial temperature and $$$a$$$ manually, so that something like this holds: $$$|(old - new) / temp_{final}| \approx 10$$$, which is probably not the best thing to do, but works in many situations.

Yeah, that's essentially what I do as well. In my experience, it seems to work well, but I'm not sure if there's a more systematic way of doing it (so if anyone reading this knows a better way of doing this -- please let me know and I'll add it to the blog).

What's temp_final? The expected temperature by the end of time limit?

Yes, and that's to ensure convergence.

For me I sometimes do $$$t = t^a$$$ and the lower bound of $$$t$$$ is $$$1 + 10^{-7}$$$.

Does someone have a working solution using simulated annealing for USACO Subsequence Reverse? I'm finding it difficult to get past WA/TLE on cases 7, 9, and 10.

here is the code of it by 300iq solved using simulated annealing : https://pastebin.com/gBShL4MC

I think this task is also good for this topic. But main problem here is that for each test there is no to much time. However, I managed to get wa 7 (don't know if it is good, but I am not so good at this algorithm, so I think yes). I think it could be good to make part 2 of this blog with some cases, because, as you said, there is not very much blogs about it (in fact I found only your blog on Codeforces)