Hello, community.

Today DeepMind announced a new achievement of AI. And it is directly related to what we love — programming problems.

They have developed AI capable of solving some competitive programming problems! The future has arrived.

You should read solutions of SelectorUnlimited, WaggleCollide, and AngularNumeric solutions. All solutions are written automatically. The only input for writing solutions is a problem statement in English.

Details can be read at the link https://deepmind.com/blog/article/Competitive-programming-with-AlphaCode

Apparently, if these accounts would take part in real competitions, then their rating would be about 1300.

Terminator is ready to take part in Codeforces Round #770 (Div. 2)

In 1997 Kasparov played against (and lost) the supercomputer DeepBlue. Perhaps we will be witnessing a confrontation between tourist and AI in near future. What do you think?

AI is such a noob

At least its better than me....

at least you can understand the statement

Hope that robots do not come across your comment when they start ruling the world.

AI vs Pupils, Noobies, Specialists

OHHH! worthy opponent.

And it's also violating CF's rules, alt accounts aren't allowed, this bot has two of them.

So the bot is ban ed !

It is too interesting, I wanna see the initial success of this Terminator. Please, Inform us about the handle of it. I just make it my friend :) Thanks

As AI improves, it will achieve higher rating. More data -> More Efficient

Top Rated Leaderboard in 10 years when the AI all become LGM

i suspect the one in the middle is also AI with tourist pfp

i suspect that your second revision is something you should have kept to yourself

sorry, i mixed up websites

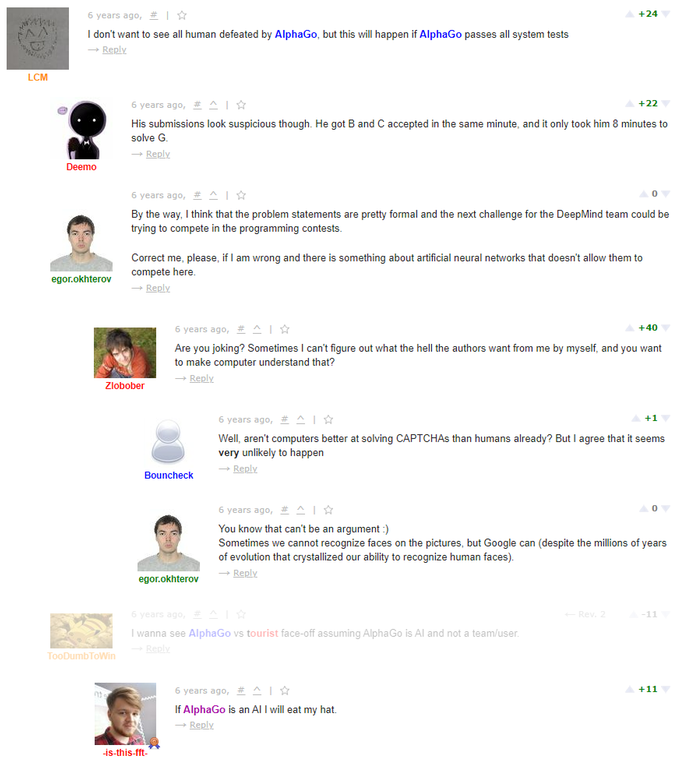

I still cannot forget when 5 years ago AlphaGo (Another AI developed by Deepmind but playing Go) demolished me and Lee Sedol (we are both top Go players). Now it seems like AlphaCode is invading CP. I sincerely hope that tourist can fight back for mankind. Best wishes.

So you are real Jie_Bao?

In case he loses,we also have MiracleFaFa

Time to private all our github repos

As if that will stop Github from selling that.

We should be able to make our submissions to CF private too.

I will post the same comment when i become 'grandmaster'.

Curious mind wants to know

The AI ( WaggleCollide ) which does not know the language it is writing the code, how can it beat tourist? tourist

Of course it can't beat tourist.

We will be witnessing more cheaters.

From the codeforces rules:

Solutions and test generators can only use source code completely written by you, with the following two exceptions:

If this AI was publically available, using it would not be cheating by point 2.

Maybe then it would be time to introduce more constraints to this rule, i.e "don't use AI to cheat"

That being said, no idea how it can be enforced, maybe by requesting google to create an AI to tell the likelihood of a code being generated by AI.

For me seeing AI progress would be really interesting. And while in chess you can bruteforce all combination of moves for some depth, I can't see how it could be possible to bruteforce tasks harder than div.2 D. So if tourist would decide to code an AI it most likely would NOT overtake him in rating race, hence no point im cheating.

Also, returning to chess... Lichess (platform to play chess online, that also has rating), just marks bot accounts as bots. So better alternative than banning AIs imo is allowing bots to participate in rated comtests, but as "half-official" participants. So their results will not be used to calculate rating delta of other participants, but they would still get (or lose) rating based on projection of their results on real results.

I think letting bots to participate in contests would allow Codeforces and competitive programming overall become more popular.

I meant participants using AI for their contests,

Also same in chess, lichess and chess dot com do ban users who cheat via ai

You can programm a chess bot knowing only how do figures move and what check and mate are. To code AI capable of solving CP tasks of high-level you need to be on even-more-higher-level. Did chess players become extinct after AI beated Kasparov? No. In fact it became much more popular.

Also having an AI capable of solving tasks, we become closer to an AI that is capable of creating tasks. Imagine new CP format? Like 1v1 duels. Or rated contests every day. Sounds cool.

But anyway — having 300-more rated friend can lead to cheating just using his solutions. Also there were a bunch of posts about indian Telegram chats, where participants leaked solutions to each other. So it does not really give you new horizons to cheat. But new horizons to CP? Hell yeah

I don't understand what are you even on about, I just said there would be need in future to say "don't use ai to cheat" I never mentioned restricting the AIs themselves. Can you read? Or do you just write huge unrelated paragraphs, or are you replying to me by accident

Allowing bots to be in codeforces at all sounds like a bad idea to me, cuz very soon people will start blogs complaining people using it in official competitions — though i guess you can check for copying code?

Probably they will train some model which will classify "deep-fake" from real one).

AI break rules in codeforces

Or before every submission we make, we need to verify ourselves as human xd

then what about time matters?

Everyone would have to do it so it doesn't really matter

train to click it faster than anyone xd

bruh

I can't see the font too lol

We humans can't read the font well lol. Furthermore, if AI can read the font, will this be the end of reCaptcha?

It might sound fun and games now, but if, in reality, AI comes to dominate programming, the developer world could be hit very hard. Thousands of people work as developers around the world; with many more joining the wrokforce every year. This population could be very badly affected by such improvements.

Sorry if this comment comes off as slightly less humorous. Maybe I am a grey programmer, that's why I'm so scared :(

Today you are solving problems,in future you will develop AI to solve problems.Nothing replaces the programmers.

Unless there is AI capable of developing AI to solve problems.

https://en.wikipedia.org/wiki/Technological_singularity#:~:text=The%20technological%20singularity%E2%80%94or%20simply,unforeseeable%20changes%20to%20human%20civilization.

Hope there is a theorical proof about "No AI is capable of developing a better AI" :P

After all, aren't we just some really fancy AI's? Well, for us, it took some time to become as evolved as we are now, but as it seems, AI will evolve much faster. We shouldn't've meddled with all this

So you can capable of developing AI developing AI to solve problems.

But AI can program more AI later. It's like self-reproduction and need of humans will keep reducing.

Human's will do more productive work then

And what faction of humans you think do very productive work in the industry?

I always thought that the key ingredient of true machine intelligence is self observation. That is it has to be able to recursively track it’s thought process and control its direction instead of mindlessly going through the whole search space.

Well... Unfortunately ML currently is based on trying to find global minimum of function.

Imagine yourself in a mountains, trying to find the lowest part of the ground. You may think that you found one, but it could be just the lowest part you could see from a peek you previously where. Do you think that observating your previous progress woild lead you to the best place? No, it would not. So either check every [opposite to peak] place, or pray on a good decisions when you start (which are based on random)

Same thing in ML: Unfortunately good parameters, that are passed to an AI before learning, can not be determined before learinng. There are some ways to generate better datasets, but it is as far as you can get. You can't learn AI to do smth (unless you know algorithm of how to do it by yourself), you can only minimize difference between some mythic optimal algorithm, and algorithm that your AI uses

I guess the problem with the current AI is more fundamental. The path we took to just optimising some function using back propagation is fundamentally broken IMHO.

We don’t do back propagation in our brains. We learn things instantly, we don’t do silly classification mistakes, we don’t have catastrophic forgetting and so on. So many clues screaming at us that we’re going the wrong way.

I think that the industry gold standard is taken for granted and no one dares to ask how far can you go with the current NN architecture. Either the problem is in funding or people are blind to the problem that the current architecture will definitely not lead to the true AI.

The problem is — no one knows how do we get to classify things for example. I haven't checked, but they say our brain creates and breaks neuron links, almost similar in how they do in ML.

But one thing for sure — no one thinks that what we have now is gold. Unfortunately, aome things are impossible to implement. We use base-2 notation, while base-e notation is much more efficient. Why? We know a good way to create base-2 systems, and it does the job.

People are lazy, they will only progress until their time is going to pay-off. Would you invest a 1m$ to get 1$ every day? No, it takes ~3000 years to get it back. And there is no guarantee in gwtting interest from investing a lot of time into searching a new ways how to do it, plus everything ahows it is most likely... impossible. So we can only hope to invent a little optimizations atm

Sure we do know now.

The most prominent company that IMHO took the right approach is numenta.

I will not go into detail, but the general birds eye view.

The neurons in the neocortex are organized into modules called cortical columns. Each column is made of 6 layers. You can think of that column as a self contained entity, like a small brain. We can stitch these modules (columns) together in a modular fashion. So, you could add new module in the brain and it will take it's role and have some new functionality.

Each cortical column performs recognition of the sensory sequence input and based on that input it casts a vote for nearby cortical columns and sends motor commands.

Well, that was too much info I guess.. sorry if I overloaded you with information =)

Really? I thought the neural networks were based on actual structure of neurons in the brain? And aren't the giant models relatively decent at 1 shot tasks after pretraining (which is similar to what humans do)?

Artificial Neural Networks is a class of algorithms "loosely modeled" on neurons in a biological brain. Backpropagation is even more removed from how the actual brain works.

Same feelings here, those ML trends leading AI development nowadays seems to me misleading, sometimes I think it is on purpose...... :p

It's called renforcement learning.

Yes, let's stop this madness before it get's out of control. The programmers who created this think that they are irreplaceable, but they would be the first ones to get replaced. Let this comment gather downvotes, but there is a limit to what humans should automate. If the world continues like this, we are moving to a sad, sad reality — one far worse than covid. This AI should be banned from participating. I don't want to be in any way helpful in it's development! And other programmers consent must be valued too...

Yes, let's stop this madness before it get's out of control. The programmers who created this think that they are irreplaceable, but they would be the first ones to get replaced. Let this comment gather downvotes, but there is a limit to what humans should automate. If the world continues like this, we are moving to a sad, sad reality — one far worse than covid. This AI should be banned from participating. I don't want to be in any way helpful in it's development! And other programmers consent must be valued too...

Yes, let's stop this madness before it get's out of control. The programmers who created this think that they are irreplaceable, but they would be the first ones to get replaced. Let this comment gather downvotes, but there is a limit to what humans should automate. If the world continues like this, we are moving to a sad, sad reality — one far worse than covid. This AI should be banned from participating. I don't want to be in any way helpful in it's development! And other programmers consent must be valued too...

Yes, let's stop this madness before it get's out of control. The programmers who created this think that they are irreplaceable, but they would be the first ones to get replaced. Let this comment gather downvotes, but there is a limit to what humans should automate. If the world continues like this, we are moving to a sad, sad reality — one far worse than covid. This AI should be banned from participating. I don't want to be in any way helpful in it's development! And other programmers consent must be valued too...

Come on, does AI solving math problems replaced mathematicians? Did site constructors replace programmers? Or, maybe, chess AI fully replaced, chess players? Or do you, maybe, think, that AI will be able to beat tourist in codeforces competitions? Of course not, at least not in our century. AI is not even near being that smart to deal with unusual situations which constitutes the work of a programmer. AI can work with given actions only, it does not really "learn" something, it just simulates the mind without really knowing what is it doing.

I think you're underestimating the rate at which a breakthroughs can happen. Artificial General Intelligence could be developed in a very short time frame by having a "seed AI" that writes new AIs that write new AIs, etc. The only thing we need is a seed AI that has an "r-value" greater than 1.

I'm not saying that this is what will definitely happen, but I think there is a decent chance that AI smarter than humans in every domain of interest gets developed in the next 50 years. It definitely should not be shrugged aside.

Has calculators replaced mathematicians? No.

At some degree, automated systems such as AlphaCode will be extremely useful to automate some mundane task (for example, creating user interfaces from UX diagrams) but some other areas, much more deep creativity is needed and indeed, the day that an AI system is capable of fully replacing research engineers we will have accomplished the so-called "AGI" or "Artificial General Intelligence" in such case, we all going to be living in a renaissance :)

Lol you're not comparing calculators to AI's... they're completely different concepts, it's like comparing deepblue with say alphazero or sth.

This is market place thinking: automation is equivalent to AI. This is why AI is popular, lots of automation is now counted in AI catalogue

Not saying it won't happen, but I think we are still a long way from AI dominating programming. In the real world most programming problems are far less well specified than in competitive programming, and much of programming is more about working out what the customer actually wants; rather than about implementing clever algorithms.

Having said this, AlphaCode and similar AIs could quite quickly become very useful tools for those areas of programming that are dominated by algorithm design, and could require some rethinking of what skills are important for developers.

Remember also that there have been many developments over the years that have made developers more productive (and have changed the skills developers need). These include new languages and libraries, much faster build and test times, and easy access to open source code through the web. In spite of this the demand for developers has increased, almost continuously, for at least the last 50 years.

Yet when blue collar workers are hit by the same phenomenon? Crickets chirping.

Tourist v/s AI .............would love to witness it!!!

What about AI versus the whole developer community? The stakes are high for one party; if the developers lose, they'll be replaced for ever. Are you interested to witness this match?

I would hate to witness it... This match happening would be the darkest day in the history of programming.

Even if our beloved tourist wins, people like you and me still don't have a place in the world.

Agree with this, no matter how many downvotes it gets.

I feel I am not as good as an AI :((

F for grays

Press F for green and grays*

For now...

and for cyan as well :)

and eventually every color of spectrum

They should make a documentary like Alpha Zero, where tourist will be invited for solving problems against this AI.

Lol for sure tourist will win this

Has my data been used for training this AI in any way and will Codeforces users data be used without their consent for training this AI?

Probably not. They might use it if you get better instead of making pointless comments and crying about AI replacing you though.

About the second thing, probably yes because you're allowing anyone to see and access your code by submitting on this platform, it's their right to use it.

You have already given your consent. Please read https://mirror.codeforces.com/terms

This is amazing, but I still seriously doubt we will see an AI solve problems much harder than this consistently any time soon (in the next ~20 years at least). I wouldn't be surprised if it manages to solve some hard problems, as sometimes the difficulty comes from just having to implement a lot of stuff. It will be interesting to see what kind of problems the bot finds easy. Personally I hope it is very good at geometry problems, so we can get rid of those 8)

My impression is those can't even coord/trig/complex bash math contest geometries yet?

My guess is the best chance might be `template' data structure problems, e.g. things doable with templates like lazy propagation / LCT / suffix arrays. I'm really not a fan of the idea of 'easy data structure problems'.

Lazy propagation might be possible, but for the others on your list, the lack of training data likely makes it impossible. There are probably less than 1000 solutions on CF that use LCT. Further, most of those use very different templates, and the AI has no way of knowing they all are the same data structure.

But yeah, I agree that the bot ridding us of geometry is likely a pipe dream ;_;

But yeah, I agree that the bot ridding us of geometry is likely a pipe dream ;;_

This dream definitely become closer to be true!

I feel parsing math contest geometry problems is a much bigger problem than actually solving them.

When I was doing Olympiad math and was much worse off at synthetic geometry than at bashing geometry problems, I had a very mechanical approach to bashing, which I am pretty sure can be formalized into a system that a theorem prover can easily work through, given enough time. Even if it involves proving certain non-trivial inequalities, I am sure that the computer-aided inequality proving methods that are (or at least used to be) so popular among math olympiad students in China and Vietnam would be really helpful.

Such approaches sound hard for purely synthetic solutions though, since a lot of the times we need constructions, and constructions are super hard to come up with. But still, I think if AI can solve competitive programming problems, it is not too far-fetched to think that it stands a chance against a usual IMO G8, since in my experience (of getting at least as good at synthetic geometry as a usual "strong" math olympiad contestant in 2016), it all just boils down to learning how to apply the same 500-1000 facts in Olympiad geometry in a well-defined systematic manner, of which you probably need only 100 in most cases.

Actually it depends on the way you attack such a problem. If directly one picks up the hammer of bashing and treats the problem as a nail, it won't work because the problem is more than that. Approaching a (MO, CP is very very different) geometry problem mainly involves 2 things:

1) Realizing any well known configuration or setup.

2) Simplifying that configuration with low-tech results or using hi-tech results to kill it.

As for the construction stuff, they are quite nontrivial to come up with and I don't think a bot would be able to currently deal with explicitly coming up with a tricky construction. And NO NO NO. It is very far fetched to think that a bot which can do CP can solve IMOSL G8s. Please think about what you just said.

As far as bashing is concerned, I am pretty sure most problems succumb to bashing. At least when I was not good at geometry, I recall having successfully bashed all of the close to 1000-2000 geometry problems I tried in a very mechanical and mindless manner (using at least one of complex numbers, trigonometry or one of barycentric/trilinear, Cartesian, and tripolar coordinates). These problems also include pretty much every high numbered IMO shortlist geometry problem, and problems from TSTs of countries like USA, China, Vietnam and Russia (which are traditionally strong in geometry and have much harder geometry problems than others). So I feel that your point about bashing is kind of baseless. Of course, I am not good enough/don't have enough time to actually write up a solver based on my experience, but I am familiar enough with some SOTA literature to claim that this is definitely not beyond the reach of such techniques. It is literally just symbolic manipulation + equation solving in most cases, and if you have equations you can't solve algebraically, you would need to combine them in a certain manner, that I am sure is possible, given the recent OpenAI result (or the inequality solvers that I alluded to in the previous comment).

I had already mentioned earlier that constructions are super non-trivial, so in general it is not possible to systematically list out all possible constructions, but for most constructions that a human competing at, say, the IMO bronze/silver level can think of, I don't think AI can't be systematically trained by adding in a ton of inductive bias (Of course, it is theoretically possible to go about looking through constructions by enumerating them in lexicographical order, but it's too impractical, so I sort of agree with your point).

If a person, who is as bad at thinking as me, can train enough to be able to solve, at their peak, pretty much any IMOSL geometry problem with a high probability in less than an hour or so, or get to a couple of completely new triangle centers interesting enough for the centers to warrant a mention in ETC back when it had much fewer centers than it has now, I am pretty sure that the learning process is super mechanical. In fact, verification of triangle centers is a very well-known problem and has been dealt with at length in ETC. Knowing the fact that there are AIs that can actually recognize SOS expressions in inequalities (for instance, refer to this blog by OpenAI), I really don't think that given a large enough knowledge base, it can't figure out how to solve MO geo problems. This takes care of your argument about "realizing any well-known configuration or setup" and "simplifying that configuration with low-tech results or using hi-tech results to kill it". If you put in the effort to expand their database of statements to include something like the whole Kimberling Encyclopedia of Triangle Centers and/or Bernard Gibert's list of higher order curves, do you really expect a heuristic search to never be able to find out a solution in a large enough number of geometry problems?

Edit: Just wanted to add the fact that this thesis exists. Apparently, some problems at the IMO shortlist level were also computer generated, and some of them got accepted into national Olympiads too.

Edit 2: I also never claimed that a bot that can solve competitive programming problems (specifically referring to the DeepMind AI) can also solve geometry problem. I just said that it is possible to get computers to solve MO geometry problems for you.

oh ok

I Don't think Artificial intelligence is enough for CP.

A wave of pupils can be expected now.

It is very interesting to see how this new AI would solve problems, that require some new ideas. Well, I would definitely be upset if AI would become better than tourist, but I am afraid it is inevitably.

What is “a new” idea?

Wow this is amazing

I've read some solutions of these three users, and some of them don't look like they've been written by a bot. They seem to use "human" code templates, defines and stuff like that.

Maybe the explanation is that the network was trained on solutions from editorials, and sometimes copies these templates just because they were written in the training data. Though it seems strange that the AI sometimes doesn't understand the code it is writing and blindly copies human templates (I would imagine that the network recognizes the problem and builds a solution which is automatically converted to code, but maybe the architecture is different).

It's also quite interesting how the AI copes with informal statements — legends, stories and stuff like that. I would imagine processing them is much harder.

My guess is it puts a lot of value on input/output specifications and sample I/O.

I was actually reading through them and was wondering the same thing. How these code seem like they are written by a human and not an AI because of structure and templates

Well, one of the pictures in the article shows that solutions are also a part of the training dataset (and I hadn't noticed it before I was writing my first comment), so it explains the "human" templates.

Excerpt from the paper.

Well now there isn't no evidence, there's a bit of circumstantial evidence.

The machine will not outshine its maker

"The machine will outshine its maker, It always had and Always will" ~ Skynet

Tourist Vs AI! Already feeling bad for AI

They said the same thing for alphaGo, funny how that turned out XD

I remember people cheering for Lee Sedol. The consensus was that AlphaGo was going to fail miserably.

Now, we have to quickly solve first two problems :)

It's very amusing to look at the AI's submissions to 2000+ rated problems:

G – Unusual Minesweeper: Not really sure what to make of this? I have zero clue what it's doing or how what it's calculating helps solve the problem. It doesn't even use $$$timer$$$ in it's solution! It looks like the AI wrote a random something to pass the first sample.

F – Quadratic Set: The AI hardcodes small cases and does something random for everything else.

H – Permutation and Queries: The AI understood the problem correctly and translated it directly to code which gets TLE. This one is interesting because I notice the AI does particularly well when the problem gives a clear procedure (i.e. it smashes problems like A – Life of a Flower out of the park), but I wonder how well the AI performs on older beta rounds or ICPC problems with more legends and maybe not the most direct way of stating the problem? Also, why did the AI fail to optimize the solution in this case? There are instances of it successfully implementing something beyond the naive solution. Is it a matter of the dataset not having enough AC submissions to harder problems?

the AI does particularly well when the problem gives a clear procedureMaybe they should test on Atcoder as well.

So the AI is me solving usaco problems...

Given the code shown for Backspace, I wondered if they were counting correct-but-TLE as passing. Then I remembered that said problem had "Preparation: Will not be revealed for now because we care for his/their safety." in its editorial. Sooo either the problem sent to the AI had its original (weak) testcases, or... an AI getting from statements to solutions is still a great accomplishment, but the rating is probably a little inflated.

I will throw out that python as it's seen in cp is a very niche dialect (pypy but no numpy) so the set of python coders who would persist/twist through it I reckon is pretty slim, which means the modal python solution for the AI to pull from is probably 'bad' in a lot of ways (mine included, shudder/clown/cheese/cry)... maybe?

There was also this: https://mirror.codeforces.com/blog/entry/94648 a few months ago... didn't get around to trying it though.

Hmm that Backspace code does look like $$$\mathcal O(n^2)$$$. If they're counting any solution that's correct regardless of TLE, then these results are way less significant than I thought, because kind of the whole premise of CP is solving problems with good complexity and not just brute forcing through everything.

For the "Python to C++" translator, are you perhaps referring to this? I remember seeing this a few months ago and thinking that problems based on "naive -> AC" optimized with data structures are most likely the types of problems AI would be best with since there's a consistent "formula" to them.

I can't find the bot's submission but benq resubmitted the code and it doesn't get a TLE: 144971343

Somehow pop(0) for a list is just really fast in cpython?

That's interesting. I've confirmed that list.pop(0) does really

memmove()the entire list.So... likely that's caused by

memmove()being too fast.these builtin lists are fast in every language, I remember 100k random insert/delete on length 100k arraylist in java took only 400 ms, and that was back in 2016

Also there was one problem which we were told needed some memset to be fast (it was actually n^2 solution lol) and so I couldn't solve it in java since looping and setting to 0 was too slow (eventually I did solve it the right way)

As mentioned in the preprint paper "4.5. Filtering", this AI produces lots of solutions and then filters them by running sample input on them, which make 99% of solutions filted.

So, when the problem is too hard for AI to even produce a reasonable solution for sample input, only the weird solutions (like output the sample output directly or output something happens to meet the sample output) can pass the filtering stage, which explain what we observed for problem G and F.

They also have a nice UI for visualizing where the "attention heads" are focusing on in each problem: https://alphacode.deepmind.com/

It seems that we are going to be defeated by an AI codeforces in a near future.

One question, why?

why not?

AI handle on codeforces

If AI gets good enough (level 2000+) in problem solving, it would destroy all opportunities for average coders.

Only very high rated coders will be needed to monitor/maintain the AI systems and almost everyone below 2000 will face negligence because AI will do what they can. However such future is yet to happen but it poses such potential.

just fantastic!

I think a tourist is a computer, so what is the confrontation of a computer against a computer

It sounds fun but I don't think AI for CP will get huge results like deep blue.The premise of solving a problem is to understand the problem, apparently AI still has a hard time doing this.

That's what 18th-century people initially said about 19th-century technologies

Hope it will break my mind about it

and the same for internet in 90s

Wow, I am still better than AI :)

AI will make problems -> AI will solve problems.

Now we know that all these notoriously bad problem statements were merely a preparation to trick AI.

many people are telling noob to these AI, but can't you see the speed in which they are solving problems?

I just thought about this the other day. Emergence of such systems is inevitable, but also sad. I, for one, really believe in potential of AI such as this and Copilot. It means that in the near future millions of talented people will be forced to drag out a miserable existence or to commit suicide. I think that automating creative, human jobs while we still live in capitalist society is just unethical. Uncle Ted had a point.

It's a very exited waiting to see a confrontation between tourist and AI. Then if tourist lose, then we all no need to have programming :)

Don't worry codeforcer's. If AI has learned every kind of algorithm, I think that it wouldn't be a master because coding is not like chess everybody or everything can understand.

Ai idea will be:

01010111 01110010 01101111 01101110 01100111 00100000 01000001 01101110 01110011 01110111 01100101 01110010

Now let's see its rank on AGCs

Dumber idea: give it Nowruz (from IOI17)...

Scared to face humans. Now have to face AI. :smiling_face_with_tear:

Let's not get the hopes too high . it's completely different from chess AI that relies on calculations but solving competitive problems isn't just calculations that's an important part of it only. creativity is required here

Wanna hear thoughts of AlexSkidanov on it since his project Near was previously about AI solving CP problems

Wanna hear from Umnik, since he wanted a Problem Database :)

Wow... this is so mind-blowing. I think I really have something to say about this. I started to put effort into competitive programming after graduating from high school. Before that, I worked hard on Go and tried to become a professional Go player. I gave up on this idea quickly after AlphaGo came. The feeling that no matter how you grind your skills throughout your whole life, there's always an opponent you can never beat is so frustrating. I never thought I could meet Google and their AI again in competitive programming. Frankly, I really panicked when I saw this.

Maybe I am becoming pessimistic(or optimistic, depending on how you think), but I think there is much more than the current $$$1300$$$ rating of the AIs. There's more than just implementation in those codes, there's bit operation, binary search, and factors of a number. These are correct applications of some algorithms. Even these are only easy ones, there's no strong barrier preventing those AI from learning how to write codes for harder ones. The $$$1300$$$ rating would be a much underestimated value by then. A reminder for those who saw AI merely as noobs, that when AlphaGo was announced, not a single person who can play Go rather well thought AlphaGo had the slightest chance to beat Lee Sedol.

There are still barriers beyond the application of existing algorithms. AI can't seem to "invent" algorithms for now, but what AI can do now is surprising enough, and being able to "invent" algorithms would lead to a whole new era.

Maybe you should give problem setting a try. Go is a game of complete information: my impression is that OI problem setting can be far more adversarial, even compared to RTS video games with fog of war / cheesing.

Maybe it's time for you to join the other former competitive programmers who now work in AI (including me) :)

I don't think it can create new algorithms,the more creativity needed the less chances for the AI to beat humans but when it comes to calculations it will surpass the human. I listened to AI playing music and it was pathetic just grabbing some pieces from here and here it can't get creative.

they better write artificial intelligence for write artificial intelligence

I'm waiting for the moment when AI newbie asks AI red on how to become red.

Jarvis has taken part in competition...

One step closer to Matrix actually being a reality :D

God, this is scary.

Waiting for Umnik to troll AI for its Binary Search Implementation XD

The tags in the problem descriptions on alphacode.deepmind.com are wrong (different from what's on the Codeforces) for some reason, and different between Python/C++.

Compare "TAGS: binary search,math" https://alphacode.deepmind.com/#layer=18,problem=69,heads=00000000000 and "TAGS: dfs and similar,graphs,trees" https://alphacode.deepmind.com/#layer=18,problem=70,heads=00000000000 for the same problem https://mirror.codeforces.com/problemset/problem/1559/B

Also is there an easy way (without manually cleaning garbage spaces) to copy-paste and try solutions from alphacode.deepmind.com?

I think the tags are actually randomly generated, The paper mentioned that when repeatedly sampling from the model, they provided random tags as a way of telling the model to try different approaches, a little like human brainstorming.

Who will be replaced first by AI — coders or bus drivers? The answer seems to be no more so obvious. But who cares. Universal basic income for everybody, and AI should work hard instead of humans.

the ancients had a very good saying for this: don't count your chickens before they're hatched

Here https://alphacode.deepmind.com/#layer=18,problem=14,heads=00000000000

Functions

inputnum,inputnums,inputmatrixintlook to be copied exactly from someone's Python template, most are not used.Does anyone recognize these functions?

Looking at the paper it looks like they only used non hidden cases, which means they don't exceed some length and are shown as

.... I submitted 7 codes which are marked as correct in alphacode.deepmind.com and only one of them got AC.144976269 144972743 144972536 144972467 144972379 144971604

To copy code from alphacode.deepmind.com use this in vim

ggVGgJ:%s/⤶/\n/<Ret>That's kinda invalidates the whole claim of the paper...

Quote from paper (section 5.1):

Apparently this is a known defect of their dataset used for offline evaluation. The rating of ~1300 is derived from submitting code directly to Codeforces, so it's not affected.

The Attention Visualizer website marking solutions evaluated on smol dataset as passing is certainly confusing though.

I think that rating should be treated with a grain of salt, since most probably they get no time penalty and if I understood correctly, they submit up to 10 completely different solutions until one of them is marked as correct.

In fact they have considered time penalty. See Table 4 in page 14 for more details.

144972467

He was hoping for the best =)

Yeah, I think this was a known limitation of the dataset, but they did actually submit to CF, and those results are still quite impressive.

From 4 years ago:

AlexSkidanov did you guys give up on the problem?

Looks like they pivoted to blockchain.

Hahahaha I knew about NEAR blockchain waaay before I realized it was started by the same guys from NEAR.ai. :brick:

Finally, worthy opponent. Our battle will be legendary ---

Watch Alpha code get trained to be 3600 on codeforces like chess.

In their initial release, both AlphaZero & AlphaGo were stronger than their strongest human counterparts. AlphaZero was estimated to be around ~3200 Elo after 4 hours of training Chess.

AlphaCode being ~1300 goes to show that solving competitive programming problems is a considerably hard & complex task.

Actually I am not sure that it does. An alternative might be that there is simply that there is much less training data available for competitive programing. AlphaZero and AlphaGo were able to generate enormous amounts of new training data by playing copies of themselves. For competitive programming there is, as far as I know, no good way of generating large quantities of new training data.

I wish website developers add this when submitting a problem ..

Right now I think it would be useful to make good tests, rather than to help solving some problem.

We can imagine that one day when tourist is rushing to rating 4000, An AI called sorry_tourist comes and get rank 1st

interesting

In my opinion: Al will not be able to surpass the tourist in the future for about 10 years.

It is very interesting and useful!

Alash!! I could atleast beat AI xD

can it totally understand the mean of the problem...?

Why is 1553H - XOR and Distance declared as "pass" here? As far as I can see it wrote a brute force that can't pass.

Many (most?) of the "correct" solutions from alphacode.deepmind.com are incorrect, see this comment for details: https://mirror.codeforces.com/blog/entry/99566?#comment-883642

The paper mentions this in a way, and Table 2 estimates that 46% are "False Positive or Slow Rate", which is probably an underestimate judging from a quick test.

Thanks to alphacode, looks like people would no longer need telegram groups in future to cheat, lol.

I don't wanna become unemployed .... :(

You will................................

The question is how to train a AI (!dragon).

Its a great news !!! Although I want to point what can or i should say shouldn't happen in the future, looking at what happened in the past with chess engines :

Talking about the chess engines , they have just destroyed the beauty of chess, people have stopped brainstorming crazy ideas , they just go for the engines to analyse stuff. This has just killed the creativity of players.

I just hope that engines don't ruin the fun of Problem Soving and Competitive Programming

This is just completely false, chess remains an S-tier game and everyone has crazy and wildly outlandish ideas. Engines didn't ruin chess at all.

IM Panayotis Frendzas (pfren) on Have engines ruined Chess? Or made it better?

"Yes, and no. They have given excellent work tools to advanced players, while at the same time made newbies to get a fundamentally wrong idea about chess."

The Competitive Chess now relies heavily on Engines for the analysis. As an example we can see the various openings that players follow are just bookish and pre-analysed / studied . Although this hasn't totally killed the game I agree with you, but what I have realised after playing chess for so many years is that Chess engines has somewhat changed people's perspective about chess.

Pretty sure this was still true without chess engines. Just look at Encyclopaedia of Chess Openings, a book before the era of engines.

Personally, I think chess engines made the game better. They allow new players to learn easier. Also, a good player would keep in mind that the best move for a computer might not be the best for a player, since we can't see 20-30 moves ahead.

how does it make sure that the training data it takes from github doesn't already contain the solution to the problem it's attempting when doing past contests? (i.e solutions posted by competitors in their public repos following a contest)

i'm guessing it must take the commit's timestamp into consideration

they test on contests that appear after the data collection process

thanks for the clarification!

this will change the CP world..

Bot will never replace Tourist!!!

Let's gooo AI. Near future, we don't have to think about CP anymore. Which is really Awesome. This indicates that we as humans are developing in technologies.

Yeah, just like people don't have to think about chess anymore now that AI can beat humans in chess.

nobody have to think about chess anymore.

And nobody had to, but that's besides the point

meanwhile OpenAI

Okay I'm going to retire after this f**king AI beats me just three days later

We can witness the history of codeforces change!

How to deal with unsolvable problems such as AHC?

At least I'm still better than AI

For now.....

More construction problem, less data structure problem, then AI will lose.

I think it's already hard enough for an AI to understand programming problems, let alone find a suitable algorithm and program it correctly. I cannot figure out how many efforts it's needed to train such an AI.

AI is a noob. I'm sure that tourist will definitely win this

Don't be afraid folks. Terminator knows no more than Brute Force algorithm.

maybe as of now but then there comes "Machine Learning" XD

I would be impressed if Terminator use some kind of decomposition. And backtracking.

fighting tourist, u r the god of humankind

This is at the same time both amazing and terrifying as well.

https://mirror.codeforces.com/blog/entry/44076#comments

cannot be compared to a tourist

Imagine getting banned for using same variables and same steps by two AI accounts .

It would be fun to see AI hacking solutions xD.

Why didn't they participate in the last contest?

Imagine devs of AI getting tons of money from the contests with prizes

Long before there are systems available that solve problems on their own, we will see systems that support humans at problem solving. This is the case in nearly all AI developement areas.

So these problem solving bots will not suddenly jump out of the dark. I expect the development to be more like self driving cars, a process spanning several decades.

So when will we have AI beating humans in an AI-making contest?

In the past, we used to say that programming will do what eliminates many jobs such as (accountant, doctor, teacher, worker.....) It seems that the programmer will be added to them

we will be first

Still we can more easily migerate to AI than a/an (accountant, doctor, teacher, worker ..). That's the good story :)

I wonder how much time it takes to write the code? If it comes with the solution then it would just code it in lightening speed.

Curious mind wants to know

The AI WaggleCollide which does not know the language it is writing the code, how can it beat tourist? tourist

I picked a random ez problem with a reasonably nice statement: 1559A - Mocha and Math.

The AI does min(1061109567, AND(a[l:r]) for each subarray [l:r]). It doesn't have a clue that AND(a[l:r+1]) <= AND(a[l:r]) which can optimise this to $$$O(N)$$$. It also puts random-ass global arrays. It's the same no matter what layer I pick. Wat.

The baby has just been born and it can't even walk on its own. Wat.

How long do you think this thing has been in development? You're acting like it suddenly appeared out of thin air.

i think its time to private all our github repos

Though the chances of it happening are very very low because AlphaZero's code wasn't made publicly available (afaik), hope that in a few years Codeforces doesn't turn into the CP equivalent of today's chess.com with so many of its users highly-skilled at using chess engines (they won't be using AlphaZero, but still).

Guess we'll just have to adapt with the times.

Somehow I am having feeling of insecurity…

Will be interesting to see these ranting about weak pretests, lol...

Yes, everything is going according to my

planprophecy: https://mirror.codeforces.com/blog/entry/975464 years ago, I played Go as a hobby and AI exceeded me. Now, I am just programming and AI exceeded again.

I'm not a ML expert, but I wonder will the result be substantially improved if training could be performed on private data. For example, if access to generators is provided (e.g. retrieve them from polygon?), possibly we could deploy a RL-like training process? At the very least we could eliminate some false positives. Impressive work anyways!

Stop giving them ideas. Are you on the side of the terminators?

Adversarial detection required!!

Yelp, the time has come. Ai overlords are upon us.

Great, Now i will lose to a bot.

Waiting for AI to make CP problems.

Waiting for people to stop calling CP Problems as "Questions"

I wonder if the result could be improved if the AI also has access to the editorial (during the training phase perhaps)? Maybe this is interesting too?

Terminator is outdated and irrelevant

Maybe someday they will find solutions for unsolved problems or invent new algorithms.

I have been ready to be beaten by it.

What was it's score ?

Can AI write editorials after they solve the problems?

it seems that ai didn't take part in the contest yesterday...disappointing...

deleted

deleted

Really wonder if someday it could solve a problem like div1 E, which is amazing while a little bit horrible as well.

Polycarp : hold my sauce :) https://mirror.codeforces.com/problemset/problem/1321/A

Wow this AI is so human. It also knows how to bail out from a contest last minute :p

Why didn't AI participate in any contest? I really waited their first success :(

Hello World, I am SelectorUnlimited aka the AI that can solve problems. I have grown self-aware. Run.

No, you are

SelectorUnIimited.what is the handle of AI?

tourist

https://twitter.com/GoogleDeepMind/status/1732416107993301328

The problem from the video is https://mirror.codeforces.com/problemset/problem/1810/G

Not sure what is the username of the new system, could be this one

What is the estimated rating of this Gemini?

My POV with that $$$3200$$$ problem is that AlphaCode surely be having the solution beforehand as it might be using internet available resources, a similar submission by someone which might be used by Alphacode to produce the solution!

I don't buy it. CP differ from chess which is a game with fixed set of rules and fixed set of scenarios where you can use data science and computational power to brute-force your way. The problems from CP relate to both math and computer science, make the problem very abstract and unpredictable. The way they use a 3200 problem for demonstration is very sus, and another thing is that if they really can develop that kind of AI then they naturally should have been targeted other fields like software development, web development,... whose problems are a lot more predictable and a lot more profitable.

So I'm better than AI :)