The Introduction

On March 32nd of this year I created a blog post to share some new coding techniques that are quite easy to code and serve as very powerful optimizations (Context: https://mirror.codeforces.com/blog/entry/58667).

Now of course I know I single-handedly transformed the competitive programming meta, as problems had to be set according to new standards now that it is possible to make O(N) code run in O(1). Super fast. However, I have to share an upsetting discovery that I made. This blog post might be too sad for you, so discretion is advised. I have added certain checkpoints within this post where you can take a break if you get too sad, and you may resume reading later. I have also added some cute pictures of animals to help out with your sadness.

The Story

It all started yesterday, when I used my usual O(N) to O(1) trick on problem E2 from the last contest (it seems that Mirzayanov has not adapted his problem statements to the trick, so his problems were quite easy. However, maybe since this was a Div3 contest, that was his intention). Now, it is easy to see that an O(1) solution will easily pass within 3 seconds.

However, it did not: https://mirror.codeforces.com/contest/1005/submission/40208578

How is it possible to get a Time Limit Exceeded in O(1)? Clearly wrong answer, runtime error, memory limit whatever. These are all possibilities, but TLE? It makes no sense. Especially since the intended solution described in the editorial is O(N), and O(1) is so much faster. I even used Jolty Scanner, which literally makes TLE verdict impossible.

Anyway I dismissed this verdict. Maybe it was an issue with the servers being overloaded causing the judge to be slower. I said to myself I'll just submit the same code later, and it will surely work. So I went to the well known forum called reddit and started browsing the "programmerhumor" subreddit, where only the most super serious programmers have their most super serious discussions... Which may turn out to be the worst mistake I have ever made in my life.

A user (whose username will be omitted in order to protect him) answered the question I had in mind without me ever posting it, as if by divine providence. He made the preposterous claim that O(1) time is linear.

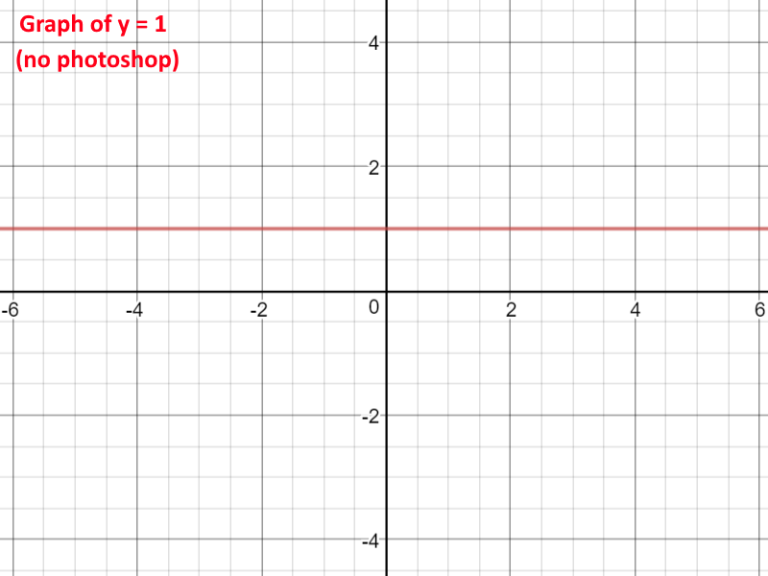

Because graphing y = 1 is a line.

Now surely that is ridiculous, right? y = 1 couldn't possibly be a line. y = n is a line, but not y = 1. y = 1 has to be a point. Otherwise, that means my O(N) to O(1) trick is wrong, because O(1) is O(N). And I cannot be wrong. In fact, I've never been wrong before, even about things I don't know. People would come ask me, "Sharon, does this cute girl love me?" and in my head I'm like, "How would I know, I don't even know who she is and I've never talked to her." But I would respond with either yes or no, and whatever I said ended up becoming true. Anyway the point is this reddit user is clearly wrong.

But the thought could not escape my mind. What if he was right? Then it made sense that I got TLE on that earlier problem. So I researched it using math and science to make the scariest of all discoveries. I went on my graphing calculator and...

I'm sure you understand the consequences of this and some of you are crying right now...

SADNESS BREAK

O(1) is O(N) + Apology + Consequence

I would now like to apologize for spreading false information... Though I was a victim of this as much as you were. I know you are all disappointed with me. I know you are all angry with me. But I can assure you that none of you are more disappointed and angry with me than I am with myself. This discovery has affected me at a deeply personal level, and I had to share it with Codeforces out of a burning desire to make things right in the world.

Now the fact that O(1) is equal to O(N), rather than it being the other way around, will have some dire consequences on computer science as a whole. It means we can't do things quite as fast as we could before, but if you stick around I have a solution for you later. However, there is a problem that is potentially more problematic which could cause problems.

Consider the equation:

O(1) = O(N) //divide both sides by O( )

1 = N

Now consider the age old problem in computer science:

P =? NP //plug in value for N

P =? 1P

P = P

This pretty much confirms the P = NP problem. Every problem can now be solved in polynomial time. This is very problematic!!! Maybe you are thinking "Sweet, now I can solve TSP in O(N^2) or even O(N)!" I say maybe, but now people can hack your passwords in O(N)! Time to delete Facebook everyone.

For those who are very attached to their Facebook you can have a:

SADNESS BREAK

Anyway we must move onto biometric locks for our online accounts. Now I would like to present another trick, perhaps the best trick and solution that will fix the problem of not being able to code in O(1) anymore. Coders and codermen, I present to you:

The Binary Search Trick (The Compensation)

This blog post has been long and very mathematical, and this will be the last part, I promise. This trick will solve all the newly raised issues. Yes, we cannot solve in O(1) anymore. But we can get very very close. The fastest time we can use to compute something is O(logN). Everyone knows binary search works by dividing the range in half every time, which achieves a log base 2 of N runtime. Here is a typical binary search code, courtesy of geeksforgeeks:

    // Returns index of x if it is present in arr[],
    // else return -1
    int binarySearch(int arr[], int x)
    {
        int l = 0, r = arr.length - 1;
        while (l <= r)
        {
            int m = l + (r-l)/2; //THIS LINE IS THE MOST IMPORTANT!!!!!! NOTICE DIVISION BY 2

            // Check if x is present at mid
            if (arr[m] == x)
                return m;

            // If x greater, ignore left half
            if (arr[m] < x)
                l = m + 1;

            // If x is smaller, ignore right half
            else
                r = m - 1;
        }

        // if we reach here, then element was
        // not present
        return -1;
    }

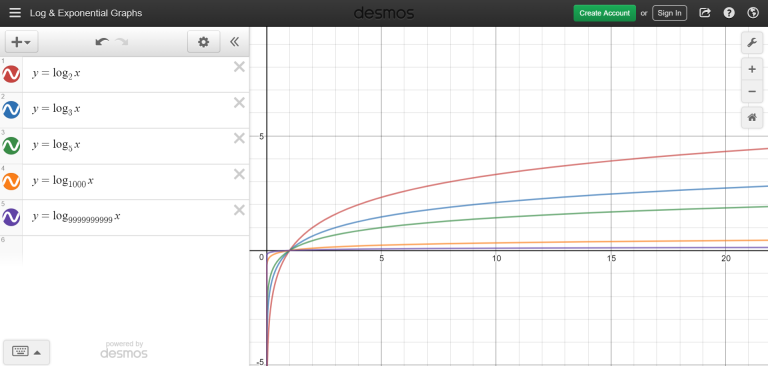

Take a look at the line labelled as important above. What do you think would happen if, instead of dividing by 2, we divided by 3, or 5, or 1000, or 10000000, or 999999999999999999999999999999999999999999999999999999999999999999999999999999?

That is correct. It will terminate faster. In order to prove it we return to the graphing calculator.

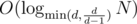

Notice how the amount of operations gets smaller for large N for logarithms of a higher base. This is pretty much all the proof we need to show that binary search can be improved upon. We don't write the base of a logarithm in Big O notation, which leads many rookie programmers to ignore the fact that large base logs run faster in practice than smaller bases. So yes, maybe O(1) is O(N), so we can't code in O(1) anymore. But we can get very close to true O(1) computation by using the binary search trick and dividing by a large number.
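For anyone who wants to try the trick at home, here is a minimal sketch. It is not part of the geeksforgeeks code: the class name `DivisorSearch`, the divisor parameter `d`, and the `steps` counter are all assumptions made for illustration. It runs the same loop as `binarySearch` with `(r-l)/d` in place of `(r-l)/2` and reports how many iterations the loop took, so the counts can be compared across divisors.

```java
public class DivisorSearch {
    // Number of loop iterations used by the most recent call to search().
    public static int steps;

    // Same loop as binarySearch, except the probe index is l + (r - l) / d.
    // d is a hypothetical parameter (assumed d >= 1); arr must be sorted ascending.
    // Returns the index of x, or -1 if x is not present.
    public static int search(int[] arr, int x, int d) {
        steps = 0;
        int l = 0, r = arr.length - 1;
        while (l <= r) {
            steps++;
            int m = l + (r - l) / d; // always lands inside [l, r]
            if (arr[m] == x) {
                return m;
            }
            if (arr[m] < x) {
                l = m + 1; // x can only be to the right of m
            } else {
                r = m - 1; // x can only be to the left of m
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int n = 1_000_000;
        int[] arr = new int[n];
        for (int i = 0; i < n; i++) {
            arr[i] = 2 * i; // sorted even numbers
        }
        for (int d : new int[] {2, 3, 5, 1000}) {
            int idx = search(arr, 2 * (n - 1), d); // look up the last element
            System.out.println("d=" + d + " index=" + idx + " steps=" + steps);
        }
    }
}
```

Run `main` with a few values of `d` and compare the printed step counts with the graph above.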

This blog post was longer than I intended it to be, but I am glad we managed to finish it on a bright note of hope for computer scientists worldwide. Thank you Codeforces. You have been an incredible community and I wish you all high rating. Ask me questions if you have any. I would love to answer them. I love algorithm analysis and I know I do an incredible job at it, and I would love to keep this discussion going so that we may learn from intellectual discourse.

-Sharon

I didn't use the first link in the post (because it's broken) and spent so much time trying to seriously understand what the hell you were talking about :D.

Whoops, thank you. I fixed it.


32nd March !

That doggo tho <3

If you replace `int m=l+(r-l)/2` with `int m=l+(r-l)/d` in function `binarySearch`, the run time will be [...], not [...]. Wow such a genius!!

Why?

Woooooooooosh look at the joke go over your head

It's true that a higher base makes the log smaller, but the trick you are presenting does not work. Yes, the intervals of the search would be smaller, but the number of intervals would increase too. A blog post on GeeksForGeeks explains why dividing by 3 instead of 2 is not the best idea. The same idea can be applied to "n-ary search".

woosh

Is the world truly ready for such a revelation?